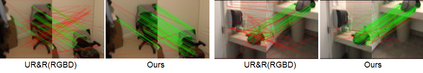

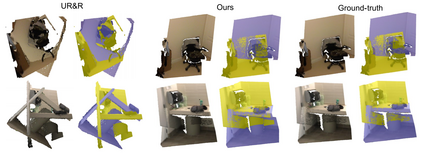

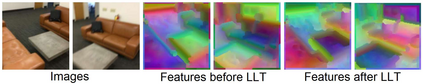

Point cloud registration aims at estimating the geometric transformation between two point cloud scans, in which point-wise correspondence estimation is the key to its success. In addition to previous methods that seek correspondences by hand-crafted or learnt geometric features, recent point cloud registration methods have tried to apply RGB-D data to achieve more accurate correspondence. However, it is not trivial to effectively fuse the geometric and visual information from these two distinctive modalities, especially for the registration problem. In this work, we propose a new Geometry-Aware Visual Feature Extractor (GAVE) that employs multi-scale local linear transformation to progressively fuse these two modalities, where the geometric features from the depth data act as the geometry-dependent convolution kernels to transform the visual features from the RGB data. The resultant visual-geometric features are in canonical feature spaces with alleviated visual dissimilarity caused by geometric changes, by which more reliable correspondence can be achieved. The proposed GAVE module can be readily plugged into recent RGB-D point cloud registration framework. Extensive experiments on 3D Match and ScanNet demonstrate that our method outperforms the state-of-the-art point cloud registration methods even without correspondence or pose supervision. The code is available at: https://github.com/514DNA/LLT.

翻译:点云登记旨在估计两点云扫描之间的几何变化,其中点光度估计是其成功的关键。除了以往寻求手动或学习的几何特征通信的方法外,最近的点云登记方法还试图应用RGB-D数据实现更准确的通信。然而,将这两种不同模式的几何和视觉信息有效结合起来,特别是对于登记问题而言,并非微不足道。在这项工作中,我们提议一个新的几何-软件视觉特征提取器(GAVE),采用多尺度的本地线性转换,逐步结合这两种模式,其中深度数据中的几何特征作为以几何为依存的共振内核,以转换RGB数据中的视觉特征。结果的视觉地理特征在可视性空间中,由于几何变化而导致的视觉差异减轻,从而可以实现更可靠的通信。拟议的GAVE模块可以很容易地插入最近的RGB-D点云层登记框架。在3DMatch和扫描Net上进行的广泛实验,表明我们的方法超越了RGB4号/RVALT的可使用的方法。