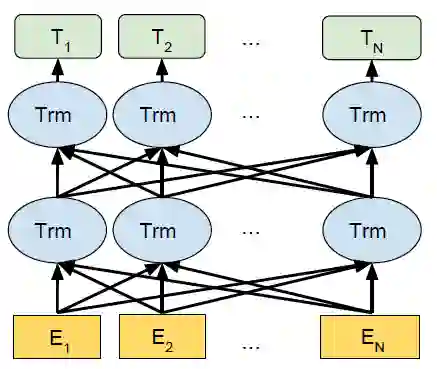

Large-scale pre-trained language models have demonstrated strong knowledge representation ability. However, recent studies suggest that even though these giant models contain rich simple commonsense knowledge (e.g., birds can fly and fish can swim), they often struggle with complex commonsense knowledge that involves multiple eventualities (verb-centric phrases), such as identifying the relationship between "Jim yells at Bob" and "Bob is upset". To address this problem, we propose to help pre-trained language models better incorporate complex commonsense knowledge. Unlike existing fine-tuning approaches, we do not focus on a specific task; instead, we propose a general language model named CoCoLM. Through careful training over the large-scale eventuality knowledge graph ASER, we successfully teach pre-trained language models (i.e., BERT and RoBERTa) rich complex commonsense knowledge among eventualities. Experiments on multiple downstream commonsense tasks that require a correct understanding of eventualities demonstrate the effectiveness of CoCoLM.
