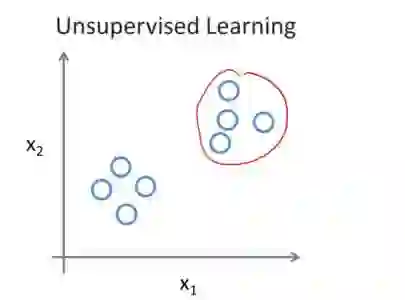

Recent unsupervised learning approaches use a Siamese-like framework that compares two "views" of the same image to learn representations. Making the two views distinctive is key to ensuring that unsupervised methods learn meaningful information. However, such frameworks can be prone to overfitting if the augmentations used to generate the two views are not strong enough, which leads to over-confidence on the training data. This drawback prevents the model from learning subtle variance and fine-grained information. To address this, we introduce the concept of distance in the label space into unsupervised learning. By mixing samples in the input data space, we make the model aware of the soft degree of similarity between positive or negative pairs, so that the input and loss spaces work collaboratively. Despite its conceptual simplicity, we show empirically that our solution, Unsupervised image mixtures (Un-Mix), learns subtler, more robust and more generalized representations from the transformed input and the corresponding new label space. Extensive experiments are conducted on CIFAR-10, CIFAR-100, STL-10, Tiny ImageNet and standard ImageNet with popular unsupervised methods such as SimCLR, BYOL, MoCo v1 and v2, and SwAV. Our image mixture and label assignment strategy obtains consistent improvements of 1 to 3% while following exactly the same hyperparameters and training procedures as the base methods. Code is publicly available at https://github.com/szq0214/Un-Mix.
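The abstract does not spell out the exact mixing or label-assignment rule, so the following is only a minimal pure-Python sketch of the idea under stated assumptions. It assumes mixup-style global mixing in which each image is blended with its partner in the reversed mini-batch using a coefficient `lam` drawn from a Beta distribution, and it assumes the "soft degree of similarity" is realized by weighting the base method's pair loss against each mixing source by `lam` and `1 - lam`. The helper names (`mix_batch`, `soft_mix_loss`, `pair_loss`), the BYOL-style cosine loss used as a stand-in, and the identity "encoder" in the demo are all hypothetical; the released code at the repository above may differ.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def mix_batch(images: Sequence[Vector], lam: float) -> List[Vector]:
    """Blend image i with image n-1-i (the batch in reverse order).

    Assumption: global, mixup-style pixel blending; images are flat float lists.
    """
    n = len(images)
    return [
        [lam * a + (1.0 - lam) * b for a, b in zip(images[i], images[n - 1 - i])]
        for i in range(n)
    ]


def cosine(u: Vector, v: Vector, eps: float = 1e-12) -> float:
    """Cosine similarity; eps guards against division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / max(norm, eps)


def pair_loss(p: Vector, z: Vector) -> float:
    """Hypothetical stand-in for a base method's positive-pair loss
    (BYOL-style normalized regression: 2 - 2 * cos)."""
    return 2.0 - 2.0 * cosine(p, z)


def soft_mix_loss(mixed_emb: Sequence[Vector], target_emb: Sequence[Vector],
                  lam: float) -> float:
    """Soft label assignment (assumed form): the embedding of mixed sample i is
    pulled toward the target of image i with weight lam and toward the target
    of its partner n-1-i with weight 1 - lam. Returns the batch mean."""
    n = len(mixed_emb)
    total = 0.0
    for i in range(n):
        own = pair_loss(mixed_emb[i], target_emb[i])
        partner = pair_loss(mixed_emb[i], target_emb[n - 1 - i])
        total += lam * own + (1.0 - lam) * partner
    return total / n


def encode(batch: Sequence[Vector]) -> List[Vector]:
    """Hypothetical identity 'encoder' so the demo runs without a network."""
    return [list(img) for img in batch]


if __name__ == "__main__":
    random.seed(0)
    lam = random.betavariate(1.0, 1.0)  # assumed Beta(1, 1) prior on lam
    view1 = [[random.random() for _ in range(4)] for _ in range(8)]
    view2 = [[x + 0.01 * random.random() for x in img] for img in view1]
    loss = soft_mix_loss(encode(mix_batch(view1, lam)), encode(view2), lam)
    print(f"lam={lam:.3f} loss={loss:.4f}")
```

With `lam = 1` the mixed batch equals the original batch and the loss reduces to the plain pair loss, so in this sketch the soft weighting generalizes the usual hard "same image" positive label rather than replacing it.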
