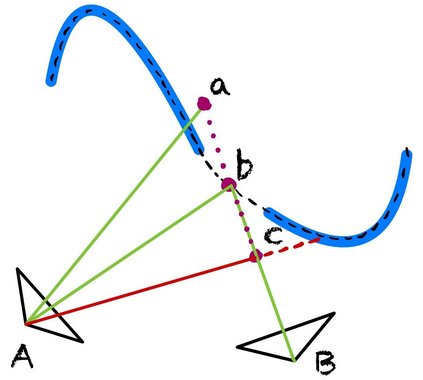

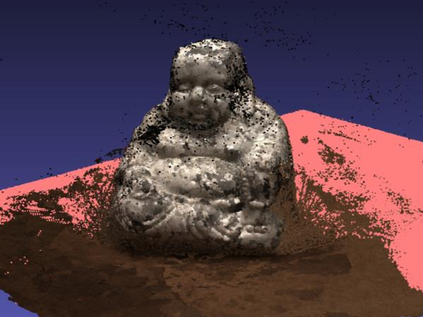

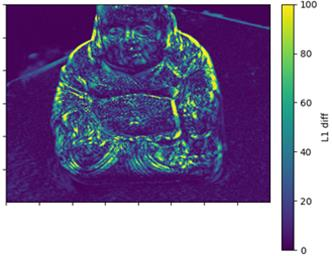

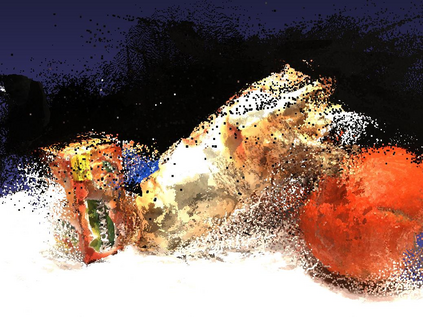

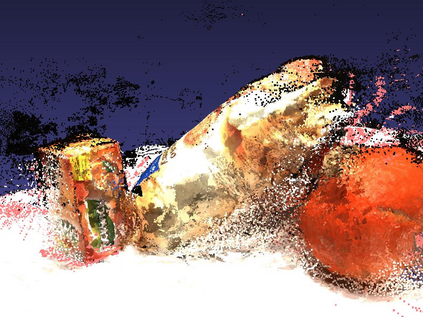

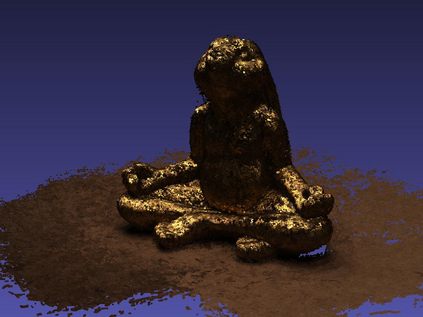

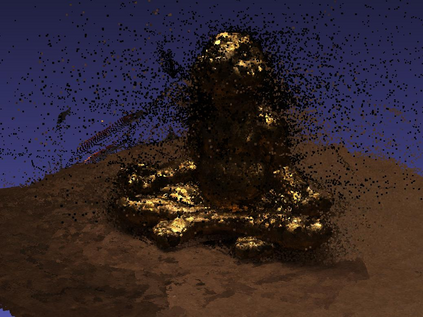

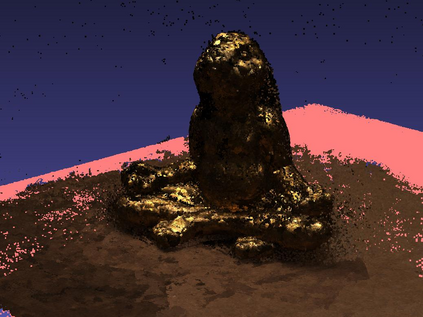

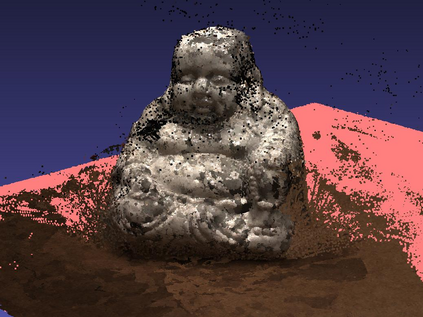

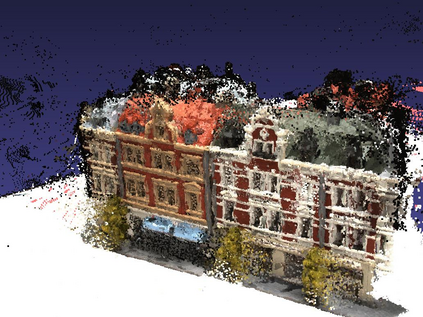

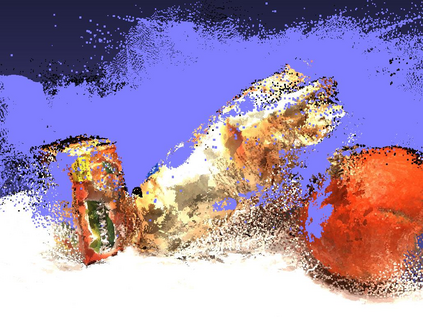

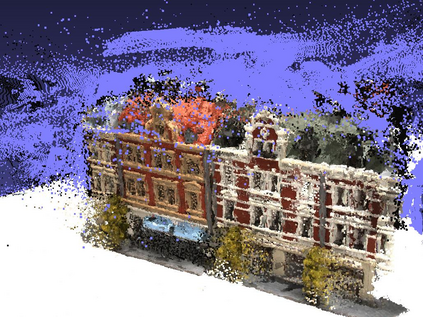

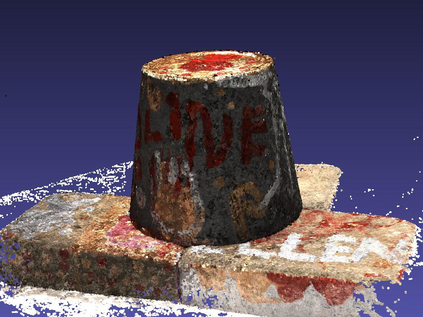

We address the task of view synthesis, which can be posed as recovering a rendering function that renders new views from a set of existing images. In many recent works such as NeRF, this rendering function is parameterized using implicit neural representations of scene geometry. Implicit neural representations have achieved impressive visual quality but have drawbacks in computational efficiency. In this work, we propose a new approach that performs view synthesis using point clouds. It is the first point-based method to achieve better visual quality than NeRF while being more than 100x faster in rendering speed. Our approach builds on existing works on differentiable point-based rendering but introduces a novel technique we call "Sculpted Neural Points (SNP)", which significantly improves the robustness to errors and holes in the reconstructed point cloud. Experiments show that on the task of view synthesis, our sculpting technique closes the gap between point-based and implicit representation-based methods. Code is available at https://github.com/princeton-vl/SNP and supplementary video at https://youtu.be/dBwCQP9uNws.
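To illustrate what rendering a new view from a point cloud involves, and why holes in the reconstructed cloud matter, here is a minimal NumPy sketch. It is not the SNP method or the authors' code: it is a plain, non-differentiable pinhole projection with a nearest-point depth test, and every name in it (`render_points`, `K`, `R`, `t`) is a hypothetical chosen for this example.

```python
import numpy as np

def render_points(points, colors, K, R, t, height, width):
    """Project colored 3D points into a pinhole camera (illustrative only).

    points: (N, 3) world coordinates, colors: (N, 3) RGB values,
    K: (3, 3) intrinsics, R: (3, 3) rotation, t: (3,) translation.
    Returns the image, the depth map, and a mask of pixels no point reached.
    """
    cam = points @ R.T + t                      # world -> camera coordinates
    z = cam[:, 2]
    in_front = z > 1e-6                         # drop points behind the camera
    cam, z, colors = cam[in_front], z[in_front], colors[in_front]

    uvw = cam @ K.T                             # perspective projection
    u = np.round(uvw[:, 0] / z).astype(int)
    v = np.round(uvw[:, 1] / z).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, colors = u[inside], v[inside], z[inside], colors[inside]

    # Depth test: keep the smallest depth that lands on each pixel.
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)

    # Color each pixel with the point that won the depth test.
    image = np.zeros((height, width, 3))
    nearest = z == depth[v, u]
    image[v[nearest], u[nearest]] = colors[nearest]

    holes = np.isinf(depth)                     # pixels with no point at all
    return image, depth, holes

# Two points on the same ray (the nearer one is red) and one behind the camera.
K = np.array([[2.0, 0.0, 2.0], [0.0, 2.0, 2.0], [0.0, 0.0, 1.0]])
points = np.array([[0.0, 0.0, 2.0], [0.0, 0.0, 1.0], [0.0, 0.0, -1.0]])
colors = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
image, depth, holes = render_points(points, colors, K, np.eye(3), np.zeros(3), 4, 4)
```

In this toy scene only one of the 16 pixels receives a point, so the `holes` mask is almost entirely true. Gaps of this kind, along with misplaced points, are the point-cloud errors the abstract says the sculpting technique is meant to make the renderer robust to.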
