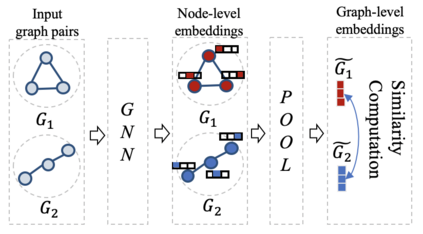

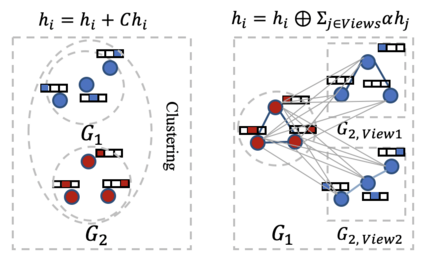

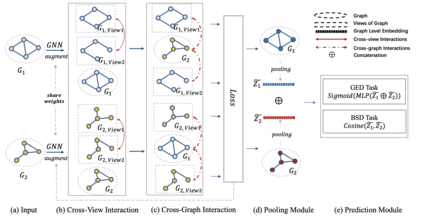

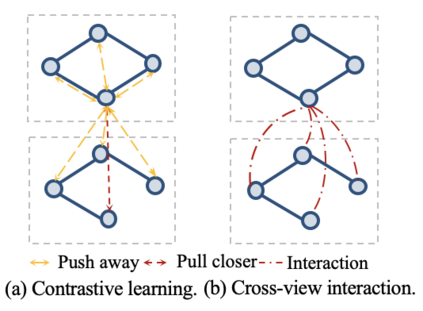

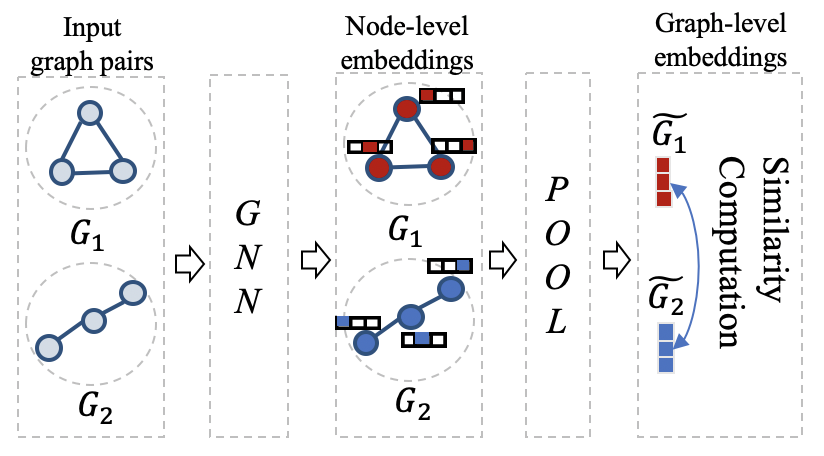

Graph similarity learning refers to calculating the similarity score between two graphs, a task required in many real-world applications such as visual tracking, graph classification, and collaborative filtering. Although most existing graph neural networks yield effective representations of a single graph, little effort has been made to jointly learn the representations of two graphs and calculate their similarity score. In addition, existing unsupervised graph similarity learning methods are mainly clustering-based and ignore the valuable information embodied in graph pairs. To this end, we propose a contrastive graph matching network (CGMN) for self-supervised graph similarity learning, which calculates the similarity between any two input graphs. Specifically, we generate two augmented views for each graph in a pair. We then employ two strategies, cross-view interaction and cross-graph interaction, for effective node representation learning. The former strengthens the consistency of node representations across the two views; the latter identifies node differences between the two graphs. Finally, we transform node representations into graph-level representations via pooling operations for graph similarity computation. We evaluate CGMN on eight real-world datasets, and the experimental results show that the proposed approach outperforms state-of-the-art methods on downstream graph similarity learning tasks.
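To make the stages named in the abstract concrete, the following is a minimal, dependency-free sketch of one possible reading of the pipeline. It is not the CGMN implementation: the abstract does not specify the augmentation, the encoder, or the form of either interaction. Random edge dropping, a mean-neighbour encoder, a softmax-attention difference for cross-graph interaction, mean pooling, and cosine similarity are all assumptions, and every function name is hypothetical.

```python
import math
import random

def drop_edges(edges, p, rng):
    """Assumed augmentation: keep each edge with probability 1 - p."""
    return [e for e in edges if rng.random() >= p]

def propagate(feats, edges):
    """Stand-in encoder: average each node's features with its neighbours'."""
    neigh = [[i] for i in range(len(feats))]  # include a self-loop
    for u, v in edges:
        neigh[u].append(v)
        neigh[v].append(u)
    dim = len(feats[0])
    return [[sum(feats[j][d] for j in nb) / len(nb) for d in range(dim)]
            for nb in neigh]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def view_consistency(h1, h2):
    """Cross-view signal (assumed form): mean cosine between the same
    node's representations in the two augmented views."""
    return sum(cosine(a, b) for a, b in zip(h1, h2)) / len(h1)

def cross_graph_difference(h_a, h_b):
    """Cross-graph signal (assumed form): each node of graph A minus its
    softmax-attended summary of the nodes of graph B."""
    out = []
    for x in h_a:
        scores = [sum(a * b for a, b in zip(x, y)) for y in h_b]
        m = max(scores)                       # subtract max for stability
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        summary = [sum(w[j] * h_b[j][d] for j in range(len(h_b))) / z
                   for d in range(len(x))]
        out.append([a - s for a, s in zip(x, summary)])
    return out

def mean_pool(h):
    """Node-level to graph-level representation by averaging."""
    return [sum(col) / len(h) for col in zip(*h)]

def graph_similarity(g_a, g_b, p=0.2, seed=0):
    """Encode two augmented views per graph, pool, and average the
    cosine similarity of the pooled vectors over the two views."""
    rng = random.Random(seed)
    views_a = [propagate(g_a[0], drop_edges(g_a[1], p, rng)) for _ in range(2)]
    views_b = [propagate(g_b[0], drop_edges(g_b[1], p, rng)) for _ in range(2)]
    sims = [cosine(mean_pool(a), mean_pool(b)) for a, b in zip(views_a, views_b)]
    return sum(sims) / len(sims)

# Toy graph: (node feature rows, undirected edge list)
g = ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [(0, 1), (1, 2)])
score = graph_similarity(g, g, p=0.0)  # identical graphs, no augmentation
```

In a trained model, the two interaction signals would feed a learning objective rather than being computed once. Here they are exposed as plain functions only to show what each stage consumes and produces.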
