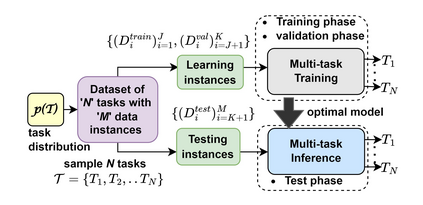

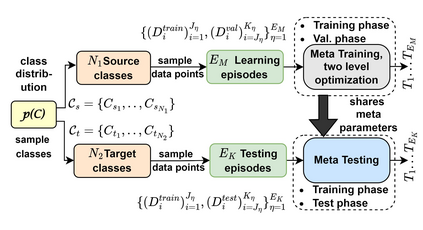

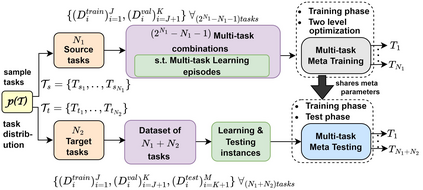

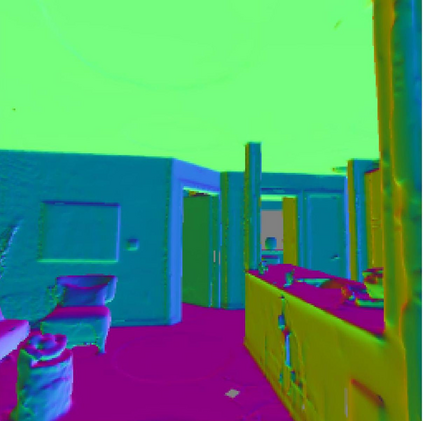

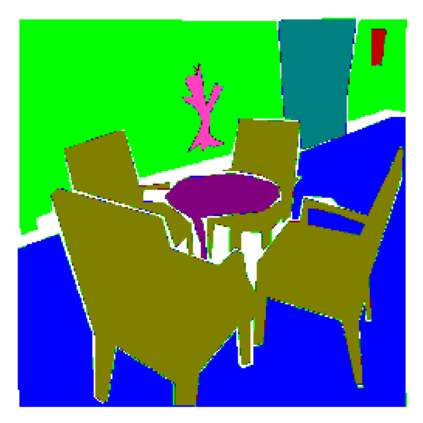

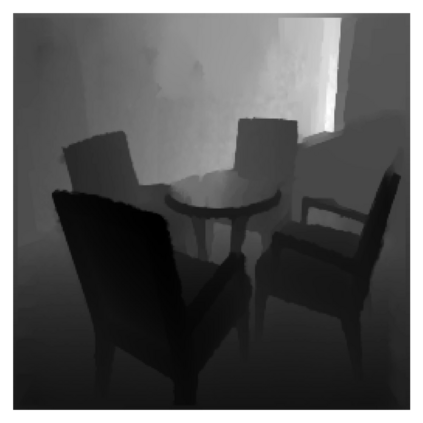

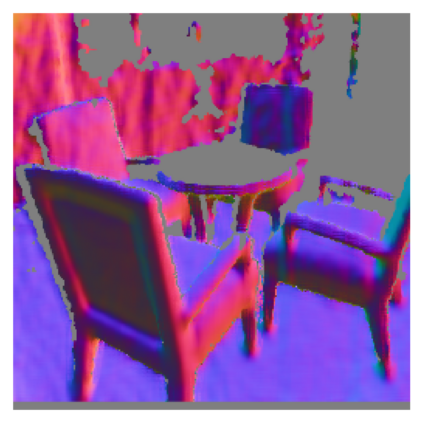

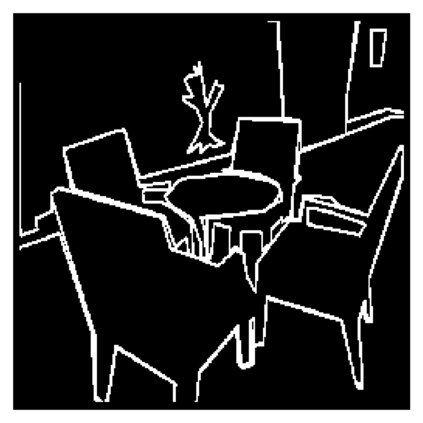

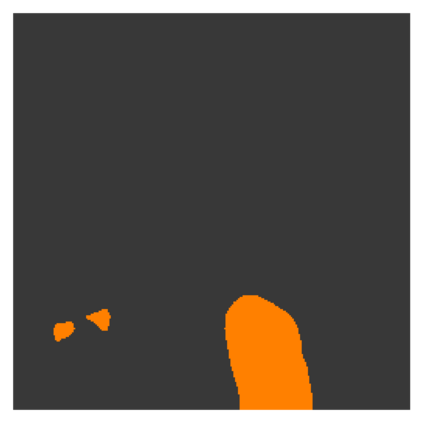

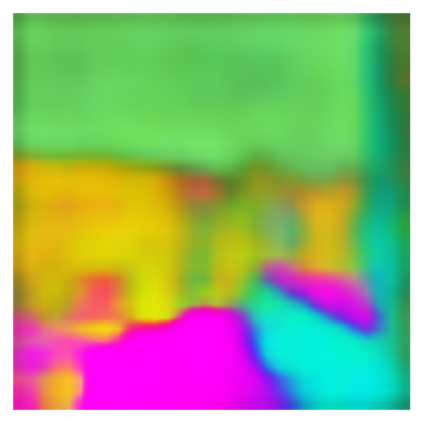

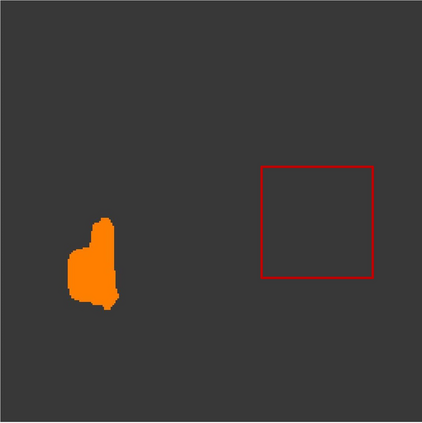

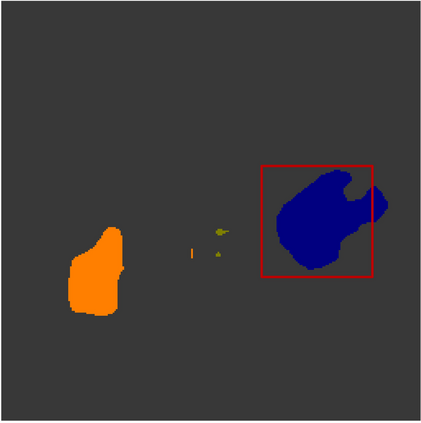

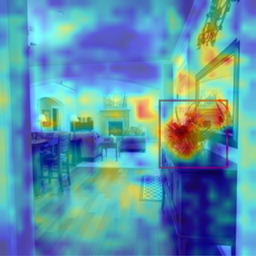

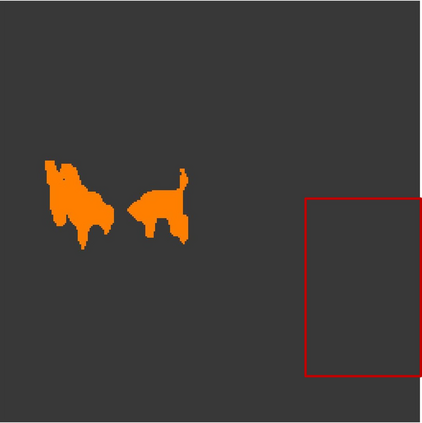

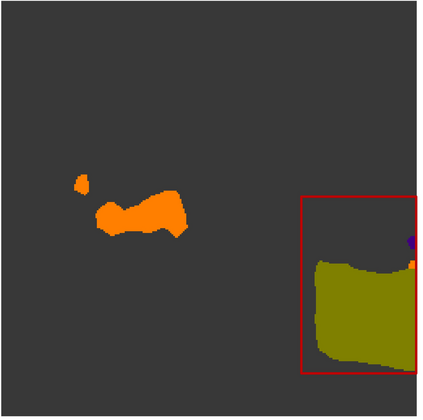

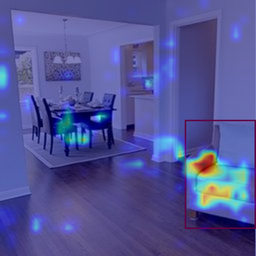

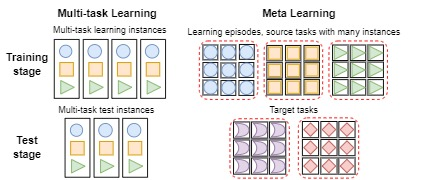

This work proposes Multi-task Meta Learning (MTML), which integrates two learning paradigms, Multi-Task Learning (MTL) and meta learning, to bring together the best of both worlds. In particular, it focuses on the simultaneous learning of multiple tasks, an element of MTL, and on prompt adaptation to new tasks with less data, a quality of meta learning. It is important to highlight that we focus on heterogeneous tasks, which are of distinct kinds, in contrast to the typically considered homogeneous tasks (e.g., all tasks are classification tasks or all tasks are regression tasks). The fundamental idea is to train a multi-task model such that, when an unseen task is introduced, it can learn that task in fewer steps while offering performance at least as good as conventional single-task learning on the new task or as including the new task within the MTL. Through various experiments, we demonstrate this paradigm on two datasets, NYU-v2 and Taskonomy, and four tasks: semantic segmentation, depth estimation, surface normal estimation, and edge detection. MTML achieves state-of-the-art results for most of the tasks. Although semantic segmentation suffers quantitatively, our MTML method learns to identify segmentation classes that are absent from the pseudo-labelled ground truth of the Taskonomy dataset.
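To make the fundamental idea concrete, the following is a minimal toy sketch and not the MTML algorithm itself. It assumes a linear model with one shared weight vector and one scalar head per task, trained with plain gradient descent; there is no bi-level meta-optimisation, and the toy tasks are all regressions rather than heterogeneous dense-prediction tasks. All names (`train`, `grads`, `TRUE_W`, the task scales, the step counts and learning rate) are hypothetical choices made for illustration. The sketch trains the shared weights jointly on three tasks, then compares learning an unseen task in a few steps on top of the shared weights against single-task learning from the original initialisation with the same step budget.

```python
import random


def feature(w, x):
    # Shared linear feature: dot product of the shared weights and the input.
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, head, xs, ys):
    # Mean squared error of the prediction head * (w . x).
    return sum((head * feature(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grads(w, head, xs, ys):
    # Gradients of the MSE with respect to the shared weights and the head.
    gw, gh, n = [0.0] * len(w), 0.0, len(xs)
    for x, y in zip(xs, ys):
        feat = feature(w, x)
        err = head * feat - y
        gh += 2.0 * err * feat / n
        for j, xj in enumerate(x):
            gw[j] += 2.0 * err * head * xj / n
    return gw, gh


def train(w, heads, xs, targets, steps, lr, update_shared=True):
    # Gradient descent on the sum of per-task losses; one head per task.
    w, heads = list(w), list(heads)
    for _ in range(steps):
        total_gw, new_heads = [0.0] * len(w), []
        for head, ys in zip(heads, targets):
            gw, gh = grads(w, head, xs, ys)
            total_gw = [a + b for a, b in zip(total_gw, gw)]
            new_heads.append(head - lr * gh)
        heads = new_heads
        if update_shared:
            w = [wi - lr * gi for wi, gi in zip(w, total_gw)]
    return w, heads


# Synthetic data (assumption): every task is a scaled copy of one hidden signal.
rng = random.Random(0)
TRUE_W = [1.0, -2.0, 0.5]
xs = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(64)]


def make_targets(scale):
    return [scale * feature(TRUE_W, x) for x in xs]


seen_tasks = [make_targets(s) for s in (1.0, -0.5, 2.0)]
unseen_task = make_targets(1.5)

init_w, lr, few_steps = [0.1, -0.1, 0.1], 0.05, 5

# 1) Multi-task training: one shared weight vector, three task heads.
shared_w, _ = train(init_w, [1.0, 1.0, 1.0], xs, seen_tasks, steps=500, lr=lr)

# 2) Unseen task: keep the shared weights fixed and fit only a new head.
_, adapted_heads = train(shared_w, [1.0], xs, [unseen_task],
                         steps=few_steps, lr=lr, update_shared=False)
adapted_loss = mse(shared_w, adapted_heads[0], xs, unseen_task)

# 3) Baseline: single-task learning from the initial weights, same step budget.
scratch_w, scratch_heads = train(init_w, [1.0], xs, [unseen_task],
                                 steps=few_steps, lr=lr)
scratch_loss = mse(scratch_w, scratch_heads[0], xs, unseen_task)

print(f"adapted: {adapted_loss:.4f}  from scratch: {scratch_loss:.4f}")
```

In this toy setting the adapted model should reach a lower loss than the from-scratch baseline within the same few steps, because the shared weights already encode the structure common to all tasks. The paper's setting differs in that the tasks are heterogeneous and the model is a deep network, so this sketch only illustrates the motivation, not the reported results.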
