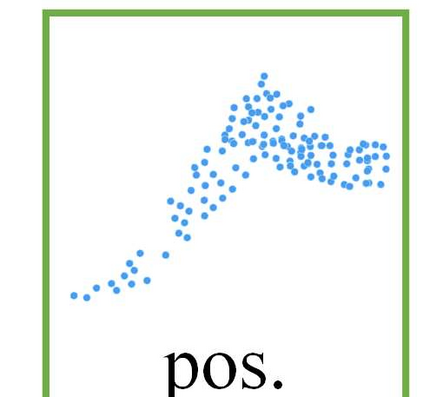

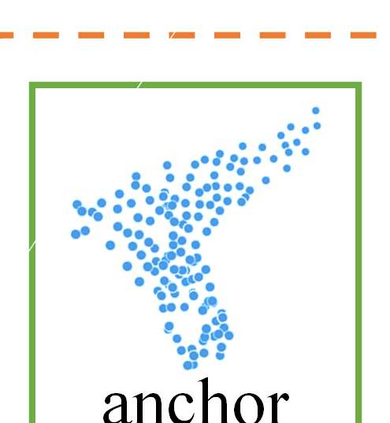

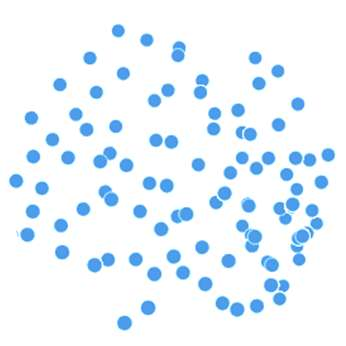

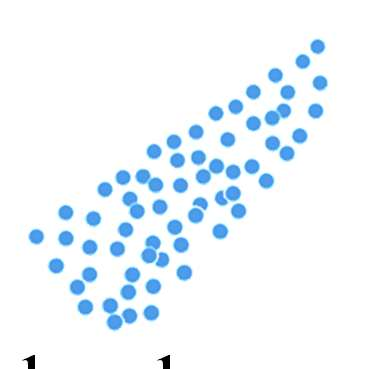

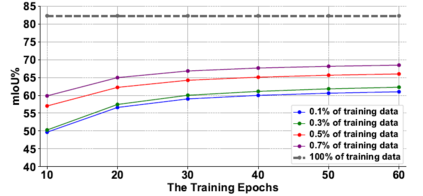

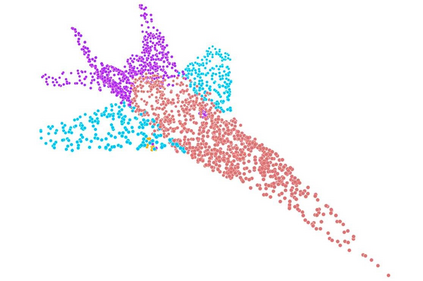

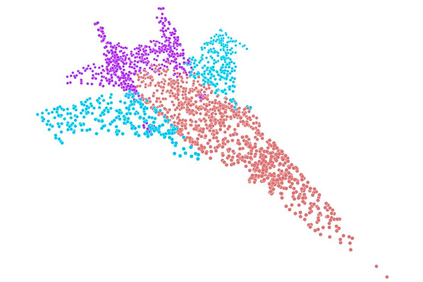

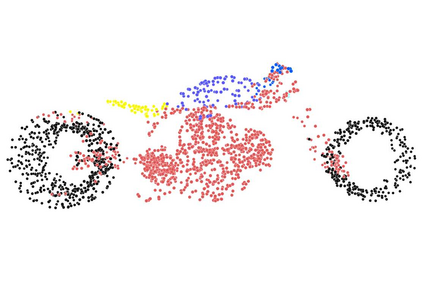

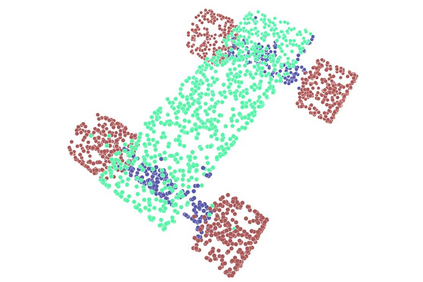

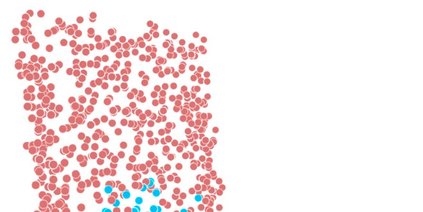

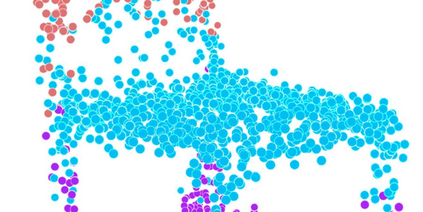

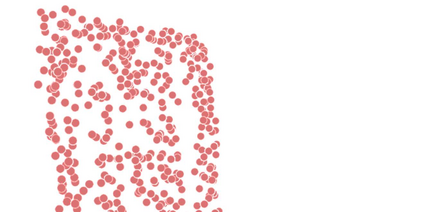

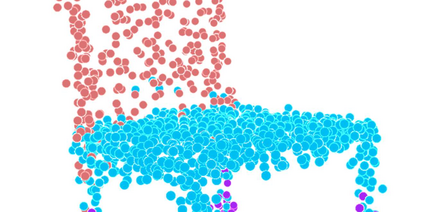

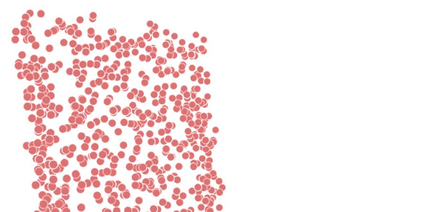

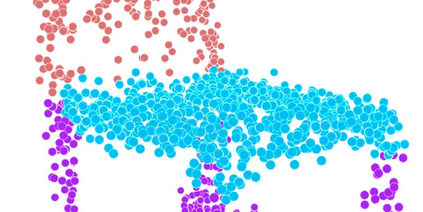

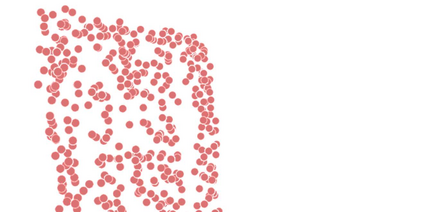

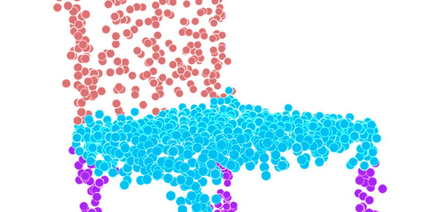

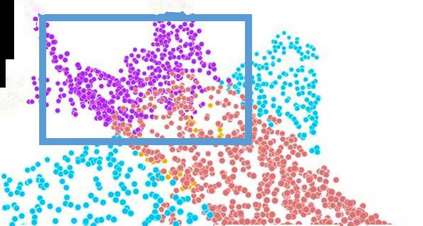

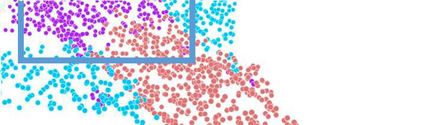

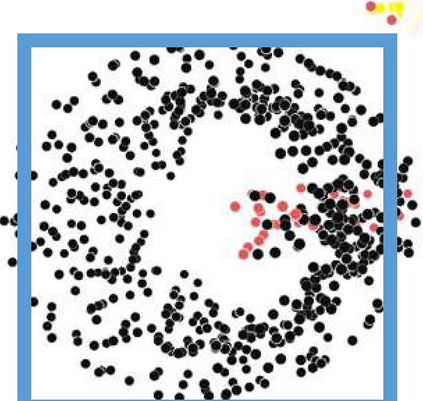

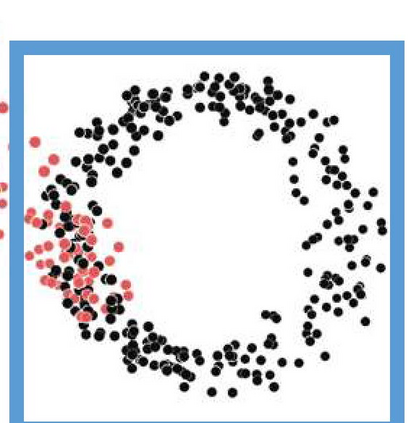

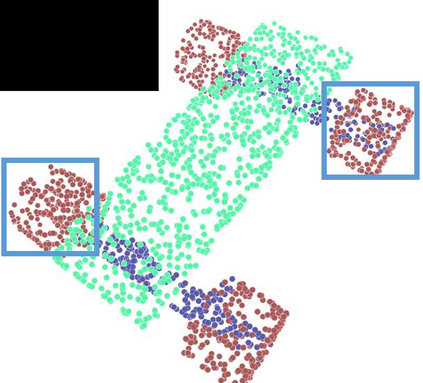

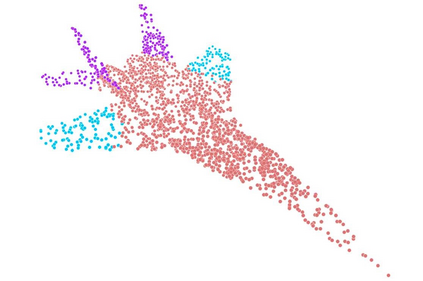

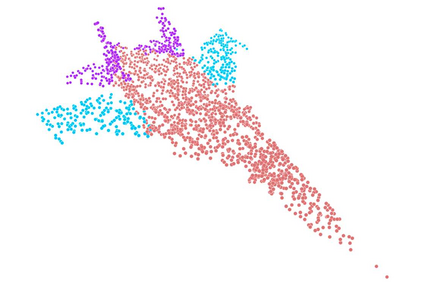

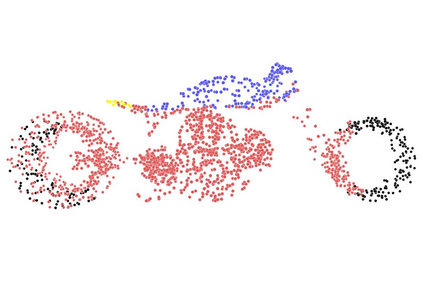

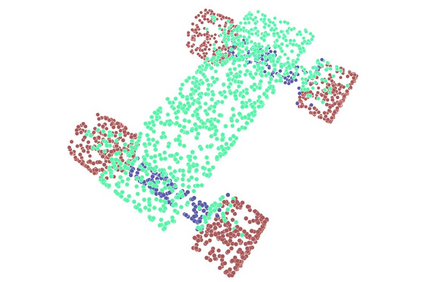

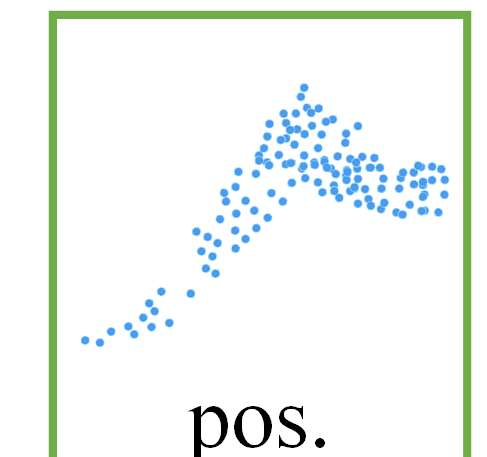

Point clouds have attracted increasing attention as a natural representation of 3D shapes. Significant progress has been made in point cloud analysis, but existing methods often require costly human annotation as supervision in practice. To address this issue, we propose a novel self-contrastive learning framework for self-supervised point cloud representation learning, which aims to capture both local geometric patterns and nonlocal semantic primitives based on the nonlocal self-similarity of point clouds. The contributions are two-fold. First, instead of contrasting among different point clouds, as is common in contrastive learning, we treat self-similar patches within a single point cloud as positive samples and all other patches as negative samples. Such self-contrastive learning is well aligned with the emerging paradigm of self-supervised learning for point cloud analysis. Second, we actively learn hard negative samples, which lie close to positive samples in the representation space, for discriminative feature learning; these negatives are sampled conditionally on each anchor patch according to the degree of self-similarity. Experimental results show that the proposed method achieves state-of-the-art performance on widely used benchmark datasets for self-supervised point cloud segmentation and for transfer learning to classification.
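The idea of contrasting patches within a single point cloud can be illustrated with a minimal sketch. The abstract does not specify the loss or the sampling rule, so everything below is an assumption made for illustration: the function name `self_contrastive_loss`, cosine similarity as the measure of self-similarity, the top-`num_pos` most similar patches as positives, softmax-weighted sampling of hard negatives conditioned on the anchor, and an InfoNCE-style objective with temperature `tau`. In the paper the negative sampler is learned; here it is a fixed heuristic.

```python
import numpy as np


def normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def self_contrastive_loss(feats, anchor, num_pos=2, num_neg=4, tau=0.1, rng=None):
    """InfoNCE-style loss over the patches of ONE point cloud (illustrative only).

    feats  : (P, D) array of per-patch embeddings from a single point cloud.
    anchor : index of the anchor patch.
    Returns the loss and the indices chosen as positives and negatives.
    """
    rng = np.random.default_rng() if rng is None else rng
    z = normalize(np.asarray(feats, dtype=float))

    # Cosine similarity of every patch to the anchor patch.
    sim = z @ z[anchor]

    # Rank all other patches by similarity to the anchor (most similar first).
    others = np.delete(np.arange(len(z)), anchor)
    order = others[np.argsort(-sim[others])]

    # Assumption: the most self-similar patches serve as positives.
    pos = order[:num_pos]
    candidates = order[num_pos:]

    # Assumption: hard negatives are drawn conditionally on the anchor, with
    # probability increasing in similarity, so near-positives are favored.
    weights = np.exp(sim[candidates] / tau)
    probs = weights / weights.sum()
    k = min(num_neg, len(candidates))
    neg = rng.choice(candidates, size=k, replace=False, p=probs)

    # InfoNCE: each positive is contrasted against the sampled negatives.
    pos_logits = sim[pos] / tau
    neg_term = np.exp(sim[neg] / tau).sum()
    loss = -np.mean(pos_logits - np.log(np.exp(pos_logits) + neg_term))
    return loss, pos, neg


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch_feats = rng.normal(size=(10, 16))  # 10 hypothetical patch embeddings
    loss, pos, neg = self_contrastive_loss(patch_feats, anchor=3, rng=rng)
    print("loss:", loss, "positives:", pos, "negatives:", neg)
```

Sampling negatives in proportion to their similarity to the anchor, rather than uniformly, concentrates the loss on patches that are hardest to tell apart from the positives, which is the intuition behind hard negative sampling described above.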
