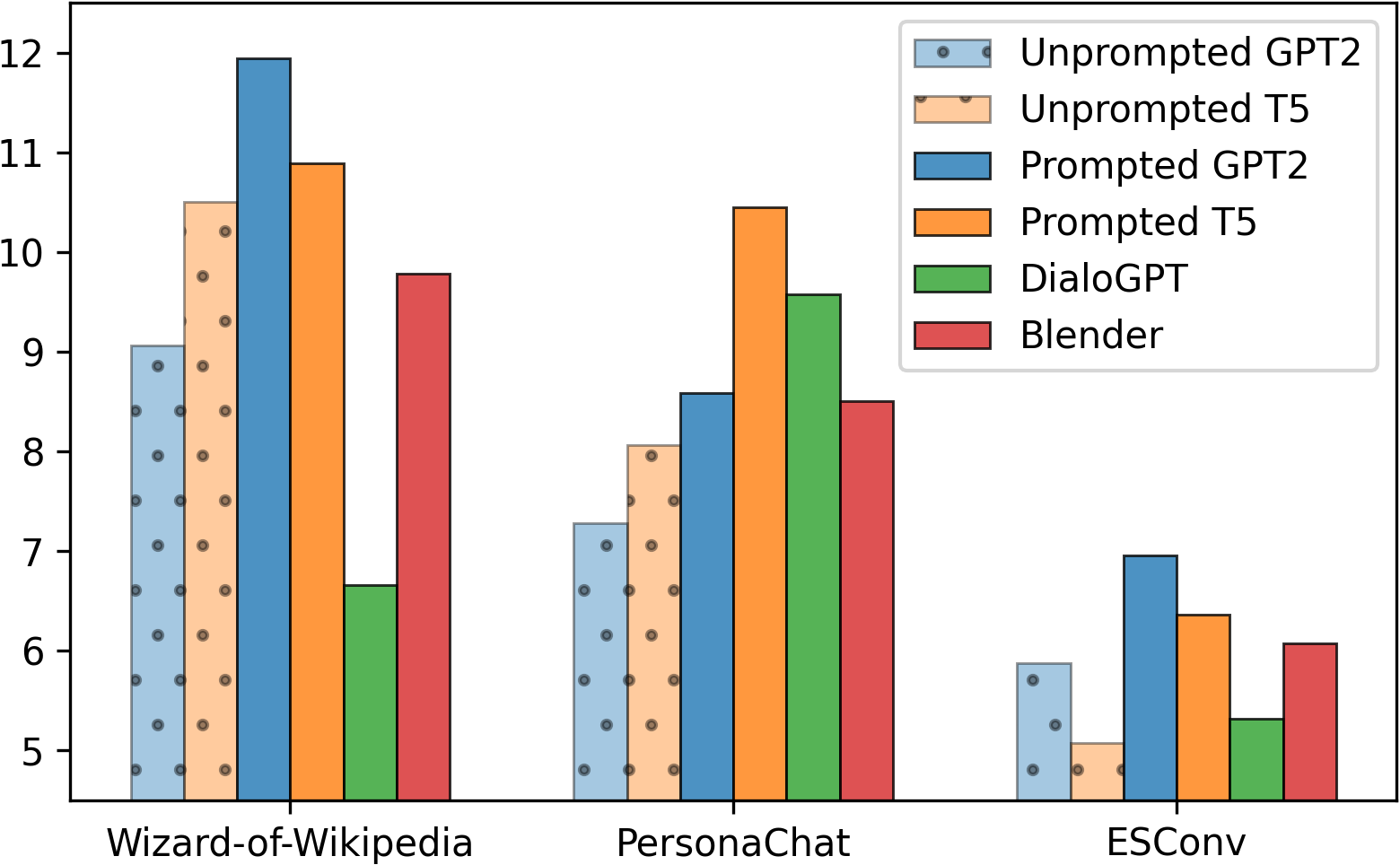

Dialog models can be greatly strengthened by grounding them on various kinds of external information, but grounded dialog corpora are usually not readily available. In this work, we focus on few-shot learning for grounded dialog generation (GDG). We first propose a simple prompting method for GDG tasks, in which different constructs of the model input, such as the grounding source and the conversation context, are distinguished through continuous or discrete prompts. On three typical GDG tasks, we empirically demonstrate the effectiveness of our method and analyze it in depth. We then conduct extensive experiments to investigate how our prompting method works with different pre-trained models. We show that prompted language models outperform conversational models, and we further analyze various factors that influence the effect of prompting. Overall, our work introduces a prompt-based perspective to few-shot learning for GDG tasks and provides valuable findings and insights for future research.
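As a rough illustration of the discrete-prompt variant, the sketch below shows one way the grounding source and the conversation context could be marked with separate textual prompts before being fed to a language model. The marker strings (`Knowledge:`, `Dialog:`, `User:`, `System:`) and the helper `build_discrete_prompt_input` are hypothetical. The abstract does not specify the actual prompt wording. Continuous prompts, which would prepend trainable embeddings instead of text, are not covered here.

```python
from typing import List, Tuple

# Hypothetical discrete prompt strings; the paper's actual prompt wording is not given here.
GROUNDING_PROMPT = "Knowledge:"
CONTEXT_PROMPT = "Dialog:"
SPEAKER_PROMPTS = {"user": "User:", "system": "System:"}


def build_discrete_prompt_input(grounding: str, context: List[Tuple[str, str]]) -> str:
    """Mark each input construct with its own textual (discrete) prompt.

    grounding: the external grounding source (e.g. a knowledge passage or persona).
    context: list of (speaker, utterance) pairs, oldest first; speaker is "user" or "system".
    """
    parts = [f"{GROUNDING_PROMPT} {grounding}", CONTEXT_PROMPT]
    for speaker, utterance in context:
        parts.append(f"{SPEAKER_PROMPTS[speaker]} {utterance}")
    # End with the responding speaker's prompt so the LM continues with the response.
    parts.append(SPEAKER_PROMPTS["system"])
    return "\n".join(parts)


if __name__ == "__main__":
    text = build_discrete_prompt_input(
        "The museum opens at 9 a.m. on weekdays.",
        [("user", "When does the museum open?")],
    )
    print(text)
```

Keeping each construct behind its own marker lets the model tell grounding text apart from dialog turns without any architectural change, which is what makes this style of prompting usable with off-the-shelf pre-trained language models.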