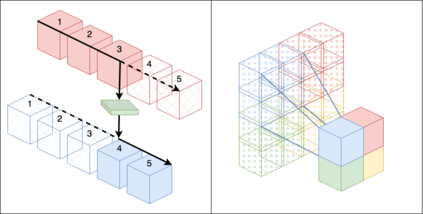

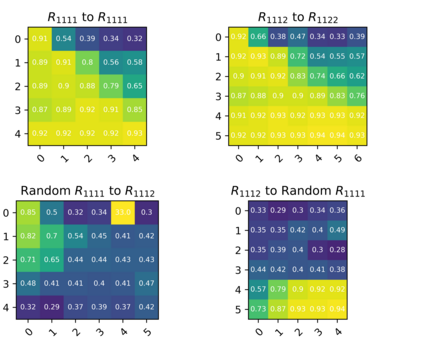

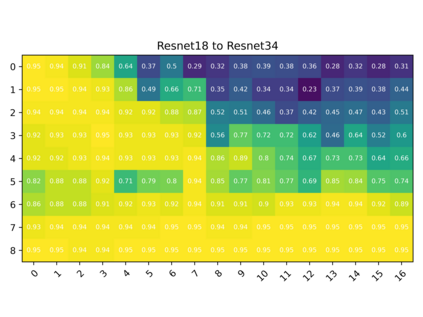

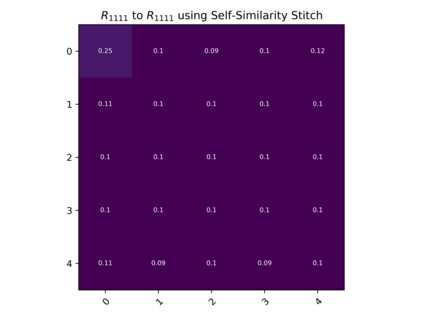

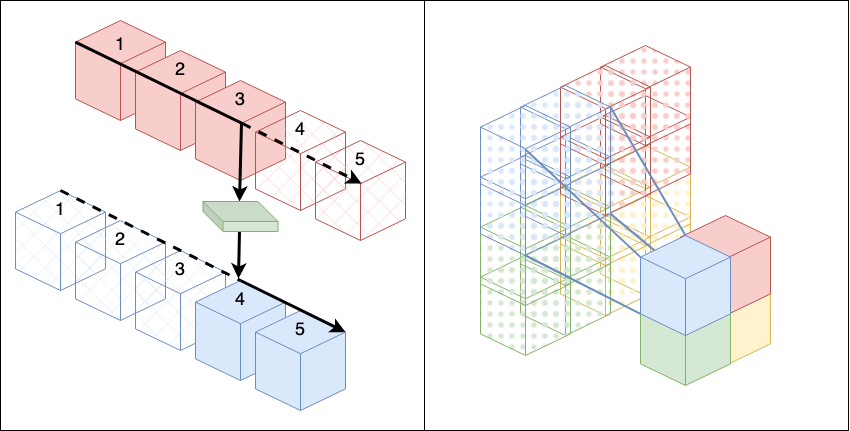

Model stitching (Lenc & Vedaldi 2015) is a compelling methodology for comparing neural network representations, because it measures the degree to which they can be interchanged. We expand on previous work by Bansal, Nakkiran & Barak, which used model stitching to compare representations of the same shape learned by networks of the same architecture that differ in random seed and/or training. Our contribution enables comparison of representations learned by layers of different shapes from networks with different architectures. In doing so, we reveal unexpected behavior of model stitching: for small ResNets, convolution-based stitching can reach high accuracy when the stitched layer comes later in the first (sender) network than in the second (receiver) network, even when the two layers are far apart.

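To make the idea of stitching layers with different shapes concrete, here is a minimal NumPy sketch of a convolution-based stitch. It is an illustration under stated assumptions, not the authors' implementation: the function names (`avg_pool`, `conv1x1`, `stitch`), the use of average pooling to reconcile spatial sizes, and the toy shapes are all hypothetical. In an actual stitching experiment, the stitch parameters would be trained on the task while the sender and receiver networks stay frozen; here they are random, so only the shape handling is shown.

```python
import numpy as np


def avg_pool(x, factor):
    """Downsample (C, H, W) activations by averaging factor x factor blocks."""
    c, h, w = x.shape
    assert h % factor == 0 and w % factor == 0, "spatial size must divide evenly"
    return x.reshape(c, h // factor, factor, w // factor, factor).mean(axis=(2, 4))


def conv1x1(x, weight, bias):
    """1x1 convolution: the same linear map over channels at every pixel.

    x: (C_in, H, W), weight: (C_out, C_in), bias: (C_out,)
    """
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def stitch(sender_act, weight, bias, pool_factor=1):
    """Map sender activations to the shape the receiver layer expects.

    Assumption: spatial mismatch is handled by average pooling, and
    channel mismatch by a 1x1 convolution.
    """
    return conv1x1(avg_pool(sender_act, pool_factor), weight, bias)


# Toy shapes (hypothetical): the sender layer outputs 16 channels at 8x8,
# while the receiver layer expects 32 channels at 4x4.
rng = np.random.default_rng(0)
sender_act = rng.normal(size=(16, 8, 8))
weight = rng.normal(size=(32, 16)) * 0.1  # would be learned in practice
bias = np.zeros(32)

receiver_in = stitch(sender_act, weight, bias, pool_factor=2)
print(receiver_in.shape)  # (32, 4, 4)
```

The stitch is kept deliberately simple (a per-pixel linear map) so that high stitched accuracy can be attributed to the compatibility of the two representations rather than to the capacity of the stitch itself.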