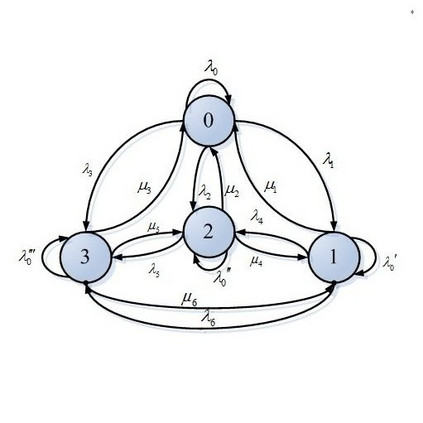

We introduce a new framework that treats decision-making in reinforcement learning (RL) as an iterative reasoning process. We model agent behavior as the steady-state distribution of a parameterized reasoning Markov chain (RMC), optimized with a new tractable estimate of the policy gradient. We perform action selection by simulating the RMC for enough reasoning steps to approach its steady-state distribution. We show that our framework has several useful properties that are inherently missing from traditional RL. For instance, it allows agent behavior to approximate any continuous distribution over actions by parameterizing the RMC with a simple Gaussian transition function. Moreover, the number of reasoning steps needed to reach convergence can scale adaptively with the difficulty of each action-selection decision and can be reduced by re-using past solutions. Our resulting algorithm achieves state-of-the-art performance on popular MuJoCo and DeepMind Control benchmarks, for both proprioceptive and pixel-based tasks.
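
To make the idea of action selection by simulating a chain concrete, the following is a minimal sketch, not the paper's implementation. It assumes a hypothetical one-dimensional action, a fixed noise scale `sigma`, and a hand-written linear mean function `mean_fn` standing in for a learned, state-conditioned network. With this toy choice the chain is an AR(1) process whose steady-state distribution is known in closed form, so the simulated samples can be checked against it.

```python
import numpy as np


def simulate_rmc(mean_fn, sigma, a0, n_steps, rng):
    """Simulate a chain with Gaussian transitions a_{t+1} ~ N(mean_fn(a_t), sigma^2).

    `a0` may be an array, in which case each entry is an independent chain.
    """
    a = np.asarray(a0, dtype=float)
    for _ in range(n_steps):
        a = mean_fn(a) + sigma * rng.standard_normal(a.shape)
    return a


# Hypothetical toy transition (an assumption for illustration only):
# a linear contraction toward `center`, which has a known steady state.
rho, center, sigma = 0.8, 0.5, 0.3


def mean_fn(a):
    return center + rho * (a - center)


rng = np.random.default_rng(0)

# Start many independent chains far from the steady state.
a0 = np.full(20_000, -3.0)
samples = simulate_rmc(mean_fn, sigma, a0, n_steps=60, rng=rng)

# Closed-form steady state of this AR(1) chain: N(center, sigma^2 / (1 - rho^2)).
stationary_var = sigma**2 / (1.0 - rho**2)

print("empirical mean:", samples.mean(), "expected:", center)
print("empirical var: ", samples.var(), "expected:", stationary_var)
```

Starting the chains closer to the steady state (for example, from an action selected at a previous step) would reduce the number of steps needed here, which loosely mirrors the re-use of past solutions mentioned above. A nonlinear `mean_fn` would generally produce a non-Gaussian steady state, although this sketch does not demonstrate that.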