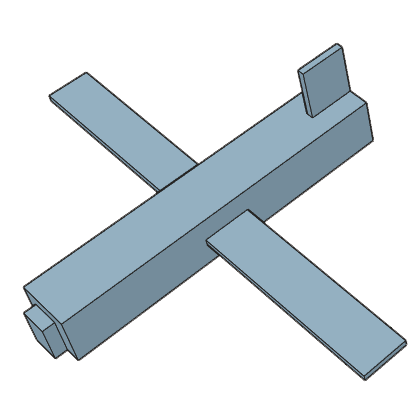

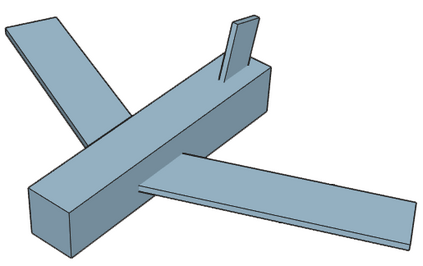

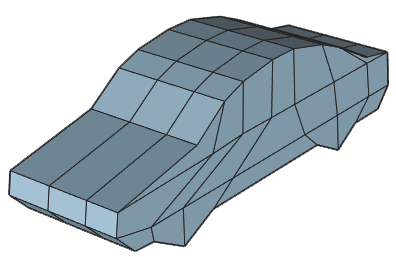

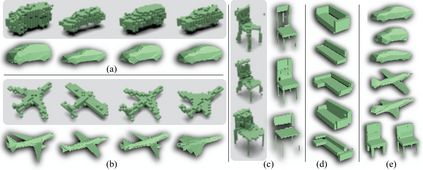

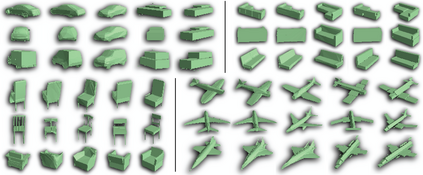

We present a unified framework tackling two problems: class-specific 3D reconstruction from a single image, and generation of new 3D shape samples. These tasks have received considerable attention recently; however, existing approaches rely on 3D supervision, annotation of 2D images with keypoints or poses, and/or training with multiple views of each object instance. Our framework is very general: it can be trained in similar settings to these existing approaches, while also supporting weaker supervision scenarios. Importantly, it can be trained purely from 2D images, without ground-truth pose annotations, and with a single view per instance. We employ meshes as an output representation, instead of voxels used in most prior work. This allows us to exploit shading information during training, which previous 2D-supervised methods cannot. Thus, our method can learn to generate and reconstruct concave object classes. We evaluate our approach on synthetic data in various settings, showing that (i) it learns to disentangle shape from pose; (ii) using shading in the loss improves performance; (iii) our model is comparable or superior to state-of-the-art voxel-based approaches on quantitative metrics, while producing results that are visually more pleasing; (iv) it still performs well when given supervision weaker than in prior works.

翻译:我们提出了一个统一的框架,以解决两个问题:从单一图像中进行班级三维重建,并生成新的三维形状样本。这些任务最近引起了相当大的关注;然而,现有方法依赖于三维监督,用关键点或姿势对二维图像进行注解,和/或以多个视图对每个对象实例进行培训。我们的框架非常笼统:可以在与这些现有方法相似的环境下对其进行培训,同时支持较弱的监督设想。重要的是,它可以纯粹从2D图像中接受培训,没有地面图案的图解,而且每个实例都有一个单一的视角。我们使用meshes作为产出代表,而不是在大多数先前工作中使用的 voxel。这使我们能够在培训期间利用阴影信息,而此前的2D 监督方法无法做到这一点。 因此,我们的方法可以学会生成和重建连结对象课程。我们在不同环境中对合成数据的方法进行评估,表明(一)它学会分解外形形状;(二)在损失表现中使用阴影来改进业绩。 (三)我们的模型可以比较或优于先前2D监督方法,同时进行较弱的排序。