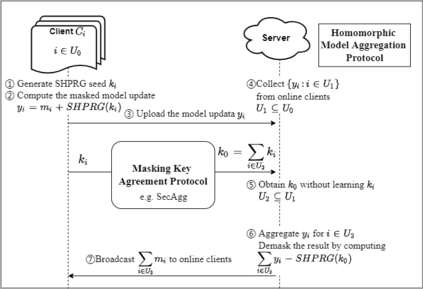

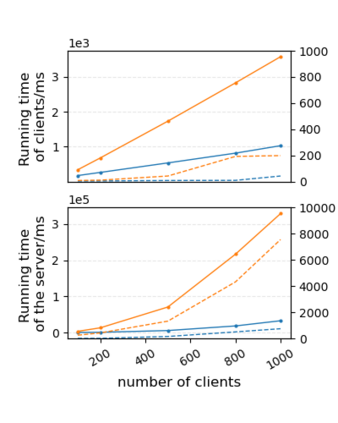

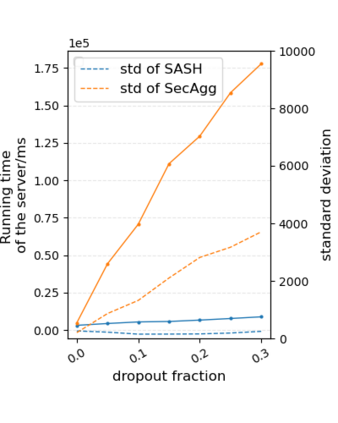

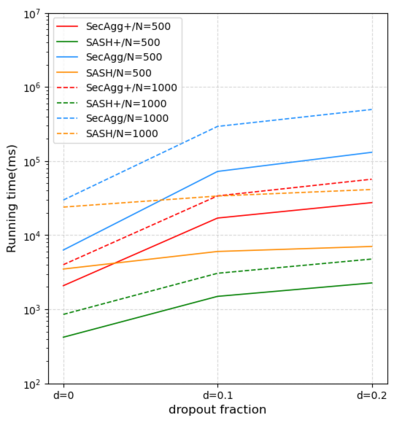

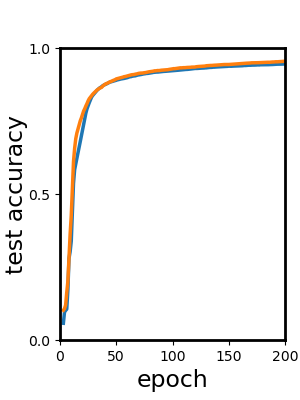

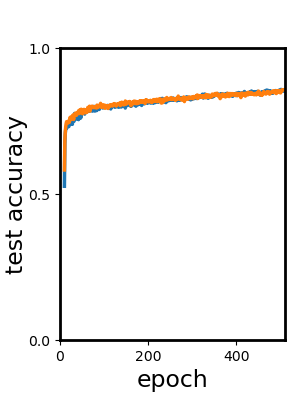

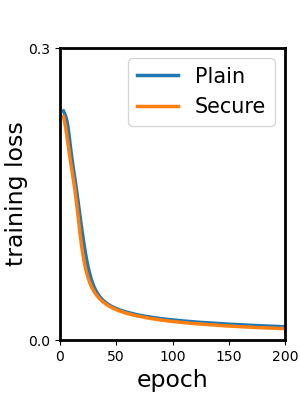

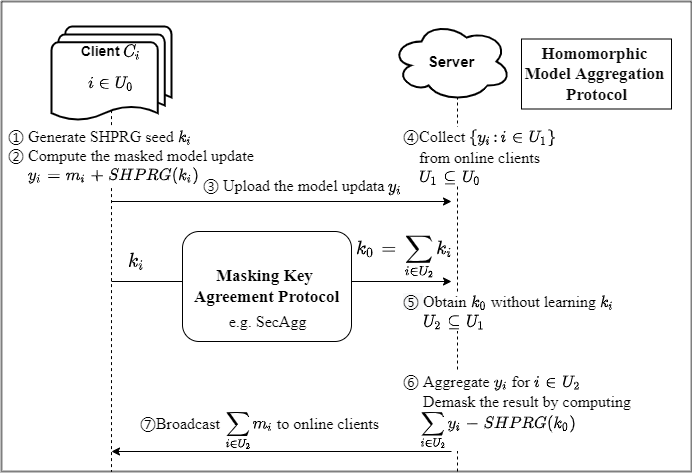

To prevent private training data leakage in Federated Learning systems, we propose SASH, a novel secure aggregation scheme based on a seed-homomorphic pseudorandom generator (SHPRG). SASH leverages the homomorphic property of SHPRG to simplify masking and demasking. For each client and for the server, the resulting overhead is linear in the model size and constant in the number of clients. We prove that, even against worst-case colluding adversaries, SASH preserves training data privacy while remaining resilient to client dropouts at no extra overhead. Experiments show that SASH significantly improves efficiency, by up to 20 times over the baseline. The gains are largest in the more realistic setting where the number of clients and the model size are large and a certain percentage of clients drop out of the system.
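The homomorphic masking idea can be illustrated with a toy sketch. It relies on the property G(s1 + s2) = G(s1) + G(s2). Each client uploads its update plus G(seed). The server sums the masked updates and removes a single mask, G(sum of seeds), so demasking costs one PRG expansion no matter how many clients participate. A dropped client simply contributes neither its masked update nor its seed.

Several parts of this sketch are assumptions and do not come from the abstract:

- `G` is a linear map `A·s mod Q`. It is exactly seed-homomorphic but NOT a secure PRG, and it is used only to show the algebra. Practical SHPRG constructions, such as those based on Learning with Rounding, are typically only almost homomorphic.
- The server is assumed to obtain the sum of the surviving clients' seeds by some other means.
- All names and sizes (`Q`, `SEED_DIM`, `MODEL_DIM`, `mask`, `unmask`) are hypothetical.

```python
import random

Q = 2**32        # modulus for all arithmetic (hypothetical choice)
SEED_DIM = 8     # seed length (toy value)
MODEL_DIM = 16   # model size (toy value)

rng = random.Random(0)

# Public matrix A (MODEL_DIM x SEED_DIM). G(s) = A·s mod Q is linear, hence
# seed-homomorphic. WARNING: a linear map is NOT pseudorandom; toy stand-in only.
A = [[rng.randrange(Q) for _ in range(SEED_DIM)] for _ in range(MODEL_DIM)]


def G(seed):
    """Toy seed-homomorphic expansion: G(s1 + s2) == G(s1) + G(s2) (mod Q)."""
    return [sum(a * s for a, s in zip(row, seed)) % Q for row in A]


def vec_add(u, v):
    return [(a + b) % Q for a, b in zip(u, v)]


def vec_sub(u, v):
    return [(a - b) % Q for a, b in zip(u, v)]


def mask(update, seed):
    """Client side: a single PRG expansion, linear in the model size."""
    return vec_add(update, G(seed))


def unmask(masked_updates, seed_sum):
    """Server side: add the masked vectors, then strip one mask G(sum of seeds)."""
    total = [0] * MODEL_DIM
    for y in masked_updates:
        total = vec_add(total, y)
    return vec_sub(total, G(seed_sum))


# Five clients, each with a model update and a private seed.
updates = [[rng.randrange(1000) for _ in range(MODEL_DIM)] for _ in range(5)]
seeds = [[rng.randrange(Q) for _ in range(SEED_DIM)] for _ in range(5)]
masked = [mask(x, s) for x, s in zip(updates, seeds)]

# Client 3 drops out: its masked update and its seed are both simply absent.
survivors = [0, 1, 2, 4]
# Assumption: the server learns only the SUM of the survivors' seeds.
seed_sum = [sum(seeds[i][k] for i in survivors) % Q for k in range(SEED_DIM)]

agg = unmask([masked[i] for i in survivors], seed_sum)
expected = [sum(updates[i][k] for i in survivors) % Q for k in range(MODEL_DIM)]
print(agg == expected)  # True: the aggregate is recovered despite the dropout
```

In this toy setting, dropout handling needs no extra round. The server never sees an individual seed, only the aggregate, and the masks of the surviving clients cancel as a group.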
