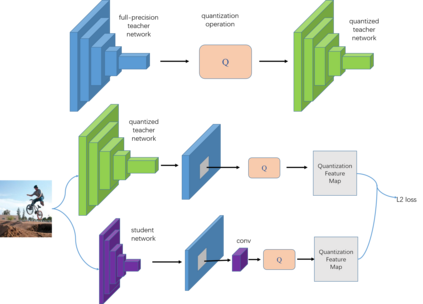

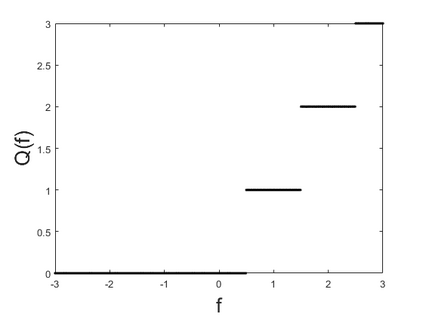

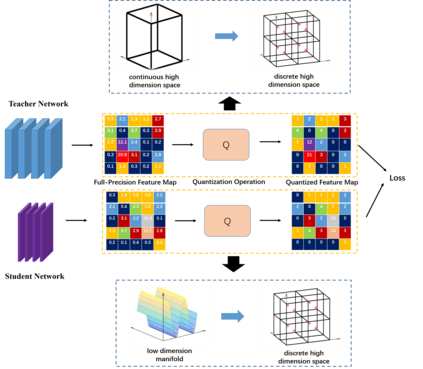

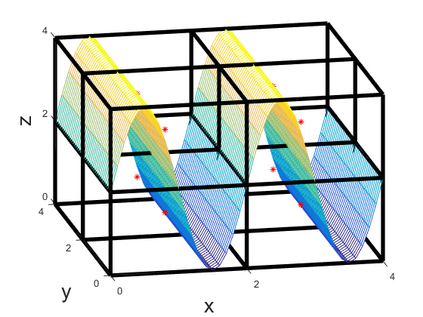

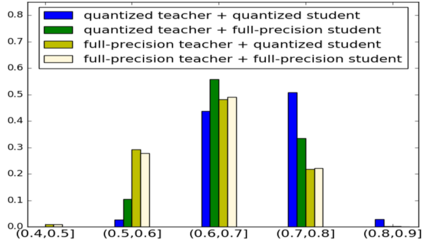

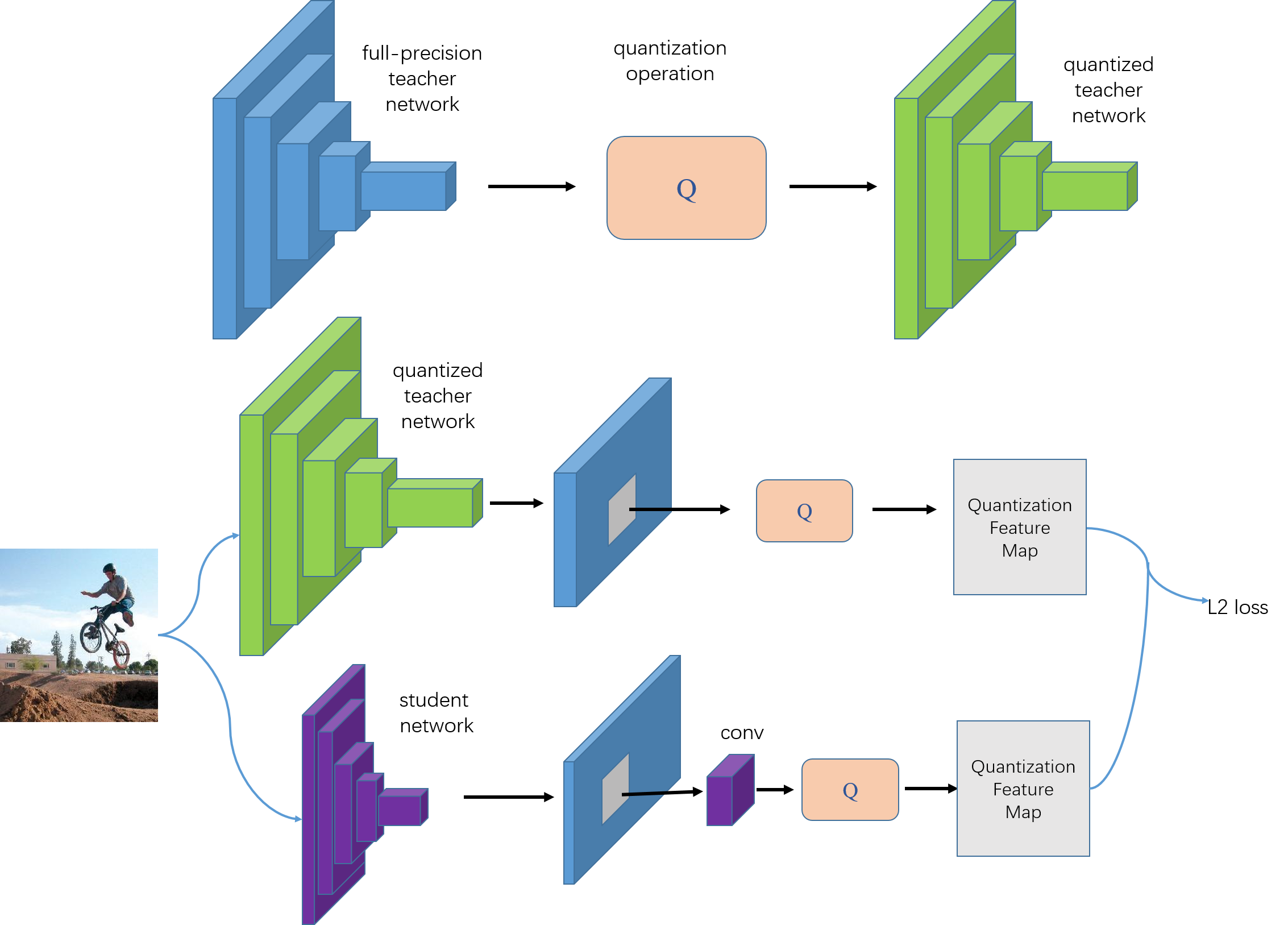

In this paper, we propose a simple and general framework for training very tiny CNNs for object detection. Because of their limited representation ability, very tiny networks are challenging to train for complicated tasks such as detection. To the best of our knowledge, our method, called Quantization Mimic, is the first to focus on very tiny networks. We utilize two types of acceleration methods: mimic and quantization. Mimic improves the performance of a student network by transferring knowledge from a teacher network. Quantization converts a full-precision network to a quantized one without large degradation of performance. If the teacher network is quantized, the search scope of the student network will be smaller. Exploiting this property of quantization, we propose Quantization Mimic. It first quantizes the large network, then trains a quantized small network to mimic it. The quantization operation helps the student network better match the feature maps of the teacher network. To evaluate our approach, we carry out experiments on various popular CNNs, including VGG and ResNet, as well as different detection frameworks, including Faster R-CNN and R-FCN. Experiments on Pascal VOC and WIDER FACE verify that our Quantization Mimic algorithm can be applied in various settings and outperforms state-of-the-art model acceleration methods given limited computing resources.
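As a rough illustration of the idea, the following minimal sketch quantizes teacher and student feature maps onto the same discrete grid and measures how closely they match. The abstract does not specify the quantizer or the loss, so the uniform rounding scheme, the mean-squared-error objective, and the names `uniform_quantize`, `mimic_loss`, `step`, and `num_levels` are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def uniform_quantize(features, step=1.0, num_levels=16):
    """Snap activations to the nearest multiple of `step`.

    Assumed scheme (not taken from the abstract): values are rounded to an
    integer level index and clipped to the range [0, num_levels - 1].
    """
    levels = np.clip(np.round(features / step), 0, num_levels - 1)
    return levels * step


def mimic_loss(teacher_features, student_features, step=1.0, num_levels=16):
    """Mean squared error between quantized teacher and student feature maps.

    Both maps are placed on the same discrete grid before comparison, so the
    student only has to reproduce one of `num_levels` values per position.
    """
    q_teacher = uniform_quantize(teacher_features, step, num_levels)
    q_student = uniform_quantize(student_features, step, num_levels)
    return float(np.mean((q_teacher - q_student) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical feature maps with shape (channels, height, width).
    teacher = rng.uniform(0.0, 8.0, size=(4, 8, 8))
    student = teacher + rng.normal(0.0, 0.1, size=teacher.shape)
    print("quantized mimic loss:", mimic_loss(teacher, student))
```

Rounding has zero gradient almost everywhere, so an actual training loop would need some workaround, such as a straight-through estimator, to backpropagate through the student's quantizer; this sketch only evaluates the loss.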
