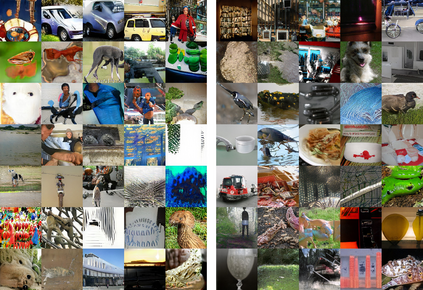

Attention-based models, exemplified by the Transformer, can effectively model long range dependency, but suffer from the quadratic complexity of self-attention operation, making them difficult to be adopted for high-resolution image generation based on Generative Adversarial Networks (GANs). In this paper, we introduce two key ingredients to Transformer to address this challenge. First, in low-resolution stages of the generative process, standard global self-attention is replaced with the proposed multi-axis blocked self-attention which allows efficient mixing of local and global attention. Second, in high-resolution stages, we drop self-attention while only keeping multi-layer perceptrons reminiscent of the implicit neural function. To further improve the performance, we introduce an additional self-modulation component based on cross-attention. The resulting model, denoted as HiT, has a linear computational complexity with respect to the image size and thus directly scales to synthesizing high definition images. We show in the experiments that the proposed HiT achieves state-of-the-art FID scores of 31.87 and 2.95 on unconditional ImageNet $128 \times 128$ and FFHQ $256 \times 256$, respectively, with a reasonable throughput. We believe the proposed HiT is an important milestone for generators in GANs which are completely free of convolutions.

翻译:以变异器为范例的基于关注的模式可以有效地模拟远距离依赖性,但会受到自我注意操作的四重复杂程度的影响,因此难以在创造反反向网络(GANs)的基础上为高分辨率图像生成工作采用这些模式。在本文中,我们为变异器引入了两个关键要素来应对这一挑战。首先,在基因变异过程的低分辨率阶段,标准的全球自我注意被替换为拟议的多轴屏蔽式自我注意,从而能够有效地混合当地和全球的注意力。第二,在高分辨率阶段,我们放弃自我注意,而仅仅保持多层感应,同时只保持隐含神经功能的多层感应。为了进一步改善性,我们引入了基于交叉注意的额外自我调整部分。由此产生的模型(称为HIT)在图像大小上具有线性计算复杂性,从而直接将高定义图像合成。我们在实验中显示,拟议的HIT在31.87和2.95个高端的FID分数中实现了状态,而我们完全相信一个无条件的GAN-256年的G-256年的G-256年的S-256年的S-256年的S-256年的Ax。