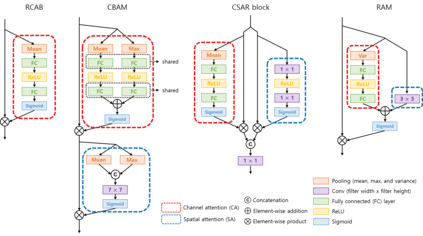

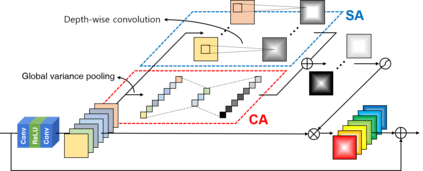

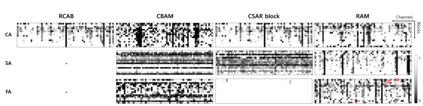

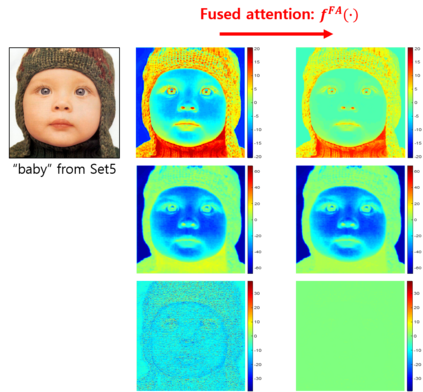

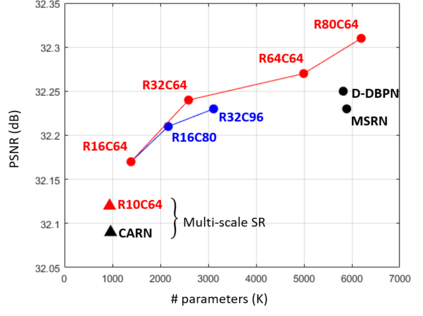

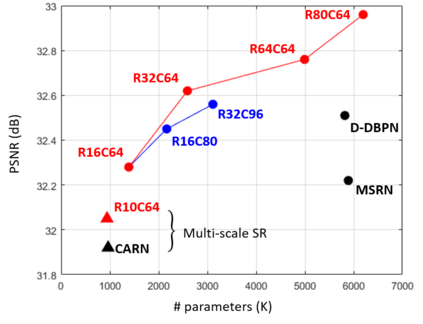

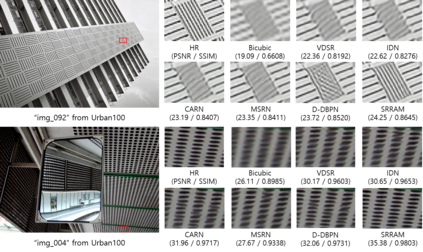

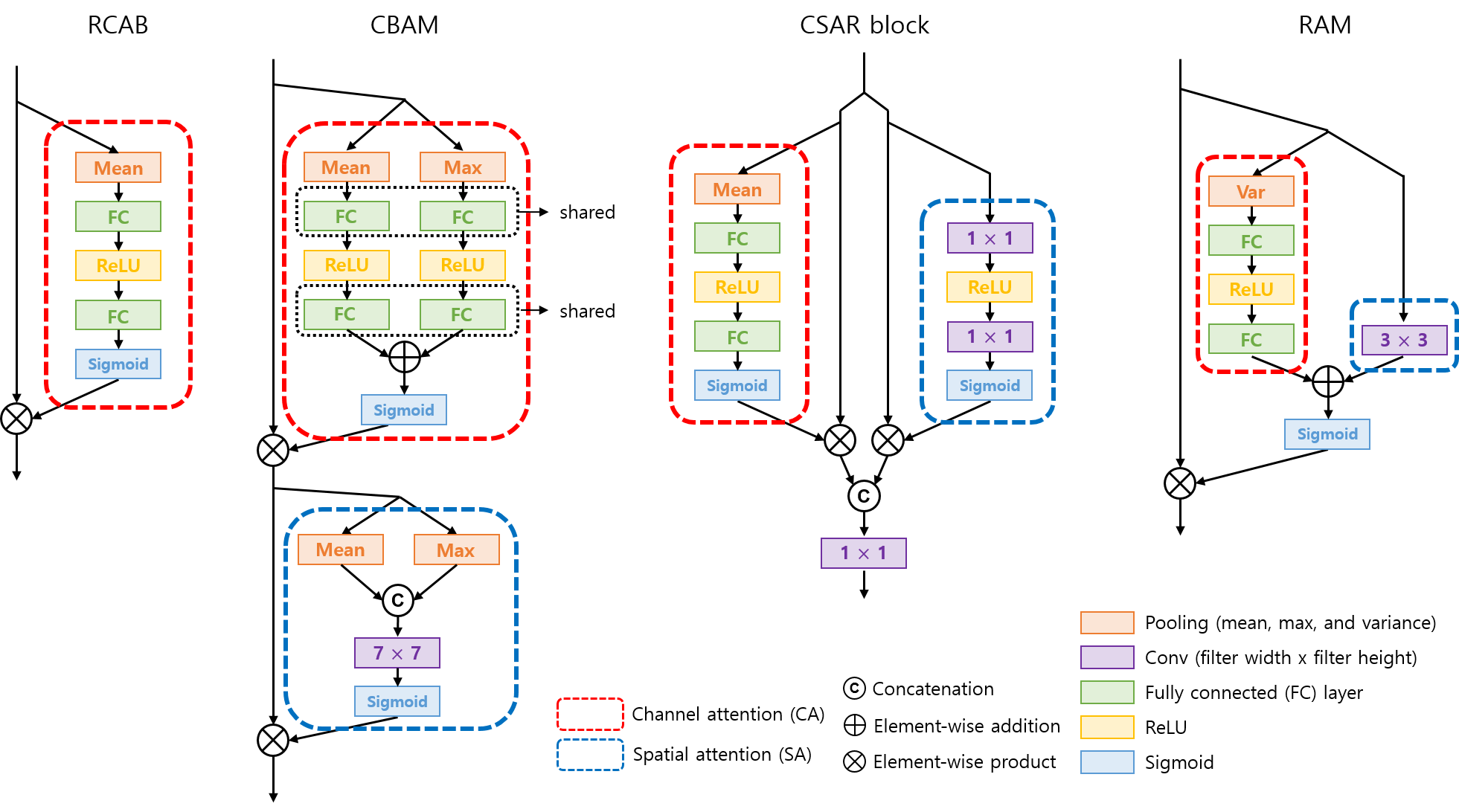

Attention mechanisms are a design trend in deep neural networks and have proven effective in a variety of computer vision tasks. Recently, some works have attempted to apply attention mechanisms to single image super-resolution (SR). However, they apply the mechanisms to SR in the same or similar ways as for high-level computer vision problems, with little consideration of how SR differs in nature from those problems. In this paper, we propose a new attention method composed of new channel-wise and spatial attention mechanisms optimized for SR and a new fused attention that combines them. Based on this, we propose a new residual attention module (RAM) and an SR network using RAM (SRRAM). We provide an in-depth experimental analysis of different attention mechanisms in SR. The results show that the proposed method can be used to construct both deep and lightweight SR networks that achieve improved performance compared with existing state-of-the-art methods.
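To make the idea of combining channel-wise and spatial attention inside a residual block concrete, the following is a minimal NumPy sketch. It is not the RAM design itself: the abstract does not specify the pooling statistic, the spatial operator, or the fusion rule, so the choices here (global average pooling, a per-channel 3x3 filter, fusion by addition followed by a single sigmoid) are assumptions made for illustration, and all function and parameter names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Return per-channel logits of shape (C, 1, 1) for a feature map x of shape (C, H, W)."""
    # Assumption: global average pooling as the per-channel descriptor.
    s = x.mean(axis=(1, 2))                # (C,)
    h = np.maximum(w1 @ s, 0.0)            # bottleneck + ReLU, shape (C // r,)
    return (w2 @ h)[:, None, None]         # (C, 1, 1)


def spatial_attention(x, k):
    """Return per-position logits of shape (C, H, W) using one 3x3 filter per channel."""
    # Assumption: a per-channel (depthwise) 3x3 filter with zero padding.
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c, h, w), dtype=float)
    for i in range(3):
        for j in range(3):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def fused_attention_residual(x, w1, w2, k):
    """Gate x with fused channel + spatial attention and add a skip connection."""
    # Assumption: fuse by broadcast addition, then apply one sigmoid.
    gate = sigmoid(channel_attention(x, w1, w2) + spatial_attention(x, k))
    return x + x * gate


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 5, 5))          # 4 channels, 5x5 feature map
    w1 = rng.standard_normal((2, 4)) * 0.1      # reduction ratio r = 2
    w2 = rng.standard_normal((4, 2)) * 0.1
    k = rng.standard_normal((4, 3, 3)) * 0.1
    print(fused_attention_residual(x, w1, w2, k).shape)
```

Adding the two attention maps before the sigmoid lets the (C, 1, 1) channel term and the (C, H, W) spatial term broadcast into a single gate, so each feature value is scaled by one combined weight rather than by two separately squashed ones.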
