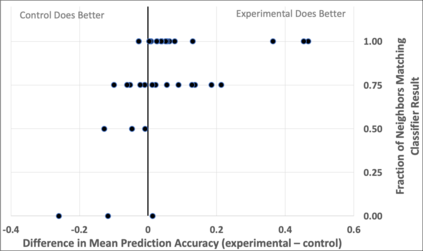

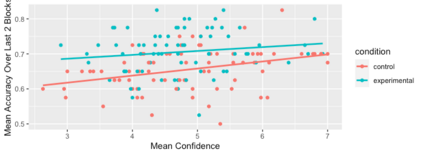

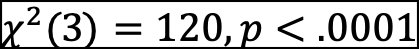

Humans should be able to work more effectively with artificial intelligence-based systems when they can predict likely failures and form useful mental models of how the systems work. We conducted a study of humans' mental models of artificial intelligence systems using a high-performing image classifier, focusing on participants' ability to predict the classification result for a particular image. Participants viewed individual labeled images, each belonging to one of two classes, and then tried to predict whether the classifier would label each image correctly. In this experiment we explored the effect of giving participants additional information about an image's nearest neighbors in a space representing the otherwise uninterpretable features extracted by the lower layers of the classifier's neural network. We found that providing this information did increase participants' prediction performance, and that the performance improvement could be related to the neighbor images' similarity to the target image. We also found indications that the presentation of this information may influence people's own classification of the target image: rather than simply anthropomorphizing the system, in some cases people become "mechanomorphized" in their judgements.
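The nearest-neighbor lookup described above can be illustrated with a small sketch. This is not the authors' implementation: it assumes each image has already been mapped to a feature vector taken from some layer of the classifier, and the choice of cosine similarity, the value of `k`, and the function names (`cosine_similarity`, `nearest_neighbors`, `mean_similarity`) are hypothetical choices made only for illustration. The abstract does not specify the distance measure or the number of neighbors shown.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical gallery entry: (image_id, label, feature_vector).
GalleryItem = Tuple[str, str, Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors.

    Assumption: cosine similarity is used here for illustration; the
    study's actual similarity measure is not given in the abstract.
    """
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def nearest_neighbors(
    target: Sequence[float], gallery: List[GalleryItem], k: int = 3
) -> List[Tuple[str, str, float]]:
    """Return the k gallery images most similar to the target's features.

    Results are (image_id, label, similarity), most similar first.
    The default k=3 is a hypothetical value.
    """
    scored = [
        (image_id, label, cosine_similarity(target, features))
        for image_id, label, features in gallery
    ]
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:k]


def mean_similarity(neighbors: List[Tuple[str, str, float]]) -> float:
    """Average similarity of the retrieved neighbors to the target.

    A simple, hypothetical summary of how close the neighbors are.
    """
    if not neighbors:
        return 0.0
    return sum(sim for _, _, sim in neighbors) / len(neighbors)


if __name__ == "__main__":
    # Toy 2-D "features"; real features would be high-dimensional.
    gallery = [
        ("a", "cat", [1.0, 0.0]),
        ("b", "dog", [0.0, 1.0]),
        ("c", "cat", [0.9, 0.1]),
    ]
    neighbors = nearest_neighbors([1.0, 0.0], gallery, k=2)
    print([image_id for image_id, _, _ in neighbors])  # prints ['a', 'c']
    print(mean_similarity(neighbors))
```

A participant-facing display would then show the retrieved neighbor images and their labels next to the target image; a summary such as the mean neighbor similarity is one way to quantify how close those neighbors are.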

翻译:人类应该能够更有效地与人工智能系统合作,当它们能够预测可能的失败,并形成关于这些系统如何运作的有用的心理模型时。我们用高性能图像分类器对人工智能系统的人类心理模型进行了一项研究,重点是参与者预测特定图像分类结果的能力。参与者在两个类别中的一个中查看了贴有个人标签的图像,然后试图预测分类器是否正确贴上标签。在这个实验中,我们探讨了向参与者提供更多关于图像近邻的信息的效果,该图像的近邻空间代表了分类器神经网络下层所提取的无法解释的特征。我们发现,提供这种信息确实提高了参与者的预测性能,而且性能的改进可能与邻居图像与目标图像相似性有关。我们还发现了一些迹象,这种信息的展示可能会影响人们自己对目标图像的分类 -- 也就是说,而不仅仅是将系统进行人类形态化,在某些情况下,人类在判断中变成了“基因化”。