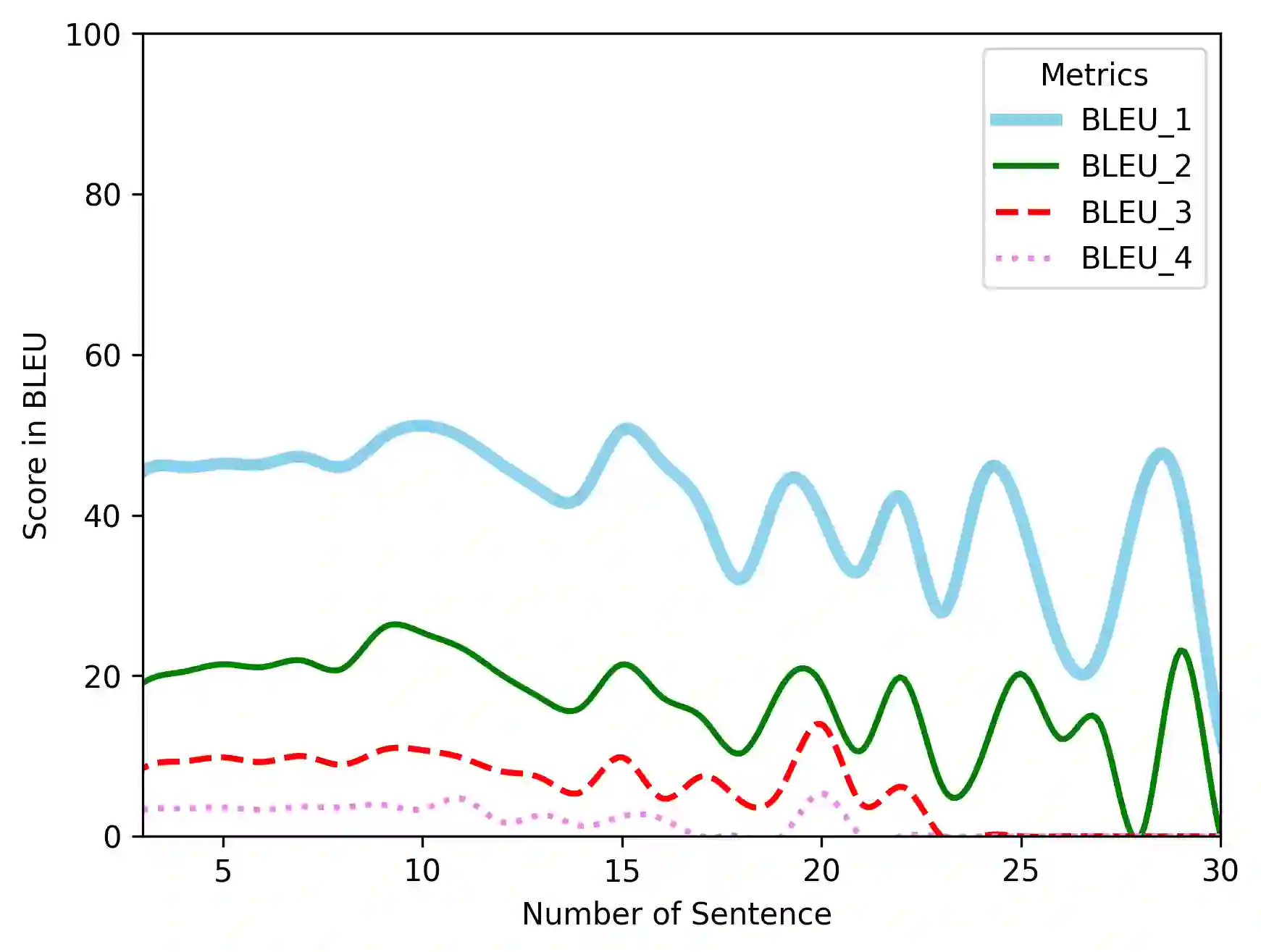

Question generation (QG) is a natural language generation task in which a model is trained to ask questions about a given input text. Most recent approaches frame QG as a sequence-to-sequence problem and rely on additional features and mechanisms to increase performance; however, these often increase model complexity and can depend on auxiliary data that is unavailable in practical use. A single Transformer-based unidirectional language model leveraging transfer learning can be used to produce high-quality questions while dispensing with additional task-specific complexity. Our QG model, finetuned from GPT-2 Small, outperforms several paragraph-level QG baselines on the SQuAD dataset by 0.95 METEOR points. Human evaluators rated the generated questions as easy to answer, relevant to their context paragraph, and corresponding well to natural human speech. We also introduce a new set of baseline scores on the RACE dataset, which has not previously been used for QG tasks. Further experimentation with varying model capacities and with datasets containing non-identification type questions is recommended to further verify the robustness of pretrained Transformer-based LMs as question generators.
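To make the single-language-model framing concrete, the sketch below shows one way a paragraph and its question could be packed into a single left-to-right training string, so that a unidirectional LM learns to continue a paragraph with a question. This is an illustration under stated assumptions, not the formatting used in the work itself: the separator `<|question|>` and the helper names `build_training_example`, `build_prompt`, and `extract_question` are hypothetical. Only `<|endoftext|>` is taken from GPT-2, where it is the end-of-text token.

```python
# Hypothetical delimiter between paragraph and question (an assumption;
# the abstract does not specify the exact input format).
QUESTION_SEP = "<|question|>"
# GPT-2's end-of-text token, used here to mark the end of the question.
EOS = "<|endoftext|>"


def build_training_example(context: str, question: str) -> str:
    """Concatenate a paragraph and its target question into one LM string."""
    return f"{context.strip()} {QUESTION_SEP} {question.strip()} {EOS}"


def build_prompt(context: str) -> str:
    """Prefix fed to the finetuned model, which then continues with a question."""
    return f"{context.strip()} {QUESTION_SEP}"


def extract_question(generated: str) -> str:
    """Recover the question from a full generated string.

    Returns an empty string if the separator is missing.
    """
    _, _, tail = generated.partition(QUESTION_SEP)
    question, _, _ = tail.partition(EOS)
    return question.strip()


if __name__ == "__main__":
    paragraph = "Paris is the capital of France."
    example = build_training_example(paragraph, "What is the capital of France?")
    print(example)
    print(extract_question(example))
```

Because the prompt is a strict prefix of the training string, the same next-token objective covers both finetuning and generation, with no separate encoder or answer-aware features.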
