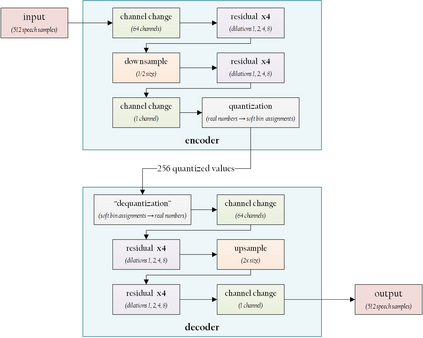

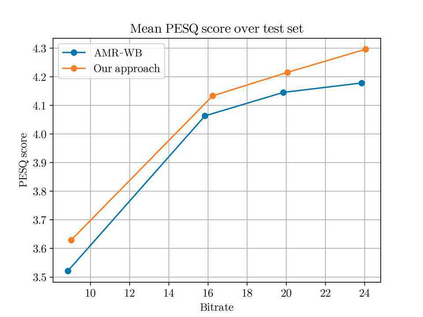

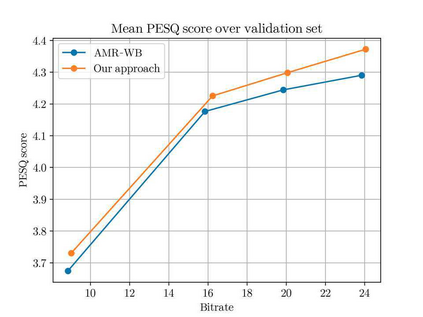

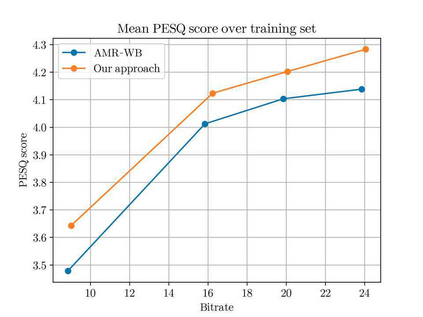

Modern compression algorithms are often the result of laborious domain-specific research; industry standards such as MP3, JPEG, and AMR-WB took years to develop and were largely hand-designed. We present a deep neural network model that optimizes all the steps of a wideband speech coding pipeline (compression, quantization, entropy coding, and decompression) end-to-end, directly from raw speech data; no manual feature engineering is necessary, and the model trains in hours. In testing, our DNN-based coder performs on par with the AMR-WB standard at a variety of bitrates (~9 kbps up to ~24 kbps). It also runs in real time on a 3.8 GHz Intel CPU.

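To make the quantization, entropy coding, and bitrate stages named in the abstract concrete, here is a minimal sketch in plain Python. It is not the model described above: the learned encoder and decoder are left out, a uniform scalar quantizer stands in for the learned quantization step, and the empirical entropy of the codes stands in for an ideal entropy coder. The 16 kHz sampling rate is the usual wideband speech rate and is an assumption here, as are all function names and the toy sine-wave input.

```python
import math
from collections import Counter

# Assumption: 16 kHz is the usual wideband speech sampling rate;
# the abstract itself does not state a sampling rate.
SAMPLE_RATE_HZ = 16_000


def quantize(values, levels):
    """Map values in [-1, 1] to integer bin indices 0..levels-1 (uniform bins)."""
    indices = []
    for v in values:
        v = max(-1.0, min(1.0, v))            # clip to the quantizer range
        idx = int((v + 1.0) / 2.0 * levels)   # scale to [0, levels]
        indices.append(min(idx, levels - 1))  # v == 1.0 falls in the top bin
    return indices


def dequantize(indices, levels):
    """Map bin indices back to the center value of each bin."""
    return [(i + 0.5) / levels * 2.0 - 1.0 for i in indices]


def empirical_entropy_bits(symbols):
    """Average bits per symbol an ideal entropy coder would need
    if symbols were drawn independently from their empirical distribution."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def bitrate_kbps(symbols_per_second, bits_per_symbol):
    """Convert a symbol rate and a per-symbol cost into kilobits per second."""
    return symbols_per_second * bits_per_symbol / 1000.0


def bits_per_sample(kbps, sample_rate_hz=SAMPLE_RATE_HZ):
    """Bit budget per input sample implied by a target bitrate."""
    return kbps * 1000.0 / sample_rate_hz


if __name__ == "__main__":
    # Toy input: one second of a 440 Hz tone (hypothetical stand-in for speech).
    signal = [0.8 * math.sin(2 * math.pi * 440 * t / SAMPLE_RATE_HZ)
              for t in range(SAMPLE_RATE_HZ)]
    codes = quantize(signal, levels=32)
    bits = empirical_entropy_bits(codes)
    print("estimated bitrate (kbps):", bitrate_kbps(SAMPLE_RATE_HZ, bits))
    print("budget at 9 kbps (bits/sample):", bits_per_sample(9))
    print("budget at 24 kbps (bits/sample):", bits_per_sample(24))
```

Under the 16 kHz assumption, the bitrates quoted in the abstract correspond to roughly 0.56 to 1.5 bits per input sample. Quantizing raw samples directly, as this sketch does, costs far more than that, which is why a coder in this range has to quantize a much more compact representation than the raw waveform.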