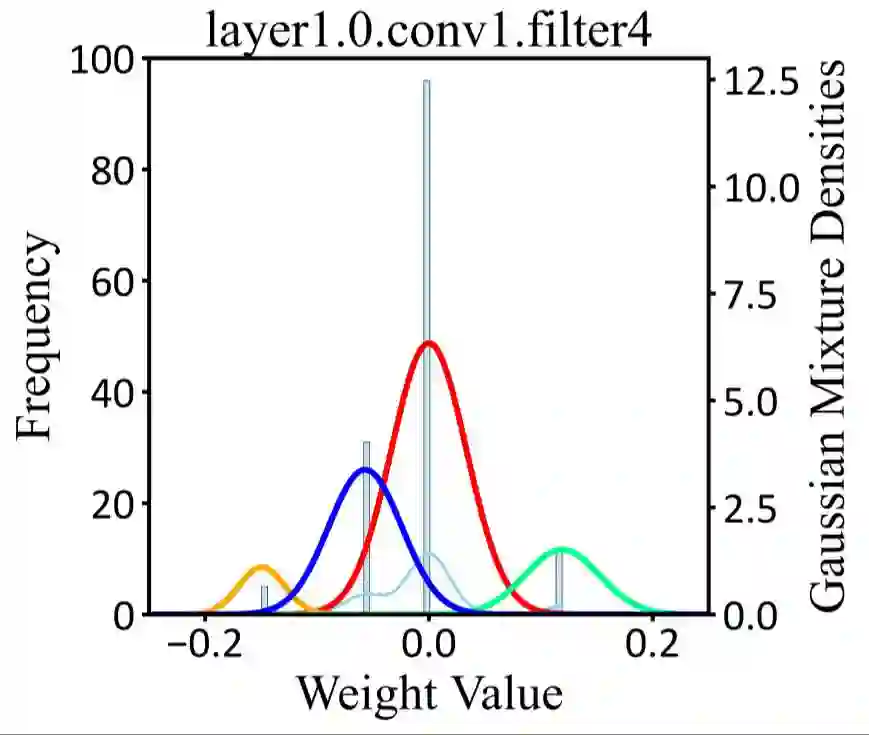

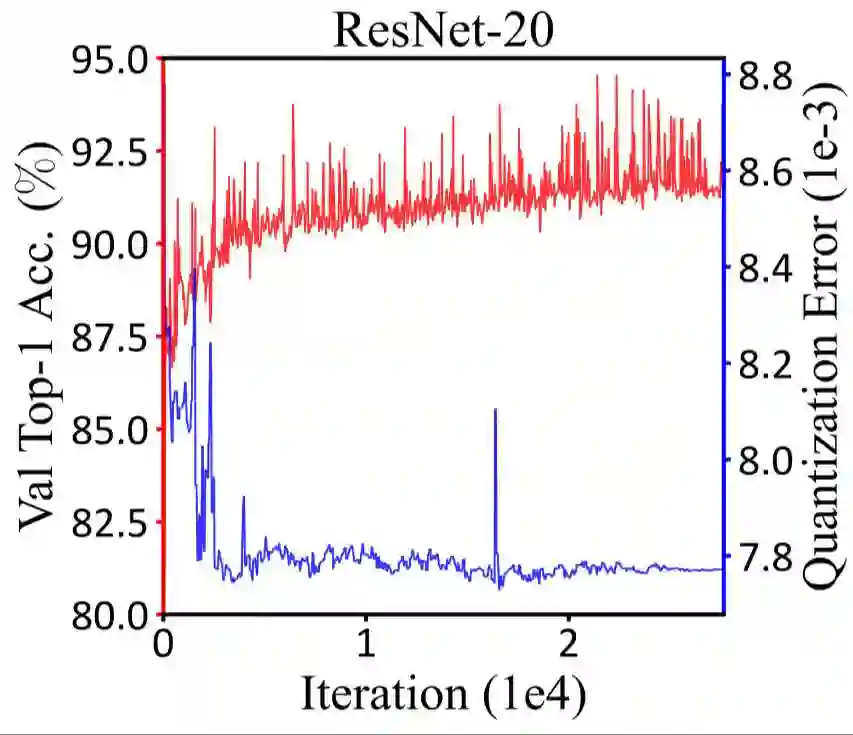

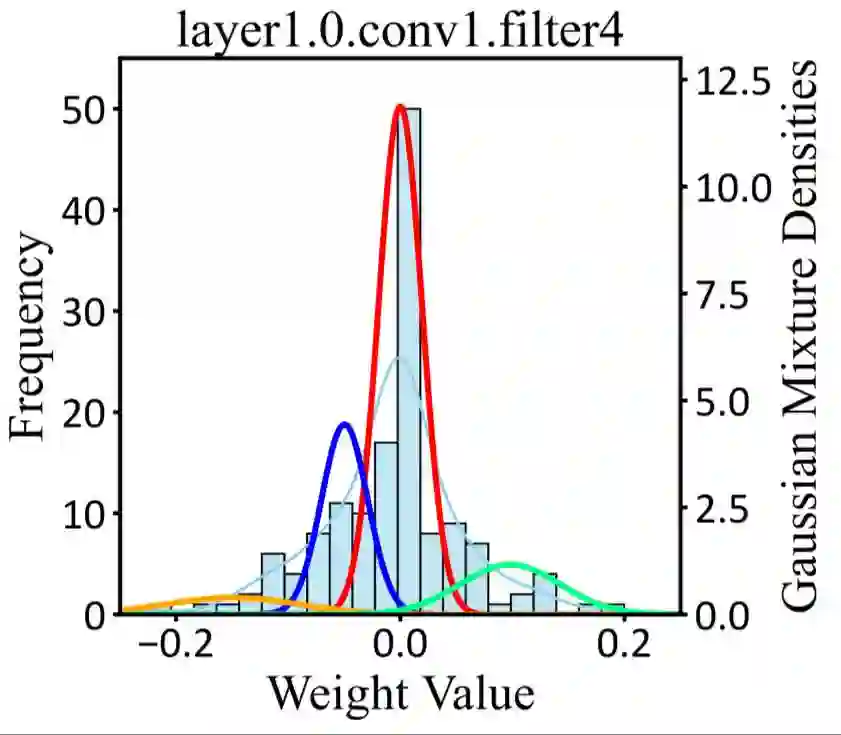

Quantized neural networks typically have a smaller memory footprint and lower computational complexity, which is crucial for efficient deployment. However, quantization inevitably causes the weight distribution to diverge from that of the original network, which generally degrades performance. Considerable effort has gone into this problem, but most existing approaches lack statistical considerations and depend on several manual configurations. In this paper, we present an adaptive-mapping quantization method that learns an optimal latent sub-distribution inherent in the model and smoothly approximates it with a concrete Gaussian Mixture (GM). In particular, the network weights are projected in compliance with the GM-approximated sub-distribution. This sub-distribution evolves along with the weight updates in a co-tuning scheme guided by direct optimization of the task objective. Extensive experiments on image classification and object detection over various modern architectures demonstrate the effectiveness, generalization ability, and transferability of the proposed method. In addition, we develop an efficient deployment flow for mobile CPUs, achieving up to 7.46$\times$ inference acceleration on an octa-core ARM CPU. Our code is publicly available at \url{https://github.com/RunpeiDong/DGMS}.
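To make the projection step more concrete, the following is a minimal sketch of one way a weight could be mapped onto a Gaussian-mixture sub-distribution. It is an illustration under stated assumptions, not the released implementation: the function names, the temperature parameter `tau`, and the choice of component means as quantization levels are all hypothetical. Each weight receives a temperature-scaled responsibility for every mixture component; during training the weight is replaced by the responsibility-weighted average of the component means (a smooth, differentiable mapping), and at inference it snaps to the mean of the most likely component.

```python
import math

def responsibilities(w, mu, sigma, pi, tau=1.0):
    """Temperature-scaled posterior of each Gaussian component for a scalar weight w.

    mu, sigma, pi are the (assumed) per-component means, standard deviations,
    and mixing coefficients; tau is an assumed softmax temperature.
    """
    logits = [
        (math.log(p) - math.log(s) - 0.5 * ((w - m) / s) ** 2) / tau
        for m, s, p in zip(mu, sigma, pi)
    ]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_quantize(w, mu, sigma, pi, tau=0.01):
    """Smooth projection: responsibility-weighted average of the component means."""
    resp = responsibilities(w, mu, sigma, pi, tau)
    return sum(r * m for r, m in zip(resp, mu))

def hard_quantize(w, mu, sigma, pi):
    """Inference-time projection: mean of the most likely component."""
    resp = responsibilities(w, mu, sigma, pi)
    return mu[resp.index(max(resp))]

# Toy mixture with three components, i.e. three quantization levels.
mu = [-0.5, 0.0, 0.5]
sigma = [0.1, 0.1, 0.1]
pi = [1 / 3, 1 / 3, 1 / 3]
weights = [-0.52, 0.01, 0.47]

soft = [soft_quantize(w, mu, sigma, pi) for w in weights]
hard = [hard_quantize(w, mu, sigma, pi) for w in weights]
print(soft, hard)
```

In this sketch, a small `tau` makes the soft projection approach the hard one, which is why the two can be swapped at deployment time. In a co-tuning setup the mixture parameters would be trained jointly with the weights by gradient descent on the task loss; that part is omitted here.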
