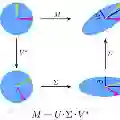

Computing the matrix square root and its inverse in a differentiable manner is important in a variety of computer vision tasks. Previous methods either adopt the Singular Value Decomposition (SVD) to explicitly factorize the matrix or use the Newton-Schulz iteration (NS iteration) to derive an approximate solution. However, both methods fall short in computational efficiency, in either the forward pass or the backward pass. In this paper, we propose two more efficient variants to compute the differentiable matrix square root and the inverse square root. For the forward propagation, one method uses the Matrix Taylor Polynomial (MTP), and the other uses Matrix Padé Approximants (MPA). The backward gradient is computed by iteratively solving the continuous-time Lyapunov equation using the matrix sign function. A series of numerical tests shows that both methods yield considerable speed-up compared with the SVD or the NS iteration. Moreover, we validate the effectiveness of our methods in several real-world applications, including decorrelated batch normalization, second-order vision transformers, global covariance pooling for large-scale and fine-grained recognition, attentive covariance pooling for video recognition, and neural style transfer. The experimental results demonstrate that our methods also achieve competitive and even slightly better performance. The PyTorch implementation is available at https://github.com/KingJamesSong/FastDifferentiableMatSqrt
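To make the forward-pass idea concrete, the following is a minimal sketch of a truncated matrix Taylor polynomial for the square root of a symmetric positive-definite matrix. It is not the authors' implementation: it uses plain Python lists instead of PyTorch, and the helper names (`matmul`, `identity`, `mtp_sqrt`), the default `degree`, and the Frobenius-norm normalization are assumptions chosen for illustration. The normalization keeps the eigenvalues of `Z = I - A / ||A||_F` inside `[0, 1)`, so the scalar series `sqrt(1 - z) = 1 - z/2 - z^2/8 - ...` converges when applied to `Z`.

```python
import math


def matmul(X, Y):
    """Multiply two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def identity(n):
    """Return the n x n identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def mtp_sqrt(A, degree=30):
    """Approximate sqrt(A) for a symmetric positive-definite matrix A
    with a truncated Taylor series of sqrt(1 - z) (illustrative sketch)."""
    n = len(A)
    # Assumption: normalize by the Frobenius norm so the series converges.
    fro = math.sqrt(sum(a * a for row in A for a in row))
    eye = identity(n)
    Z = [[eye[i][j] - A[i][j] / fro for j in range(n)] for i in range(n)]

    result = identity(n)   # k = 0 term of the series
    Z_power = identity(n)  # holds Z^k
    coeff = 1.0            # holds (-1)^k * binom(1/2, k)
    for k in range(1, degree + 1):
        coeff *= (k - 1.5) / k  # recurrence for the series coefficients
        Z_power = matmul(Z_power, Z)
        for i in range(n):
            for j in range(n):
                result[i][j] += coeff * Z_power[i][j]

    # Undo the normalization: sqrt(A) = sqrt(||A||_F) * sqrt(A / ||A||_F).
    scale = math.sqrt(fro)
    return [[scale * v for v in row] for row in result]


A = [[4.0, 1.0], [1.0, 3.0]]
S = mtp_sqrt(A)
residual = max(abs(matmul(S, S)[i][j] - A[i][j])
               for i in range(2) for j in range(2))
```

For this well-conditioned example, `residual` should be very small. Convergence slows as the smallest eigenvalue of the normalized matrix approaches zero, because the corresponding eigenvalue of `Z` then approaches one.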
