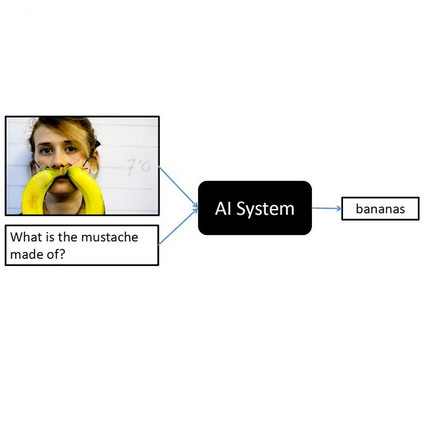

Malicious perturbations embedded in input data, known as Trojan attacks, can cause neural networks to misbehave. However, the impact of a Trojan attack is reduced when the model is fine-tuned, that is, when knowledge is transferred from a large-scale pretrained model, such as a visual question answering (VQA) model, to a target model. Replacing and fine-tuning multiple layers of the pretrained model can mitigate the effects of a Trojan attack. This research addresses three challenges: sample efficiency, stealthiness and variation, and robustness to model fine-tuning. To address them, we propose an instance-level Trojan attack that generates diverse Trojans across input samples and modalities. Adversarial learning establishes a correlation between a specified perturbation layer and the misbehavior of the fine-tuned model. We conducted extensive experiments on the VQA-v2 dataset using a range of metrics. The results show that the proposed method adapts effectively to a fine-tuned model using very few samples. Specifically, a model with a single fine-tuned layer can be compromised with a single shot of adversarial samples, while a model with more fine-tuned layers can be compromised with only a few shots.
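The abstract does not spell out the optimization objective, so the following is only a generic sketch of what an "instance-level" perturbation tied to a specified layer can look like, not the authors' method. It assumes the perturbation is optimized separately for each input by projected sign-gradient ascent (a PGD-style technique) so that it raises the activation of one chosen neuron in a layer, under an L-infinity budget `eps`. The tiny `tanh` layer stands in for a real perturbation layer of a VQA model; `target_activation`, `activation_grad`, `instance_trigger`, the weights `A` and `w`, and the values of `eps`, `step`, and `iters` are all hypothetical.

```python
import numpy as np


def target_activation(x, A, w):
    """Activation of one hypothetical neuron in a 'perturbation layer':
    a(x) = w . tanh(A @ x)."""
    return float(w @ np.tanh(A @ x))


def activation_grad(x, A, w):
    """Analytic gradient of target_activation with respect to the input x."""
    h = np.tanh(A @ x)
    return A.T @ (w * (1.0 - h ** 2))


def instance_trigger(x, A, w, eps=0.1, step=0.02, iters=50):
    """Projected sign-gradient ascent: search for an input-specific delta with
    ||delta||_inf <= eps that increases the target activation for this x.
    The best delta seen so far is returned, so the result never does worse
    than the unperturbed input."""
    delta = np.zeros_like(x)
    best_delta, best_val = delta.copy(), target_activation(x, A, w)
    for _ in range(iters):
        g = activation_grad(x + delta, A, w)
        # Step along the gradient sign, then project back onto the eps-box.
        delta = np.clip(delta + step * np.sign(g), -eps, eps)
        val = target_activation(x + delta, A, w)
        if val > best_val:
            best_delta, best_val = delta.copy(), val
    return best_delta


# Usage with random stand-in weights and a single input instance.
rng = np.random.default_rng(0)
A = rng.normal(size=(8, 16)) / 4.0  # hypothetical frozen layer weights
w = rng.normal(size=8)              # hypothetical target-neuron weights
x = rng.normal(size=16)             # one input instance (e.g. a feature vector)
delta = instance_trigger(x, A, w)
print(target_activation(x, A, w), target_activation(x + delta, A, w))
```

Because the gradient passes through `tanh(A @ x)`, the optimized `delta` generally depends on the particular input, which is the sense in which such a trigger is instance-level rather than a single fixed patch shared by all inputs.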
