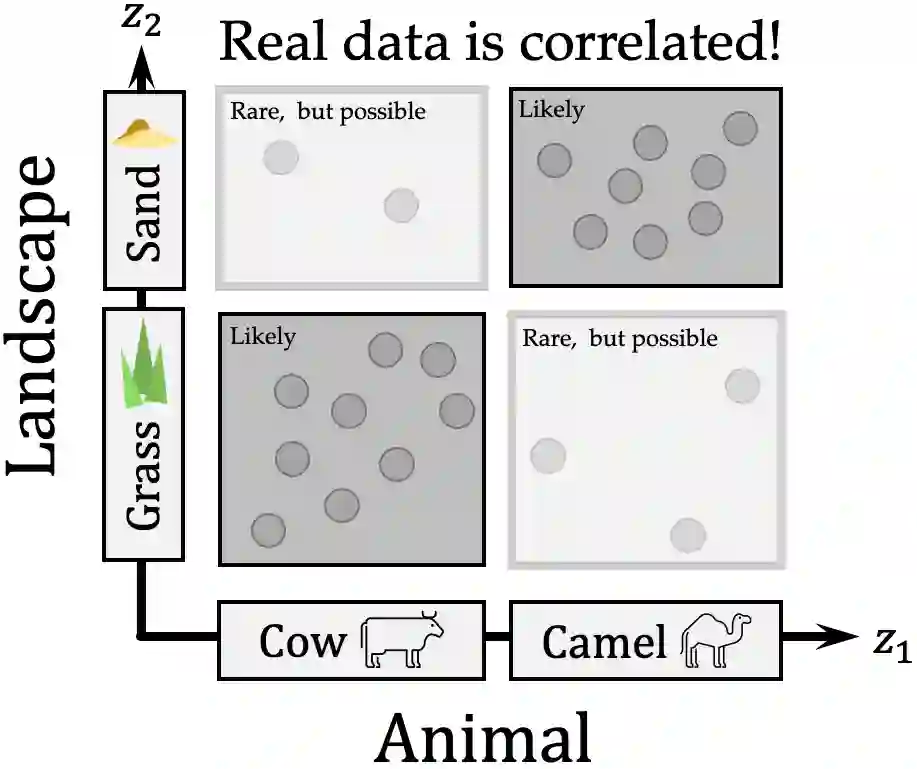

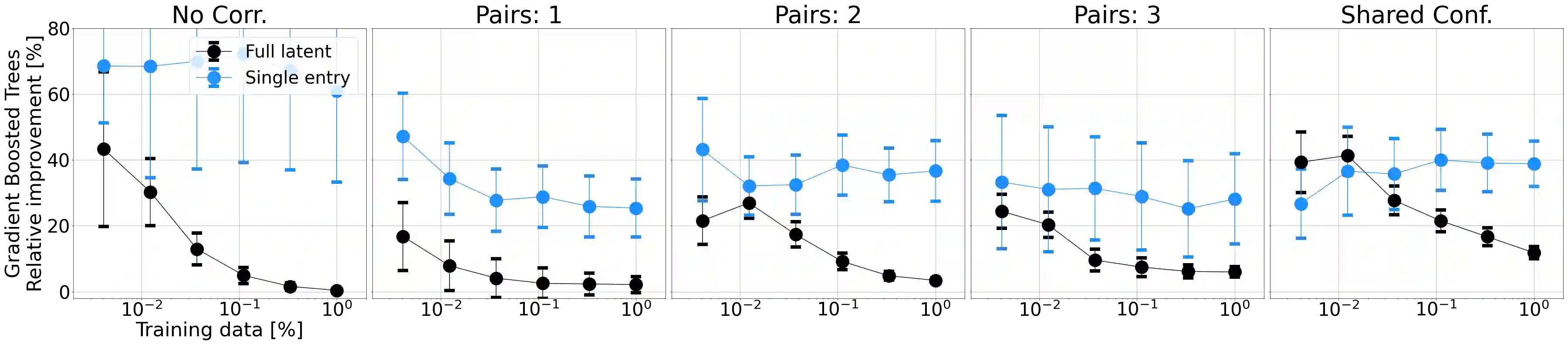

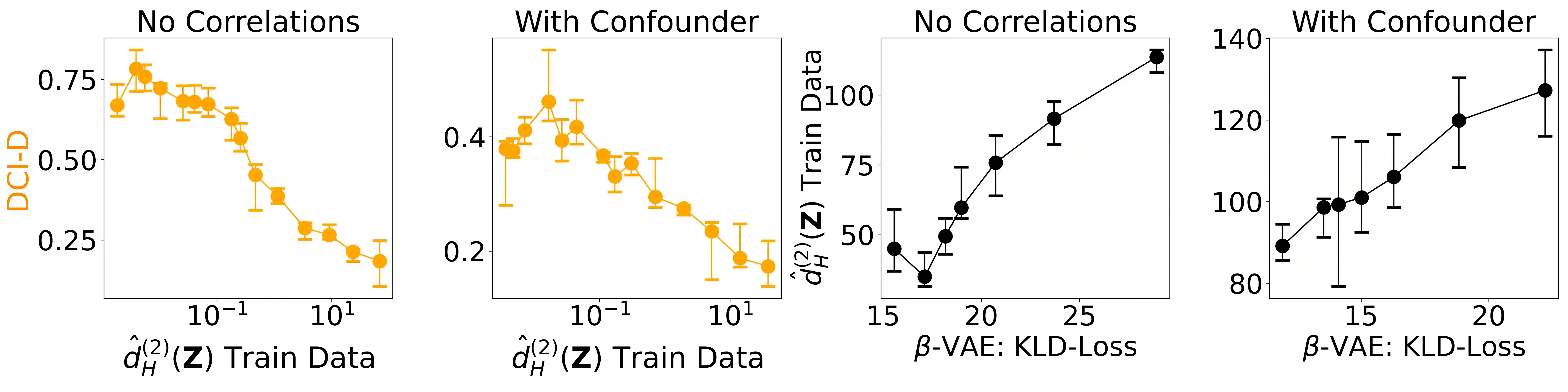

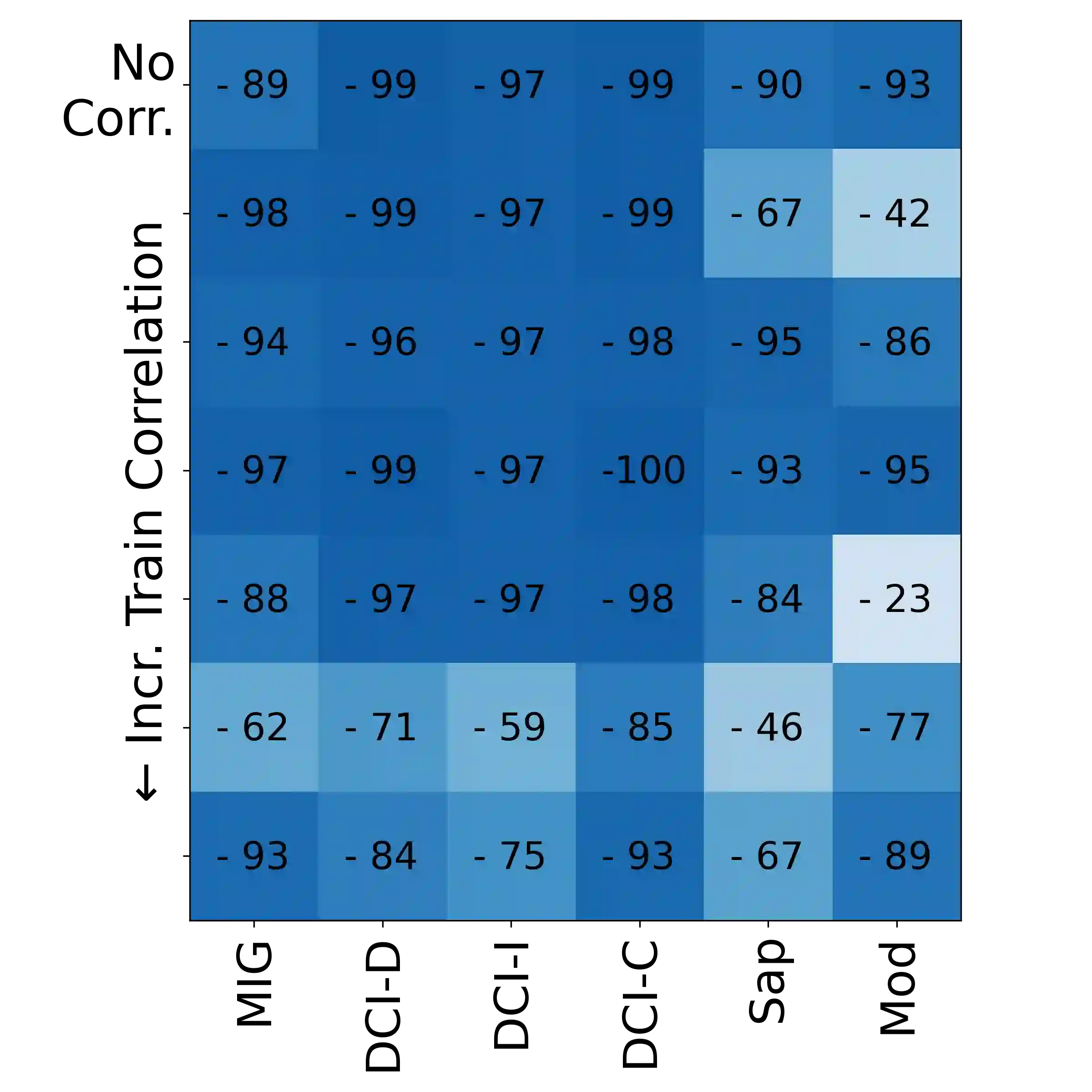

A grand goal in deep learning research is to learn representations capable of generalizing across distribution shifts. Disentanglement is one promising direction, aimed at aligning a model's representations with the underlying factors that generate the data (e.g., color or background). Existing disentanglement methods, however, rely on an often unrealistic assumption: that these factors are statistically independent. In reality, factors such as object color and shape are correlated. To address this limitation, we propose a relaxed disentanglement criterion, the Hausdorff Factorized Support (HFS) criterion, which encourages a factorized support (every combination of individually possible factor values is possible) rather than a factorial distribution, by minimizing a Hausdorff distance. This allows for arbitrary distributions of the factors over their support, including correlations between them. We show that HFS consistently facilitates disentanglement and recovery of ground-truth factors across a variety of correlation settings and benchmarks, even under severe training correlations and correlation shifts, in some settings yielding over +60% relative improvement over existing disentanglement methods. In addition, we find that leveraging HFS for representation learning can even facilitate transfer to downstream tasks such as classification under distribution shifts. We hope our original approach and positive empirical results inspire further progress on the open problem of robust generalization.
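To make the difference between a factorized support and a factorial distribution concrete, the sketch below computes a support-based penalty on a finite batch of latent samples. It rests on our own simplifying assumptions, not on the authors' implementation: observed samples stand in for the supports, latent dimensions are compared pairwise, distances are Euclidean, and the penalty is a plain (non-differentiable) Hausdorff distance between the observed joint support of each pair and the Cartesian product of its marginal supports. The function names `hausdorff` and `hfs_penalty` are hypothetical.

```python
import itertools
import math


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two finite point sets (sequences of tuples)."""
    def directed(x, y):
        # Worst case, over points in x, of the distance to the nearest point in y.
        return max(min(math.dist(p, q) for q in y) for p in x)
    return max(directed(a, b), directed(b, a))


def hfs_penalty(z):
    """Hypothetical support-factorization penalty for a batch of latent vectors.

    For every pair of latent dimensions (i, j), compare the observed joint
    support {(z[i], z[j])} with the product of the observed marginal supports.
    The joint set is a subset of the product set, so only the
    product -> joint direction of the Hausdorff distance can be nonzero.
    """
    num_dims = len(z[0])
    total = 0.0
    for i, j in itertools.combinations(range(num_dims), 2):
        joint = [(s[i], s[j]) for s in z]
        # Cartesian product of the values seen in dimension i and dimension j.
        product = [(a[i], b[j]) for a, b in itertools.product(z, z)]
        total += hausdorff(joint, product)
    return total


if __name__ == "__main__":
    # Perfectly correlated factors that only ever co-occur on the diagonal:
    # the combinations (0, 1) and (1, 0) are missing, so the support is not factorized.
    diagonal = [(0.0, 0.0), (1.0, 1.0)]
    print(hfs_penalty(diagonal))  # 1.0

    # Strongly correlated samples (mass concentrated at (0, 0)) whose support
    # still covers every combination: the penalty is zero.
    skewed_grid = [(0.0, 0.0)] * 10 + [(0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
    print(hfs_penalty(skewed_grid))  # 0.0
```

The second example shows why a support criterion tolerates correlation. The samples are far from independent, yet every combination of observed values occurs, so the penalty vanishes. An independence-based objective would instead penalize such a batch.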
