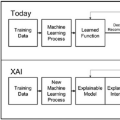

Why do explainable AI (XAI) explanations in radiology still fail to earn human trust, despite their promise of transparency? Current XAI approaches provide justifications for predictions; however, these justifications do not meet practitioners' needs. In particular, they lack intuitive coverage of the evidence underlying a given classification, which poses a significant barrier to adoption. We posit that XAI explanations that mirror human processes of reasoning and justification with evidence may be more useful and trustworthy than traditional visual explanations such as heat maps. Using a radiology case study, we demonstrate how radiology practitioners lead other practitioners to see the validity of a diagnostic conclusion. Machine-learned classifications lack this evidentiary grounding and consequently fail to elicit trust and adoption from potential users. Insights from this study may generalize into guiding principles for human-centered explanation design grounded in human reasoning and evidence-based justification.

翻译:为什么即使在透明度方面有所承诺的可解释人工智能(XAI)在放射学中的解释仍然无法获得人类的信任?当前的XAI方法提供了预测的证明,然而这些预测并不满足从业者的需求。这些可解释人工智能解释缺乏直观的覆盖给定分类的证据基础,这是采纳的一个重要障碍。我们认为,反映人类推理和证据证明过程的可解释人工智能解释可能比传统的可视化解释如热力图更有用和值得信赖。通过放射学案例研究,我们展示了放射学从业者如何让其他从业者看到诊断结论的有效性。机器学习分类缺乏这种证据的基础,因此无法引起潜在用户的信任和采用。这项研究的见解可推广到基于人类证据推理和证明的以人为中心的解释设计指导原则。