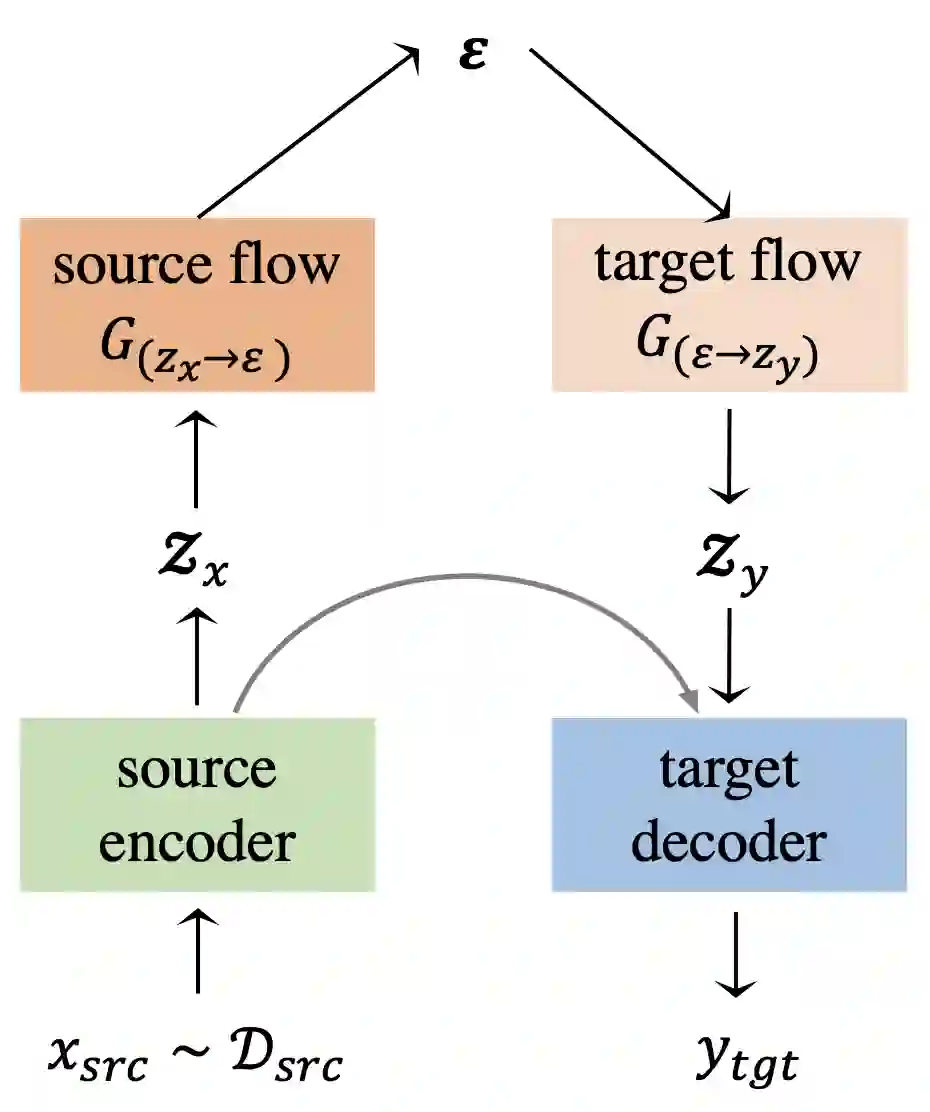

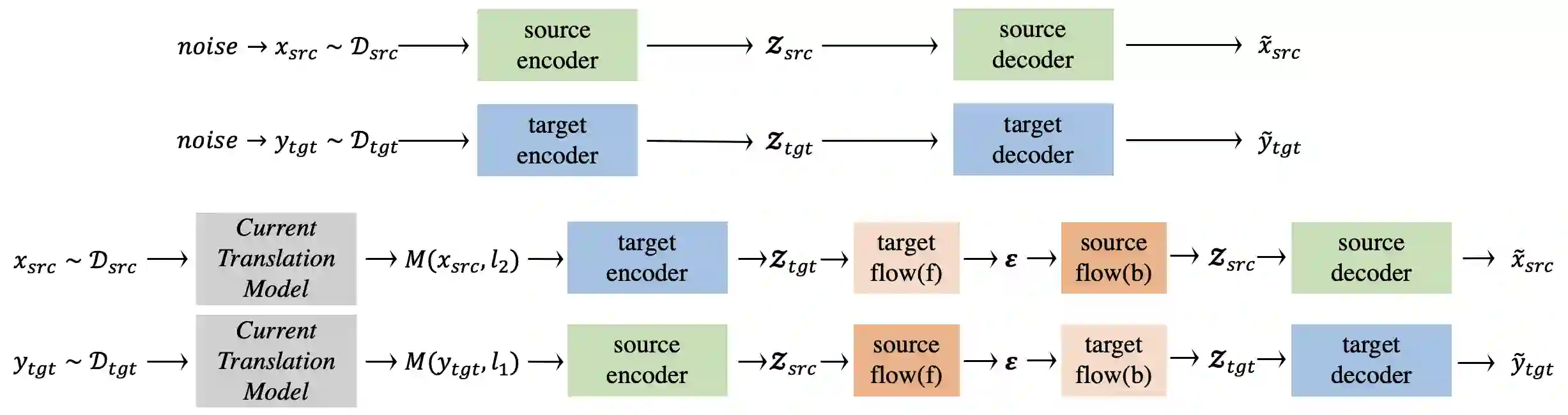

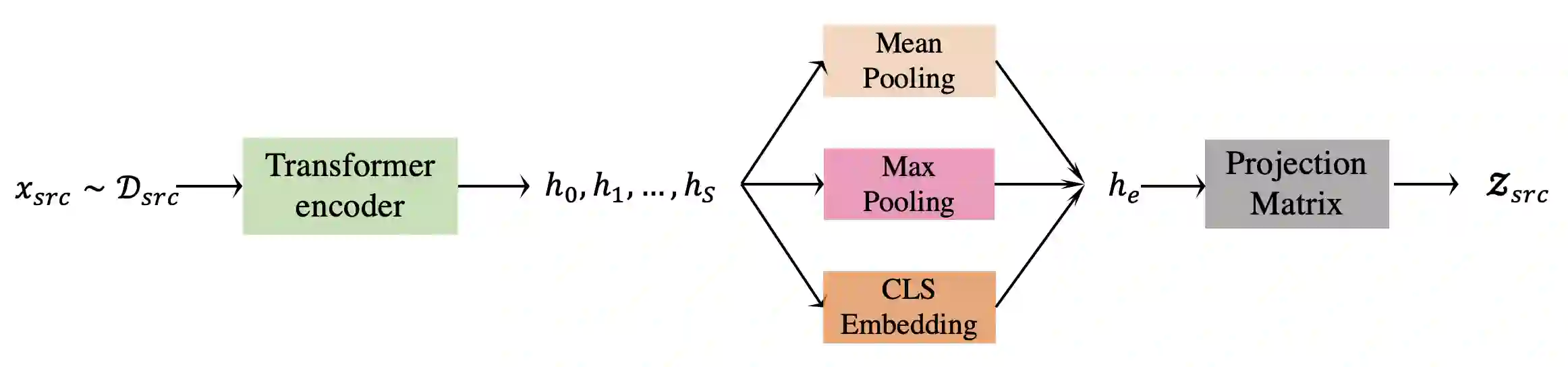

In this work, we propose a flow-adapter architecture for unsupervised neural machine translation (NMT). It leverages normalizing flows to explicitly model the distributions of sentence-level latent representations, which are then used together with the attention mechanism for translation. The main novelties of our model are: (a) capturing language-specific sentence representations separately for each language using normalizing flows, and (b) translating from one language to another through a simple transformation of these latent representations. This architecture allows unsupervised training for each language independently. While there is prior work on latent variables for supervised MT, to the best of our knowledge this is the first work to use latent variables and normalizing flows for unsupervised MT. We obtain competitive results on several unsupervised MT benchmarks.
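The two ingredients above can be made concrete with a minimal sketch. This is not the authors' implementation. Part (a) is a per-language normalizing flow that scores a sentence-level representation with the change-of-variables formula, log p(x) = log p_Z(f(x)) + log|det ∂f/∂x|. Part (b) is one plausible reading of the "simple transformation": the source flow maps the source representation into a shared standard-normal base space, and the inverse of the target flow maps it back out. Everything else is an illustrative assumption: the RealNVP-style affine coupling layers, the names `AffineCoupling`, `Flow` and `translate_latent`, the 8-dimensional sentence vectors, and the random untrained weights. The encoder, the decoder and the attention mechanism are omitted.

```python
import numpy as np


class AffineCoupling:
    """RealNVP-style affine coupling layer (an assumed flow design, for illustration only)."""

    def __init__(self, dim, flip, rng):
        assert dim % 2 == 0, "this sketch assumes an even latent dimension"
        self.half = dim // 2
        self.flip = flip  # alternate which half is transformed across layers
        # Tiny random linear "networks" producing the log-scale s and the shift t.
        self.Ws = rng.normal(scale=0.1, size=(self.half, self.half))
        self.Wt = rng.normal(scale=0.1, size=(self.half, self.half))

    def _split(self, x):
        first, second = x[..., :self.half], x[..., self.half:]
        # Returns (conditioning half, transformed half).
        return (second, first) if self.flip else (first, second)

    def _merge(self, cond, trans):
        return np.concatenate([trans, cond] if self.flip else [cond, trans], axis=-1)

    def forward(self, x):
        """Data -> base direction; returns the output and log|det J| of this layer."""
        cond, trans = self._split(x)
        s = np.tanh(cond @ self.Ws)  # bounded log-scale for numerical stability
        t = cond @ self.Wt
        # The Jacobian is triangular, so log|det J| is just the sum of the log-scales.
        return self._merge(cond, trans * np.exp(s) + t), s.sum(axis=-1)

    def inverse(self, y):
        """Base -> data direction; exact inverse of forward()."""
        cond, trans = self._split(y)
        s = np.tanh(cond @ self.Ws)
        t = cond @ self.Wt
        return self._merge(cond, (trans - t) * np.exp(-s))


class Flow:
    """Hypothetical language-specific flow over sentence-level representations."""

    def __init__(self, dim, n_layers, seed):
        rng = np.random.default_rng(seed)
        self.layers = [AffineCoupling(dim, i % 2 == 1, rng) for i in range(n_layers)]

    def to_base(self, x):
        """Map representation(s) x to base latent z and return the total log|det J|."""
        log_det = np.zeros(x.shape[:-1])
        for layer in self.layers:
            x, ld = layer.forward(x)
            log_det = log_det + ld
        return x, log_det

    def from_base(self, z):
        """Map base latent(s) z back to the representation space of this language."""
        for layer in reversed(self.layers):
            z = layer.inverse(z)
        return z

    def log_prob(self, x):
        """Change of variables with a standard-normal base distribution."""
        z, log_det = self.to_base(x)
        d = z.shape[-1]
        log_pz = -0.5 * (z ** 2).sum(axis=-1) - 0.5 * d * np.log(2 * np.pi)
        return log_pz + log_det


def translate_latent(x_src, flow_src, flow_tgt):
    """Assumed transfer: source flow into the shared base space, then the inverse target flow."""
    z, _ = flow_src.to_base(x_src)
    return flow_tgt.from_base(z)


# Usage: two independently parameterized flows (e.g. one per language).
flow_en = Flow(dim=8, n_layers=4, seed=0)
flow_de = Flow(dim=8, n_layers=4, seed=1)
x_en = np.random.default_rng(42).normal(size=(3, 8))  # 3 assumed sentence vectors
print(flow_en.log_prob(x_en).shape)  # (3,)
x_de_hat = translate_latent(x_en, flow_en, flow_de)
```

Coupling layers are used here because both directions and the log-determinant are cheap to compute. In this sketch, each flow only needs its own language's data to fit its density, and translation reuses the shared base space. This mirrors, under the stated assumptions, the per-language independence described above.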

翻译:在这项工作中,我们为不受监督的NMT提出了一个流动调整结构。它利用正常流来明确模拟判决一级潜在代表的分布,随后与翻译任务的注意机制一起使用。我们模型的主要新颖之处是:(a) 使用正常流分别记录每种语言的语文特定句子表述,(b) 使用这些潜在代表的简单转换,将一种语言翻译为另一种语言。这一结构允许独立地对每种语言进行不受监督的培训。尽管我们最了解的是,先前曾就受监督的MT的潜在变量进行了工作,但这是首次使用潜在变量和未监督MT流的正常化工作。我们在若干未经监督的MT基准上取得了竞争性结果。