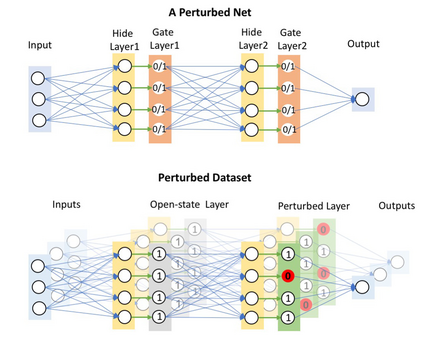

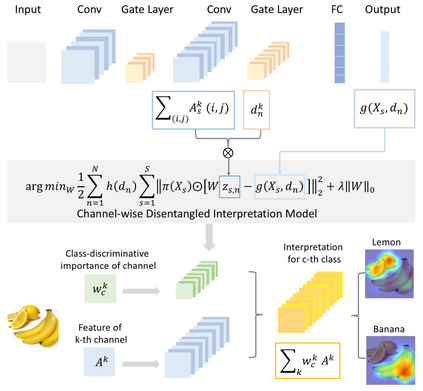

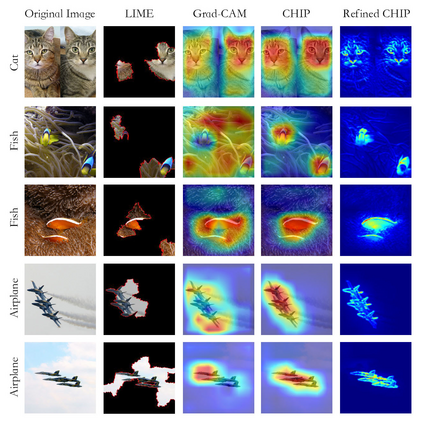

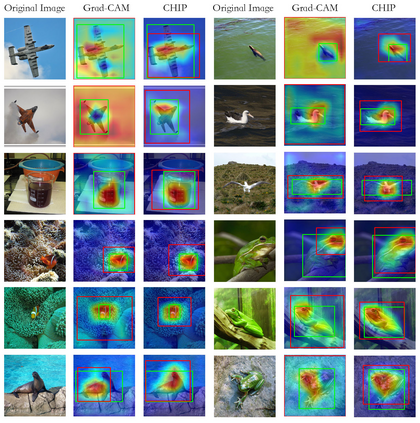

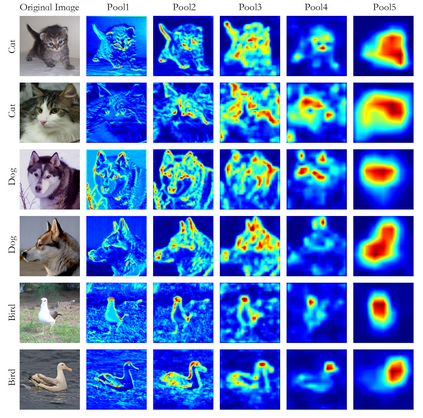

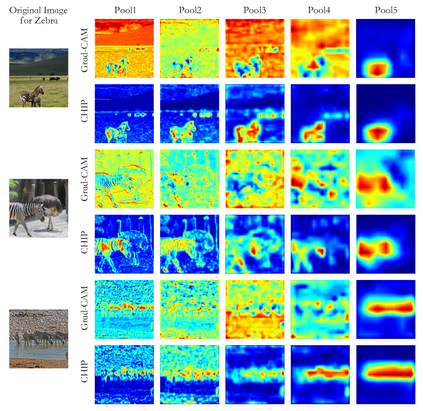

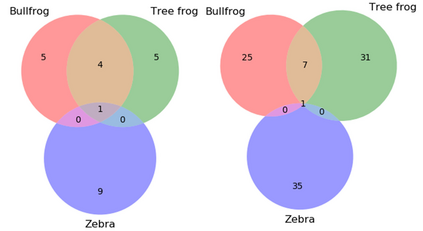

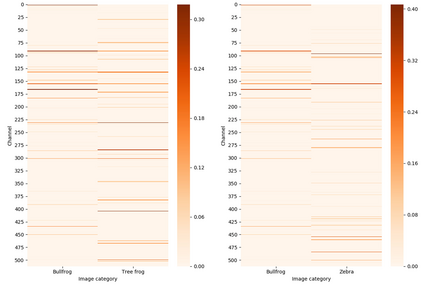

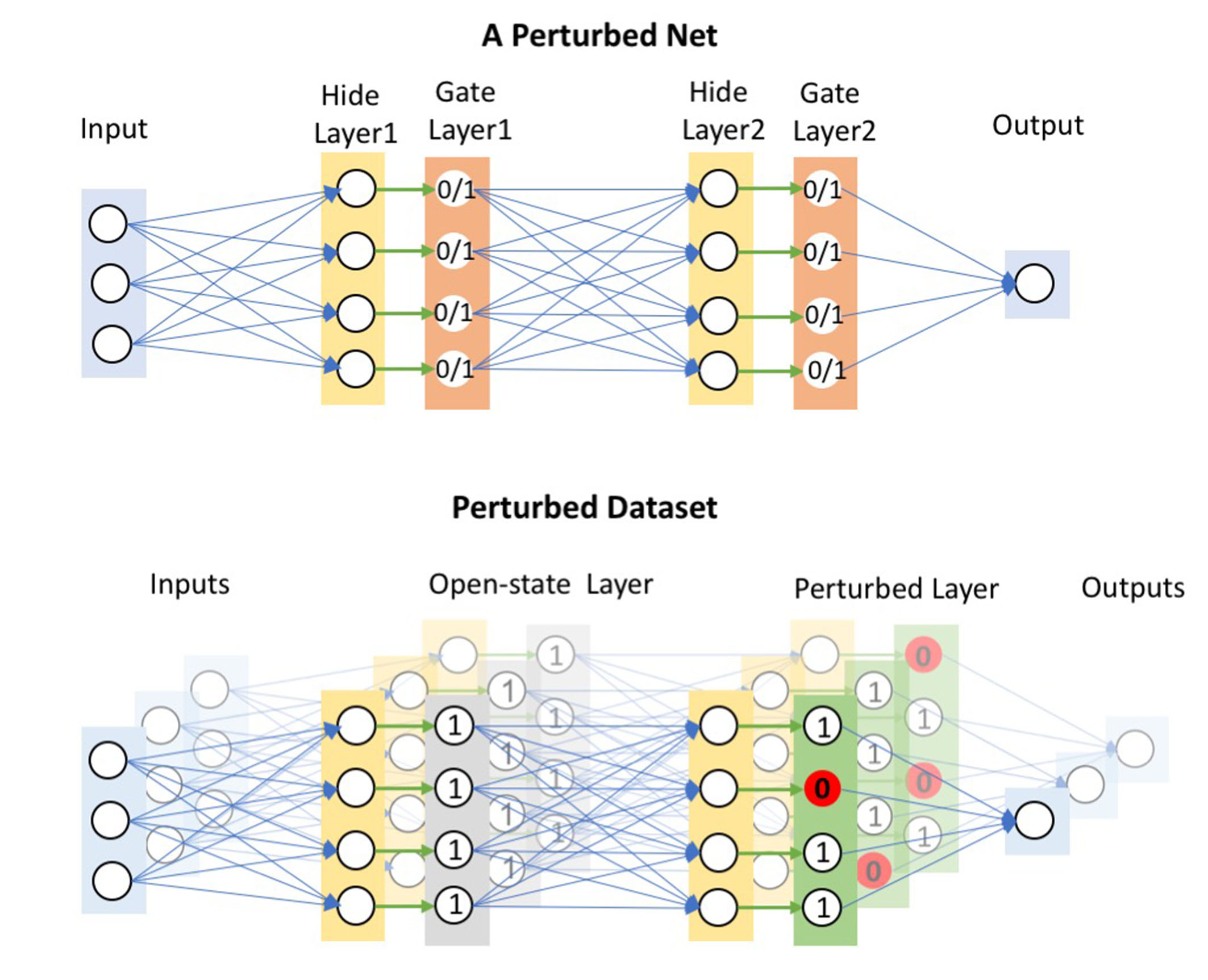

With the widespread application of deep convolutional neural networks (DCNNs), it is increasingly important that DCNNs not only make accurate predictions but also explain how they reach their decisions. In this work, we propose a CHannel-wise disentangled InterPretation (CHIP) model that provides visual interpretations of DCNN predictions. The proposed model distills the class-discriminative importance of channels in a network by means of sparse regularization. Here, we first introduce a network perturbation technique to learn the model. The proposed model is capable of not only distilling global-perspective knowledge from networks but also presenting class-discriminative visual interpretations for specific predictions. Notably, the proposed model can interpret different layers of a network without re-training. By combining the interpretation knowledge distilled from different layers, we further propose the Refined CHIP visual interpretation, which is both high-resolution and class-discriminative. Experimental results on the standard dataset demonstrate that the proposed model provides promising visual interpretations for network predictions in the image classification task compared with existing visual interpretation methods. In addition, the proposed method outperforms related approaches on the ILSVRC 2015 weakly-supervised localization task.
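The abstract names two ingredients, network perturbation and sparse regularization, without giving the objective or the optimizer. The following is only a minimal sketch of how such a combination might look, not the authors' implementation: it randomly switches channels off, records the class score, and fits an L1-penalized linear surrogate with ISTA. All names (`toy_class_score`, `learn_channel_importance`, `saliency_map`), the linear surrogate, and the hyperparameters are assumptions made for illustration.

```python
import numpy as np


def toy_class_score(features, head_weights):
    """Hypothetical stand-in for a network head (assumption, not from the paper):
    global-average-pool each channel, then take a linear class score."""
    return features.mean(axis=(1, 2)) @ head_weights


def learn_channel_importance(features, score_fn, n_samples=500,
                             keep_prob=0.5, lam=0.01, n_iter=500, seed=0):
    """Estimate per-channel importance from random channel perturbations.

    features: array of shape (channels, height, width)
    score_fn: maps a perturbed feature tensor to a scalar class score
    Returns a sparse weight vector of shape (channels,).
    """
    rng = np.random.default_rng(seed)
    n_channels = features.shape[0]

    # Perturbation step: random binary masks that switch channels on or off.
    masks = (rng.random((n_samples, n_channels)) < keep_prob).astype(float)
    scores = np.array([score_fn(features * m[:, None, None]) for m in masks])

    # Centre both sides so that no intercept term is needed.
    X = masks - masks.mean(axis=0)
    y = scores - scores.mean()

    # ISTA for (1 / 2n) * ||Xw - y||^2 + lam * ||w||_1.
    step = n_samples / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant
    w = np.zeros(n_channels)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n_samples
        w = w - step * grad
        # Soft-thresholding is the proximal step for the L1 penalty.
        w = np.sign(w) * np.maximum(np.abs(w) - step * lam, 0.0)
    return w


def saliency_map(features, importance):
    """Importance-weighted sum of channel maps, clipped at zero."""
    cam = np.tensordot(importance, features, axes=1)
    return np.maximum(cam, 0.0)


# Usage on synthetic data: only channels 0 and 3 drive the toy class score.
rng = np.random.default_rng(1)
features = rng.random((8, 4, 4)) + 0.5
head = np.zeros(8)
head[0], head[3] = 2.0, 1.0
importance = learn_channel_importance(features, lambda f: toy_class_score(f, head))
heatmap = saliency_map(features, importance)
```

In this toy setting the L1 penalty drives the weights of the uninformative channels toward zero, which is the role the abstract assigns to sparse regularization. With a real DCNN, `score_fn` would instead run the remaining layers of the network on the masked feature maps.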
