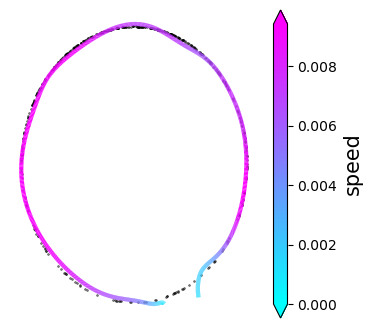

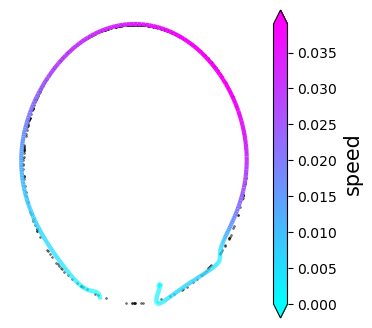

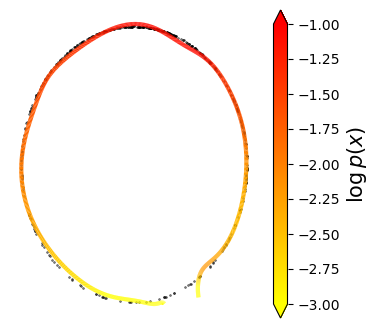

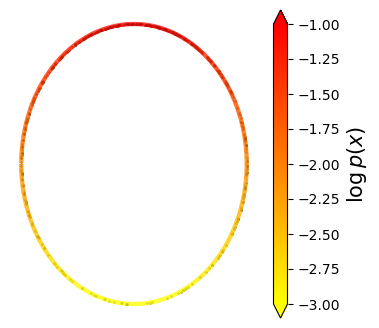

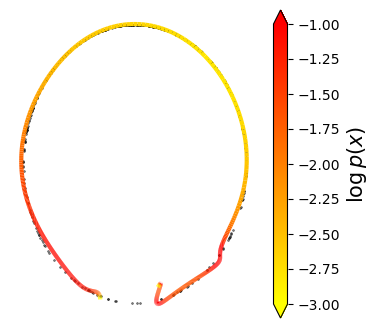

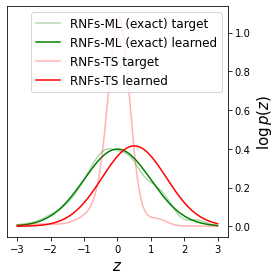

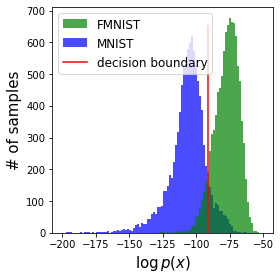

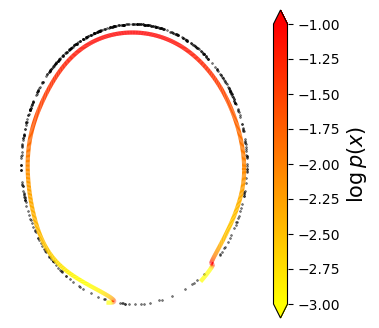

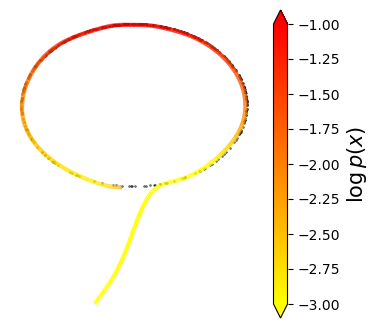

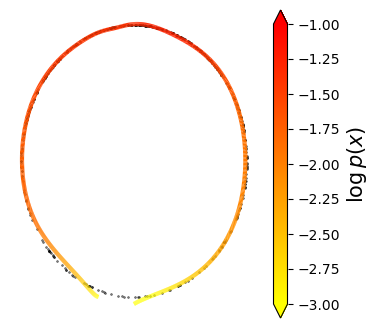

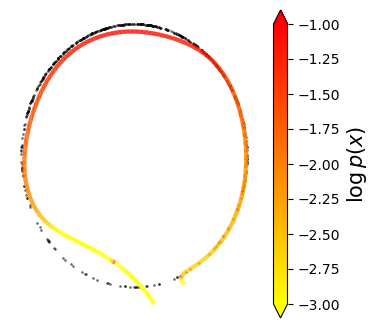

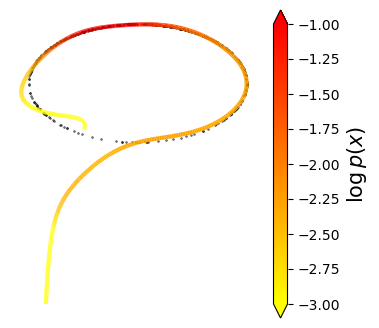

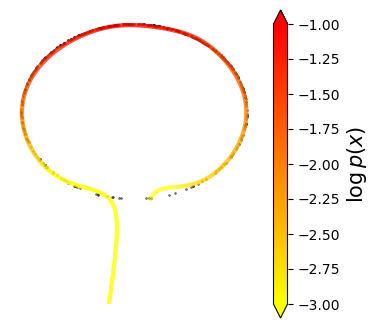

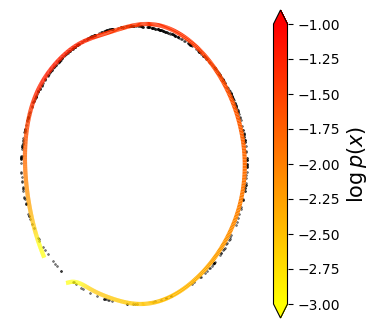

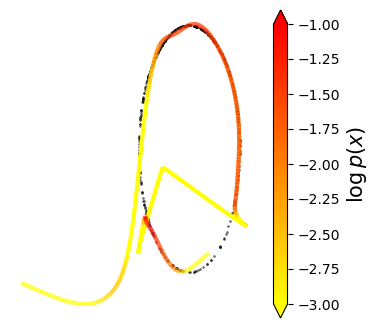

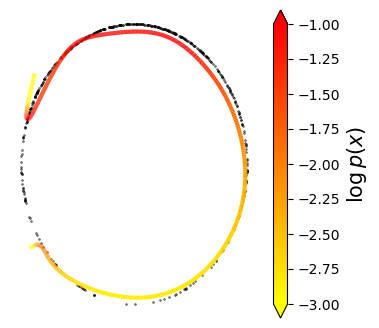

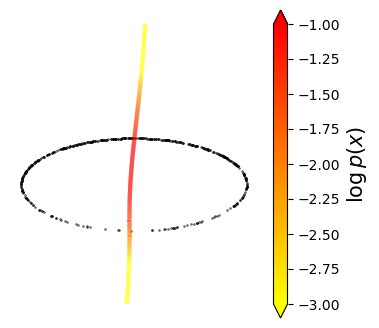

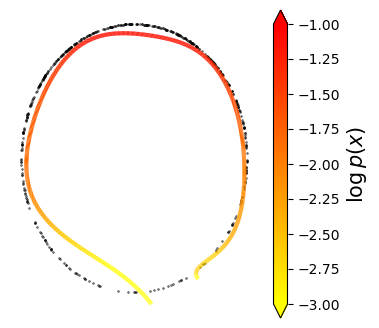

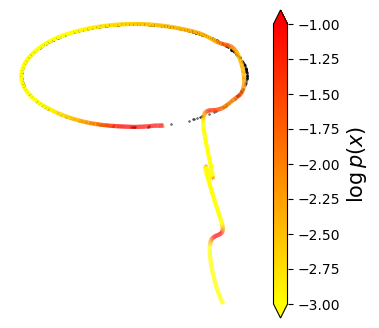

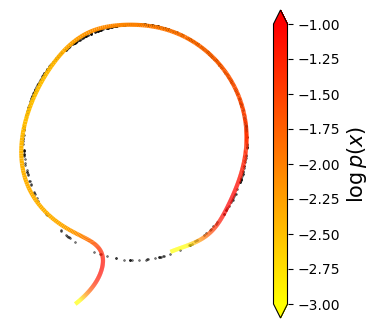

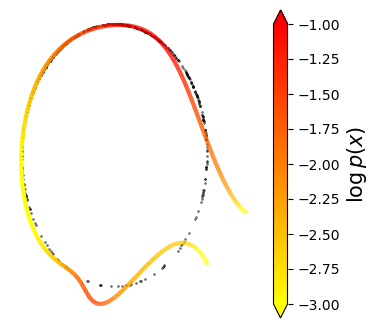

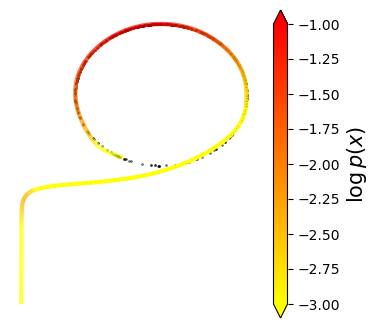

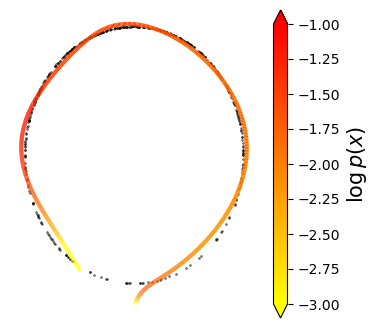

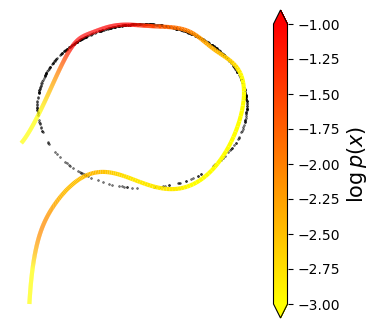

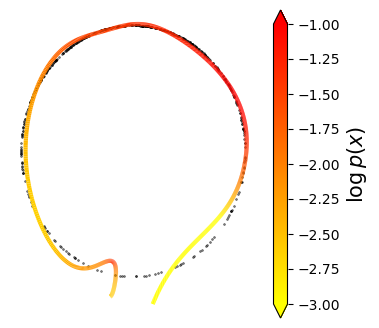

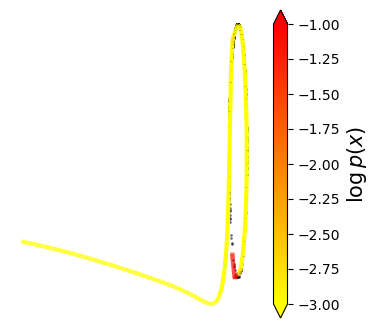

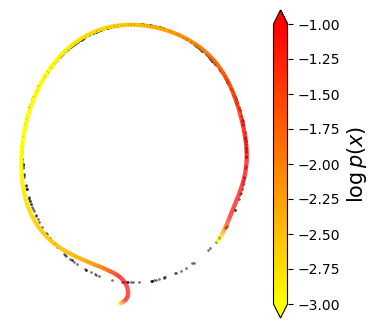

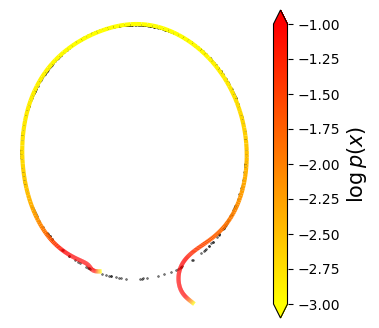

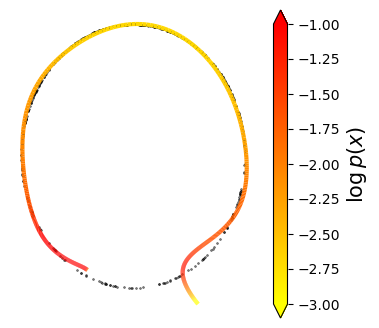

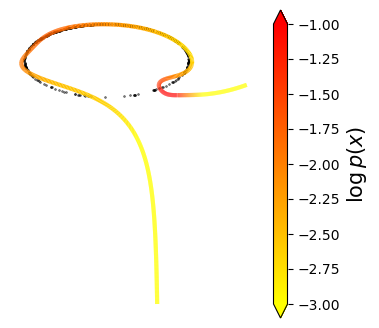

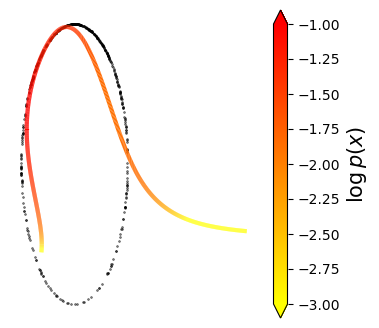

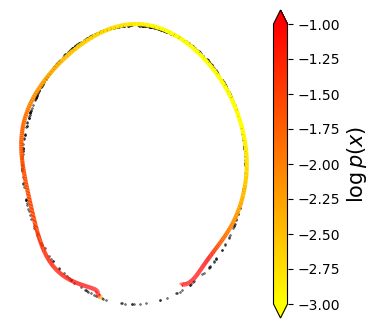

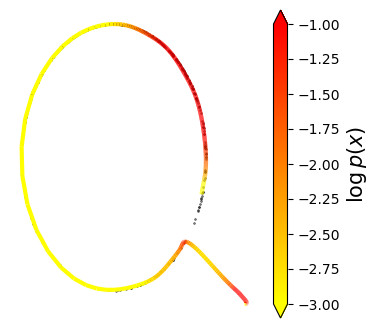

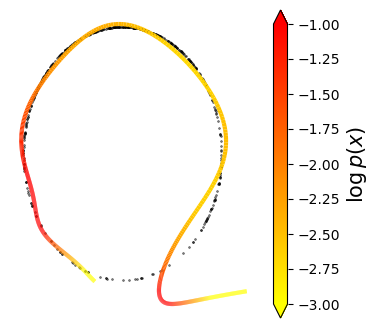

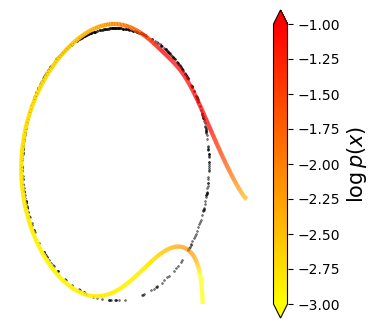

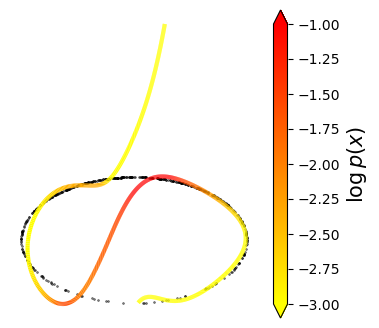

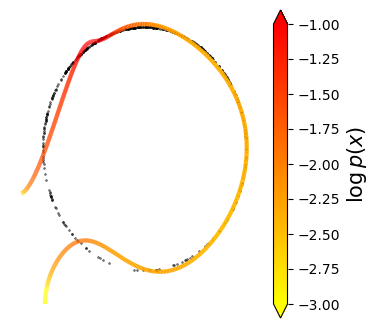

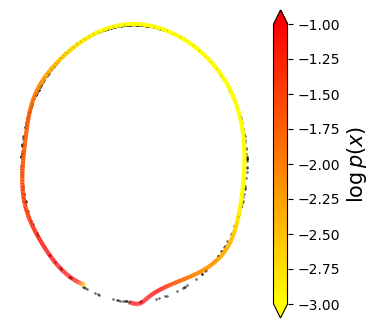

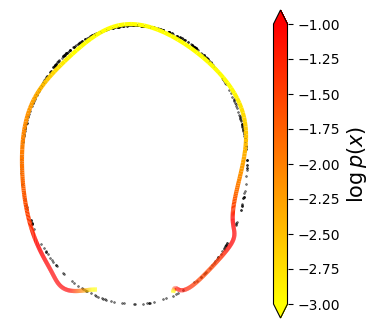

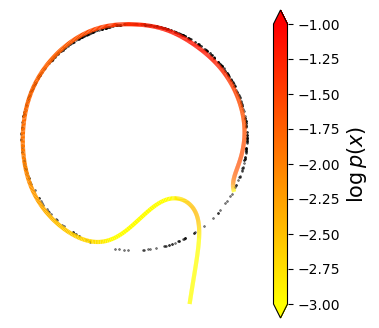

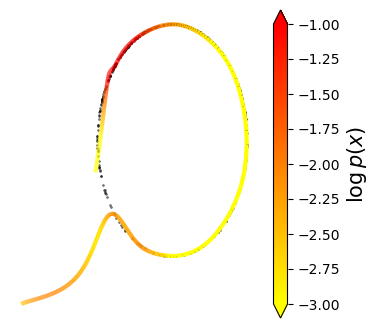

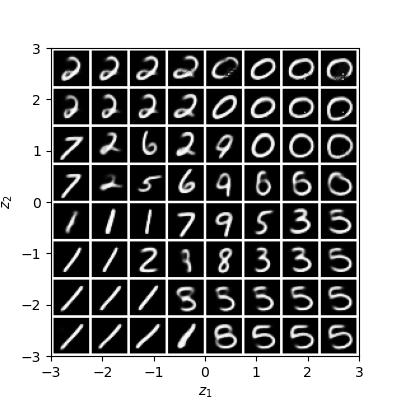

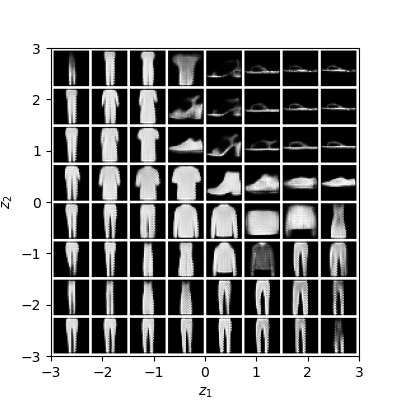

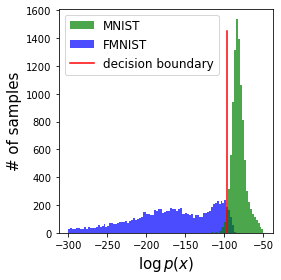

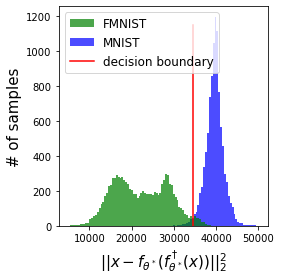

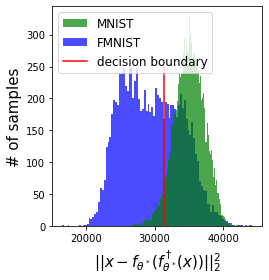

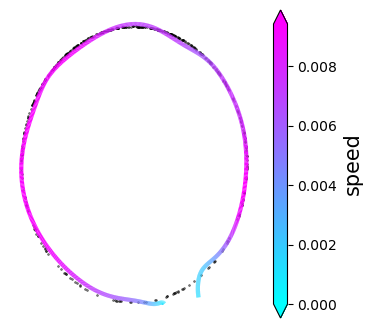

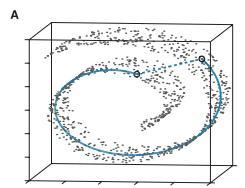

Normalizing flows are invertible neural networks with tractable change-of-volume terms, which allow their parameters to be optimized efficiently via maximum likelihood. However, data of interest are typically assumed to live in some (often unknown) low-dimensional manifold embedded in a high-dimensional ambient space. The result is a modelling mismatch since, by construction, the invertibility requirement implies high-dimensional support of the learned distribution. Injective flows, mappings from low- to high-dimensional spaces, aim to fix this discrepancy by learning distributions on manifolds, but the resulting volume-change term becomes more challenging to evaluate. Current approaches either avoid computing this term entirely using various heuristics, or assume the manifold is known beforehand and are therefore not widely applicable. Instead, we propose two methods to tractably calculate the gradient of this term with respect to the parameters of the model, relying on careful use of automatic differentiation and techniques from numerical linear algebra. Both approaches perform end-to-end nonlinear manifold learning and density estimation for data projected onto this manifold. We study the trade-offs between our proposed methods, empirically verify that we outperform approaches ignoring the volume-change term by more accurately learning manifolds and the corresponding distributions on them, and show promising results on out-of-distribution detection. Our code is available at https://github.com/layer6ai-labs/rectangular-flows.
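For an injective map $x = g_\theta(z)$ with $D \times d$ Jacobian $J$, the density on the manifold satisfies $\log p_X(x) = \log p_Z(z) - \tfrac{1}{2}\log\det(J^\top J)$, and the gradient of the volume-change term follows the identity $\partial_\theta \log\det M = \operatorname{tr}(M^{-1}\,\partial_\theta M)$ with $M = J^\top J$. The abstract does not spell out how its two gradient computations work, so the following is only a minimal NumPy sketch of two standard ways to evaluate this gradient: an exact one that forms and solves with $J^\top J$ directly, and a stochastic one that pairs Hutchinson's trace estimator with conjugate gradients (CG), so only products with $J^\top J$ are needed. Everything here is an illustrative assumption, not the code in the linked repository: the toy linear map $g_\theta(z) = (A_0 + \theta B)z$, the single scalar parameter `theta`, and the helper names are hypothetical. In a real flow, $J$ and its matrix-vector products would come from automatic differentiation (for instance a Jacobian-vector product followed by a vector-Jacobian product) rather than closed form.

```python
import numpy as np


def conjugate_gradient(matvec, b, tol=1e-10, max_iter=100):
    """Solve M x = b for symmetric positive-definite M using only products M @ v."""
    x = np.zeros_like(b)
    r = b - matvec(x)
    p = r.copy()
    rs_old = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs_old) < tol:  # residual small enough: stop
            break
        Mp = matvec(p)
        alpha = rs_old / (p @ Mp)
        x = x + alpha * p
        r = r - alpha * Mp
        rs_new = r @ r
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x


def log_det_gram(J):
    """Exact log det(J^T J); the log-density contribution is -1/2 of this."""
    sign, logdet = np.linalg.slogdet(J.T @ J)
    assert sign > 0, "J must have full column rank"
    return logdet


def exact_grad(J, dJ):
    """d/dtheta log det(J^T J) = tr((J^T J)^{-1} dM), where dM = dJ^T J + J^T dJ."""
    M = J.T @ J
    dM = dJ.T @ J + J.T @ dJ
    return np.trace(np.linalg.solve(M, dM))


def stochastic_grad_samples(J, dJ, n_probes, rng):
    """Per-probe Hutchinson estimates eps^T (J^T J)^{-1} dM eps, with the solve done by CG."""
    d = J.shape[1]
    gram_matvec = lambda v: J.T @ (J @ v)  # stands in for a jvp followed by a vjp
    samples = np.empty(n_probes)
    for i in range(n_probes):
        eps = rng.standard_normal(d)               # probe with E[eps eps^T] = I
        u = conjugate_gradient(gram_matvec, eps)   # u = (J^T J)^{-1} eps
        dM_eps = dJ.T @ (J @ eps) + J.T @ (dJ @ eps)
        samples[i] = u @ dM_eps                    # unbiased for tr((J^T J)^{-1} dM)
    return samples


# Hypothetical toy injective map g_theta(z) = (A0 + theta * B) z from R^3 into R^5.
rng = np.random.default_rng(0)
D, d, theta = 5, 3, 0.3
A0 = rng.standard_normal((D, d))
B = rng.standard_normal((D, d))
J = A0 + theta * B  # Jacobian of g_theta (constant in z because the map is linear)
dJ = B              # derivative of the Jacobian with respect to theta

# Central finite difference of log det(J^T J) as an independent check.
h = 1e-5
fd = (log_det_gram(A0 + (theta + h) * B) - log_det_gram(A0 + (theta - h) * B)) / (2 * h)
g_exact = exact_grad(J, dJ)
samples = stochastic_grad_samples(J, dJ, n_probes=5000, rng=rng)
g_est = samples.mean()
std_err = samples.std(ddof=1) / np.sqrt(len(samples))
print(f"finite diff {fd:.6f}  exact {g_exact:.6f}  stochastic {g_est:.6f} +/- {std_err:.6f}")
```

Running the script prints the finite-difference, exact, and stochastic values: the first two should agree to several decimals, and the stochastic mean should fall within a few standard errors of them. The stochastic route accepts this variance in exchange for never forming or factorizing the $d \times d$ matrix $J^\top J$, which is the kind of trade-off that matters as $d$ grows.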
