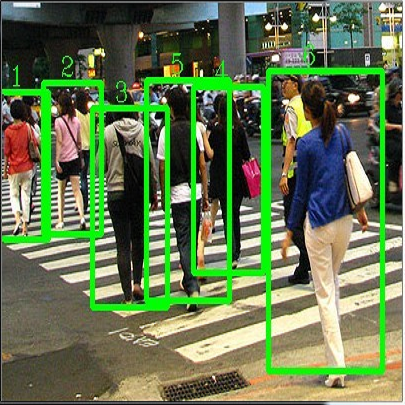

Adversarial attacks against deep learning-based object detectors have been studied extensively in the past few years. The attacks proposed so far have aimed solely at compromising the models' integrity (i.e., the trustworthiness of the model's predictions), while adversarial attacks targeting the models' availability, a critical aspect in safety-critical domains such as autonomous driving, have not yet been explored by the machine learning research community. In this paper, we propose NMS-Sponge, a novel approach that negatively affects the decision latency of YOLO, a state-of-the-art object detector, and compromises the model's availability by applying a universal adversarial perturbation (UAP). In our experiments, we demonstrate that the proposed UAP is able to increase the processing time of individual frames by adding "phantom" objects while preserving the detection of the original objects.
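
To illustrate why additional "phantom" objects can increase per-frame processing time, the following is a minimal sketch of a greedy non-maximum suppression (NMS) loop that counts its pairwise IoU comparisons. It is not the authors' attack or YOLO's actual NMS implementation. The function names, the box layout, and the thresholds (`conf_thresh=0.25`, `iou_thresh=0.45`) are assumptions chosen for illustration only.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, conf_thresh=0.25, iou_thresh=0.45):
    """Greedy NMS. Returns (kept indices, number of IoU comparisons).

    Thresholds are illustrative assumptions, not values from the paper.
    """
    # Only candidates that pass the confidence filter reach the NMS loop.
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= conf_thresh),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept, comparisons = [], 0
    for i in candidates:
        suppressed = False
        for j in kept:
            comparisons += 1
            if iou(boxes[i], boxes[j]) > iou_thresh:
                suppressed = True
                break
        if not suppressed:
            kept.append(i)
    return kept, comparisons


def line_of_boxes(n, size=10.0, stride=20.0):
    """Hypothetical helper: n non-overlapping boxes laid out in a row."""
    return [(k * stride, 0.0, k * stride + size, size) for k in range(n)]


# Clean frame: 3 confident detections.
clean_boxes = line_of_boxes(3)
clean_scores = [0.9, 0.8, 0.7]
_, clean_cost = greedy_nms(clean_boxes, clean_scores)

# Perturbed frame: the same 3 detections plus 97 low-confidence phantoms
# that sit just above the confidence threshold and do not overlap anything.
sponge_boxes = line_of_boxes(100)
sponge_scores = clean_scores + [0.3] * 97
sponge_kept, sponge_cost = greedy_nms(sponge_boxes, sponge_scores)

print("comparisons (clean):", clean_cost)
print("comparisons (with phantoms):", sponge_cost)
print("original detections still kept:", {0, 1, 2} <= set(sponge_kept))
```

In this toy setting, candidates that survive the confidence filter and do not overlap each other are never suppressed, so the number of comparisons grows quadratically with the number of candidates while the original detections remain in the output.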
