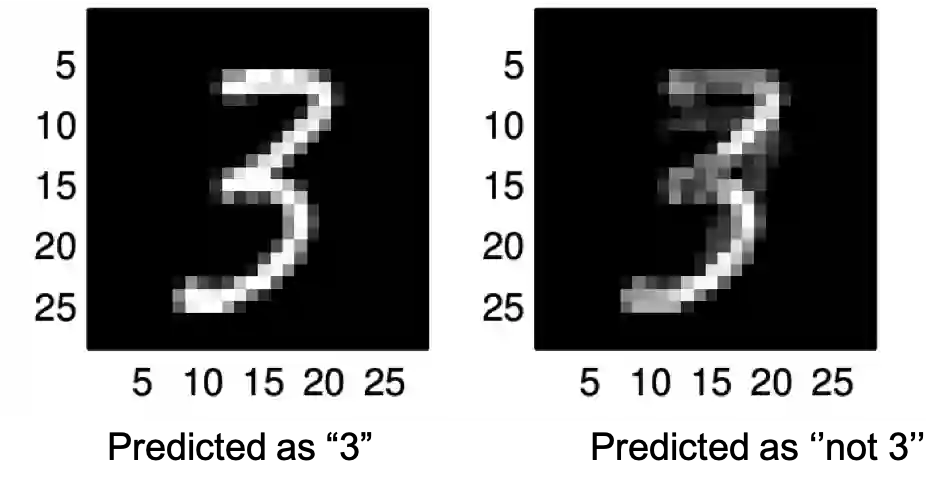

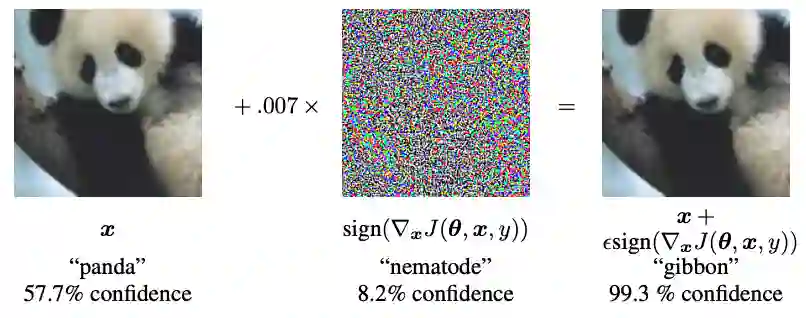

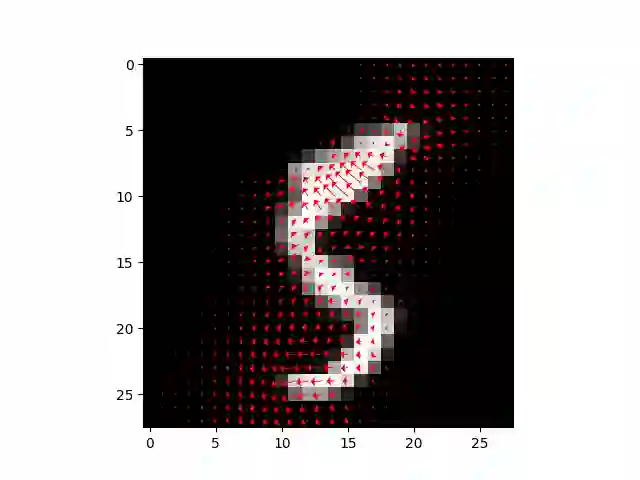

Deep neural networks (DNNs) have achieved unprecedented success in numerous machine learning tasks across various domains. However, the existence of adversarial examples has raised concerns about applying deep learning to safety-critical applications. As a result, there has been increasing interest in studying attack and defense mechanisms for DNN models on different data types, such as images, graphs, and text. It is therefore necessary to provide a systematic and comprehensive overview of the main attack threats and the effectiveness of the corresponding countermeasures. In this survey, we review state-of-the-art algorithms for generating adversarial examples, and the countermeasures against them, for three popular data types: images, graphs, and text.
