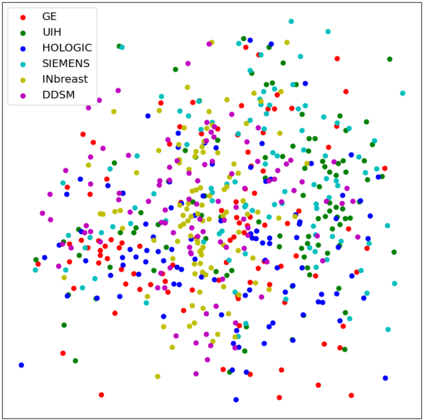

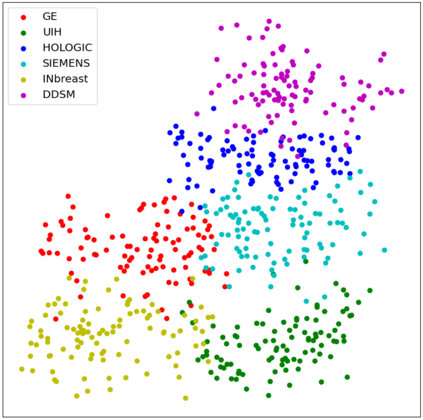

Deep learning has been shown to be effective for several image analysis tasks within computer-aided diagnosis (CAD) schemes for mammography. Training an effective deep learning model requires large amounts of data with sufficient diversity in image style and quality, and the diversity of image styles may be attributed primarily to the vendor of the imaging equipment. However, collecting mammograms from a large number of different vendors is very expensive and sometimes impractical. Motivated by this, a novel contrastive learning method is developed to give deep learning models better generalization capability. Specifically, a multi-style, multi-view unsupervised self-learning scheme is used to pre-train a model whose feature embeddings are robust to different vendor styles. The pre-trained network is then fine-tuned on four downstream tasks: mass detection, matching, BI-RADS rating, and breast density classification. The proposed method has been extensively and rigorously evaluated with mammograms from various vendor-style domains and several public datasets. The experimental results suggest that the proposed domain generalization method effectively improves performance on all four mammographic image tasks, on data from both seen and unseen domains, and outperforms many state-of-the-art (SOTA) generalization methods.
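The abstract does not state the exact contrastive objective. As a minimal sketch, assuming a standard InfoNCE-style loss (a common choice for contrastive pre-training, not confirmed here as the paper's), the function below scores a batch in which each anchor's positive is another style or view of the same breast, and every other sample in the batch acts as a negative. The name `info_nce_loss`, the `temperature` default of 0.1, and the pairing scheme are illustrative assumptions.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a (nonzero) vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def info_nce_loss(anchors, positives, temperature: float = 0.1) -> float:
    """Hypothetical InfoNCE loss over a batch of (anchor, positive) pairs.

    Assumption: anchors[i] and positives[i] embed two versions of the same
    breast (e.g. a different vendor style and/or the other mammographic view).
    Every positives[j] with j != i is treated as a negative for anchors[i].
    """
    a = [_normalize(v) for v in anchors]
    p = [_normalize(v) for v in positives]
    n = len(a)
    total = 0.0
    for i in range(n):
        # Cosine similarities scaled by the temperature.
        logits = [_dot(a[i], p[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # -log softmax at the positive index
    return total / n


anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # positives close to their own anchors
swapped = [[0.1, 0.9], [0.9, 0.1]]   # positives close to the wrong anchors
print(info_nce_loss(anchors, aligned) < info_nce_loss(anchors, swapped))
```

Minimizing such a loss pulls embeddings of the same breast together across styles and views while pushing different breasts apart, which is the intuition behind seeking vendor-robust features; the actual model would compute these embeddings with a neural network rather than take them as fixed lists.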
