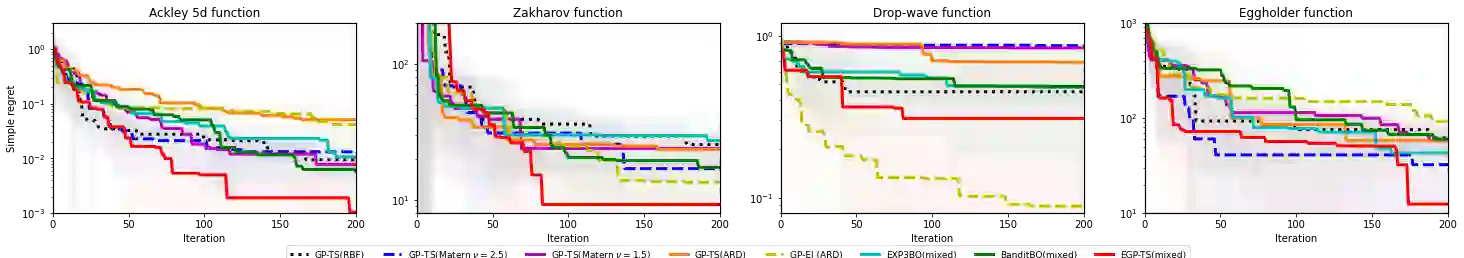

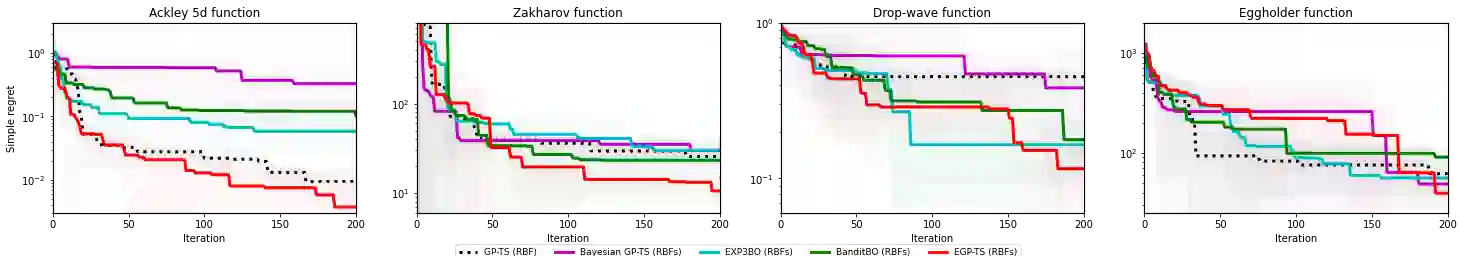

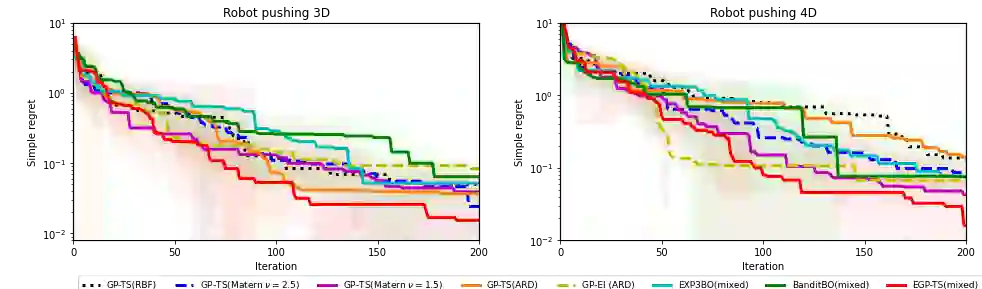

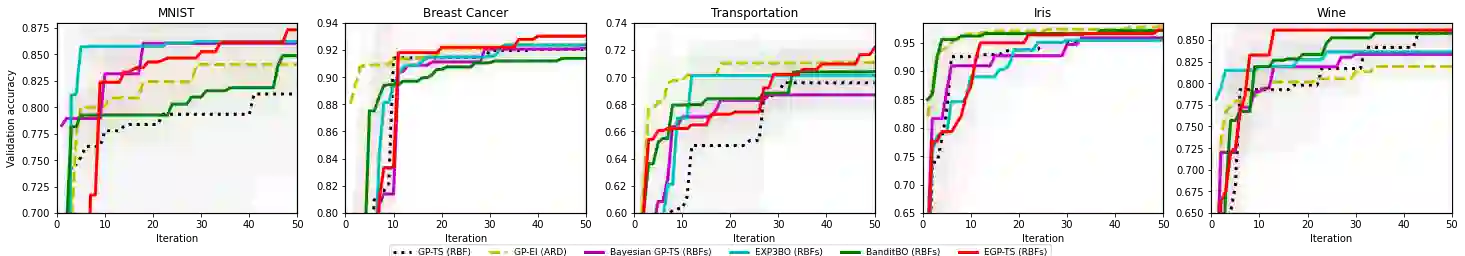

Bayesian optimization (BO) has well-documented merits for optimizing black-box functions with an expensive evaluation cost. Such functions emerge in applications as diverse as hyperparameter tuning, drug discovery, and robotics. BO hinges on a Bayesian surrogate model to sequentially select query points so as to balance exploration with exploitation of the search space. Most existing works rely on a single Gaussian process (GP) based surrogate model, where the kernel function form is typically preselected using domain knowledge. To bypass such a design process, this paper leverages an ensemble (E) of GPs to adaptively select the surrogate model fit on-the-fly, yielding a GP mixture posterior with enhanced expressiveness for the sought function. Acquisition of the next evaluation input using this EGP-based function posterior is then enabled by Thompson sampling (TS) that requires no additional design parameters. To endow function sampling with scalability, random feature-based kernel approximation is leveraged per GP model. The novel EGP-TS readily accommodates parallel operation. To further establish convergence of the proposed EGP-TS to the global optimum, analysis is conducted based on the notion of Bayesian regret for both sequential and parallel settings. Tests on synthetic functions and real-world applications showcase the merits of the proposed method.
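The pipeline described in the abstract (an ensemble of GPs, random-feature kernel approximation per model, and Thompson sampling from the resulting mixture posterior) can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes RBF kernels that differ only in lengthscale, a uniform prior over models, a fixed noise variance, a finite candidate set, and a batch recomputation of the posterior at every step. The names `make_rff`, `posterior`, and `egp_ts` and all default parameter values are hypothetical, chosen for illustration.

```python
import numpy as np

def make_rff(dim, n_features, lengthscale, rng):
    """Random Fourier features approximating an RBF kernel (hypothetical helper)."""
    W = rng.normal(scale=1.0 / lengthscale, size=(n_features, dim))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return lambda X: np.sqrt(2.0 / n_features) * np.cos(X @ W.T + b)

def posterior(Phi, y, noise_var):
    """Posterior of the weights theta (prior N(0, I)) in y = Phi @ theta + noise.

    Returns the posterior mean, the Cholesky factor of the posterior precision
    A, and the log marginal likelihood of y under this model.
    """
    n, D = Phi.shape
    A = Phi.T @ Phi / noise_var + np.eye(D)      # posterior precision
    L = np.linalg.cholesky(A)
    mu = np.linalg.solve(A, Phi.T @ y) / noise_var
    logdet_A = 2.0 * np.sum(np.log(np.diag(L)))
    # log N(y; 0, Phi Phi^T + noise_var I), via Woodbury and the determinant lemma
    quad = (y @ y - y @ Phi @ mu) / noise_var
    log_ev = -0.5 * (quad + logdet_A + n * np.log(2.0 * np.pi * noise_var))
    return mu, L, log_ev

def egp_ts(f, candidates, lengthscales=(0.05, 0.2, 1.0), n_features=50,
           noise_var=1e-2, n_init=3, n_iter=25, seed=0):
    """Maximize f over a finite candidate set with ensemble-GP Thompson sampling."""
    rng = np.random.default_rng(seed)
    dim = candidates.shape[1]
    feats = [make_rff(dim, n_features, ls, rng) for ls in lengthscales]
    idx = rng.choice(len(candidates), size=n_init, replace=False)
    X = candidates[idx]
    y = np.array([f(x) for x in X])
    for _ in range(n_iter):
        posts = [posterior(phi(X), y, noise_var) for phi in feats]
        # Model weights: uniform prior times marginal likelihood, normalized
        log_w = np.array([p[2] for p in posts])
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        # Thompson sampling: draw a model, then a function from its posterior
        m = rng.choice(len(feats), p=w)
        mu, L, _ = posts[m]
        theta = mu + np.linalg.solve(L.T, rng.standard_normal(n_features))
        x_next = candidates[np.argmax(feats[m](candidates) @ theta)]
        X = np.vstack([X, x_next])
        y = np.append(y, f(x_next))
    best = np.argmax(y)
    return X[best], y[best], w

# Toy 1-D objective with its maximum at x = 0.3 (illustrative only)
candidates = np.linspace(0.0, 1.0, 101)[:, None]
best_x, best_y, weights = egp_ts(lambda x: -(x[0] - 0.3) ** 2, candidates)
print(best_x, best_y, weights)
```

Because each sampled function is a finite linear combination of random features, drawing it costs only a Gaussian draw in feature space, which is what makes function sampling scalable. A parallel variant could draw several independent (model, weight) samples per round and evaluate the resulting query points concurrently.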
