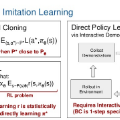

Approaches for teaching learning agents via human demonstrations have been widely studied and successfully applied to multiple domains. However, the majority of imitation learning work uses only behavioral information from the demonstrator, i.e., which actions were taken, and ignores other useful information. In particular, eye gaze can give valuable insight into where the demonstrator is allocating visual attention, and it holds the potential to improve agent performance and generalization. In this work, we propose Gaze Regularized Imitation Learning (GRIL), a novel context-aware imitation learning architecture that learns concurrently from both human demonstrations and eye gaze to solve tasks where visual attention provides important context. We apply GRIL to a visual navigation task in which an unmanned quadrotor is trained to search for and navigate to a target vehicle in a photorealistic simulated environment. We show that GRIL outperforms several state-of-the-art gaze-based imitation learning algorithms, simultaneously learns to predict human visual attention, and generalizes to scenarios not present in the training data. Supplemental videos can be found on the project page at https://sites.google.com/view/gaze-regularized-il/ and code at https://github.com/ravikt/gril.
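
To make the idea of learning concurrently from demonstrations and gaze concrete, the following is a minimal sketch of one way such a joint objective could be written. It is not taken from the GRIL code: the abstract does not specify the loss, so the mean-squared-error terms, the `gaze_weight` parameter, and all function names here are assumptions chosen for illustration.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def gaze_regularized_loss(
    pred_action: Sequence[float],
    demo_action: Sequence[float],
    pred_gaze: Sequence[float],
    human_gaze: Sequence[float],
    gaze_weight: float = 1.0,  # hypothetical trade-off weight
) -> float:
    """Hypothetical joint objective: imitation term plus a gaze term."""
    # Behavioral term: match the action the demonstrator took.
    action_loss = mse(pred_action, demo_action)
    # Auxiliary term (assumed form): match where the demonstrator looked.
    gaze_loss = mse(pred_gaze, human_gaze)
    return action_loss + gaze_weight * gaze_loss
```

Under this assumed form, setting `gaze_weight` to zero recovers plain behavioral cloning on actions, while larger values push the model to also predict human visual attention.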
