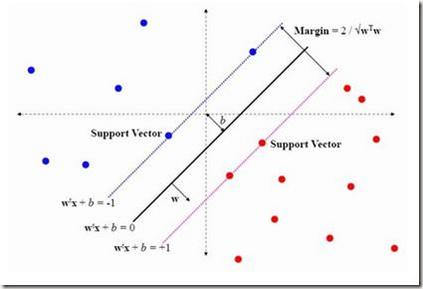

Quadratic programming is a ubiquitous prototype in convex programming. Many combinatorial optimization problems on graphs and many machine learning problems can be formulated as quadratic programs; Support Vector Machines (SVMs) are a prominent example. Linear and kernel SVMs were among the most popular models in machine learning for the three decades preceding the deep learning era. In general, a quadratic program has input size $\Theta(n^2)$, where $n$ is the number of variables. Assuming the Strong Exponential Time Hypothesis ($\textsf{SETH}$), it is known that no $O(n^{2-o(1)})$ algorithm exists (Backurs, Indyk, and Schmidt, NIPS'17). However, problems such as SVMs usually have much smaller inputs: one is given $n$ data points, each of dimension $d$, with $d \ll n$. Furthermore, SVMs are quadratic programs with only $O(1)$ linear constraints. This suggests that faster algorithms are feasible, provided the program exhibits certain underlying structure. In this work, we design the first nearly-linear time algorithm for solving quadratic programs whenever the quadratic objective has small treewidth or admits a low-rank factorization, and the number of linear constraints is small. Consequently, we obtain a variety of results for SVMs:

* For linear SVM, where the quadratic constraint matrix has treewidth $\tau$, we can solve the corresponding program in time $\widetilde O(n\tau^{(\omega+1)/2}\log(1/\epsilon))$;
* For linear SVM, where the quadratic constraint matrix admits a low-rank factorization of rank $k$, we can solve the corresponding program in time $\widetilde O(nk^{(\omega+1)/2}\log(1/\epsilon))$;
* For Gaussian kernel SVM, where the data dimension is $d = \Theta(\log n)$ and the squared dataset radius is small, we can solve it in time $O(n^{1+o(1)}\log(1/\epsilon))$. We also prove that when the squared dataset radius is large, $\Omega(n^{2-o(1)})$ time is required.
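
To illustrate why a low-rank factorization helps, recall the standard textbook dual of the soft-margin linear SVM: maximize $\sum_i \alpha_i - \tfrac12 \alpha^\top Q \alpha$ subject to $y^\top \alpha = 0$ and $0 \le \alpha \le C$, where $Q = \mathrm{diag}(y)\, X X^\top \mathrm{diag}(y)$ has rank at most $d$ and there is a single linear equality constraint. The sketch below is not the algorithm of this work; it only shows, under that assumed formulation, that a product $Qv$ can be computed in $O(nd)$ time without ever forming the $n \times n$ matrix. The function names `lowrank_matvec` and `dense_matvec` are hypothetical, chosen for this illustration.

```python
def lowrank_matvec(X, y, v):
    """Compute Q v for Q = diag(y) X X^T diag(y) in O(n d) time.

    X is a list of n data points (each a list of d floats), y holds the
    +1/-1 labels, and v is any length-n vector. Q is never materialized.
    """
    n, d = len(X), len(X[0])
    # Step 1: u = X^T (y * v), a d-dimensional vector, in O(n d) time.
    u = [0.0] * d
    for i in range(n):
        c = y[i] * v[i]
        for j in range(d):
            u[j] += c * X[i][j]
    # Step 2: (Q v)_i = y_i * <x_i, u>, again O(n d) time in total.
    return [y[i] * sum(X[i][j] * u[j] for j in range(d)) for i in range(n)]


def dense_matvec(X, y, v):
    """Reference computation that builds the full n x n matrix Q (O(n^2 d) time)."""
    n = len(X)
    Q = [[y[i] * y[j] * sum(a * b for a, b in zip(X[i], X[j]))
          for j in range(n)] for i in range(n)]
    return [sum(Q[i][j] * v[j] for j in range(n)) for i in range(n)]


# Tiny example: n = 3 points in dimension d = 2.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, -1.0, 1.0]
v = [1.0, 2.0, 3.0]
print(lowrank_matvec(X, y, v))  # [4.0, -1.0, 5.0]
print(dense_matvec(X, y, v))    # [4.0, -1.0, 5.0]
```

Both functions return the same vector, but only the first avoids the $\Theta(n^2)$ cost of writing down $Q$, which is the kind of structure the results above exploit.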
