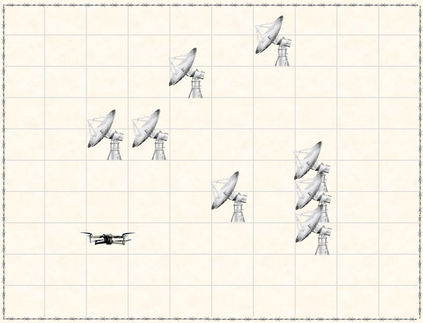

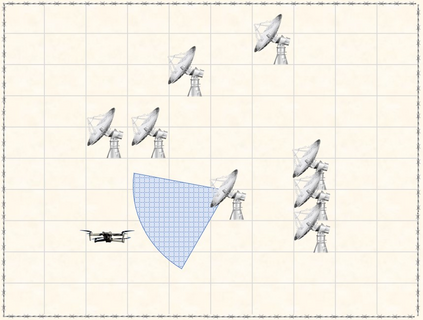

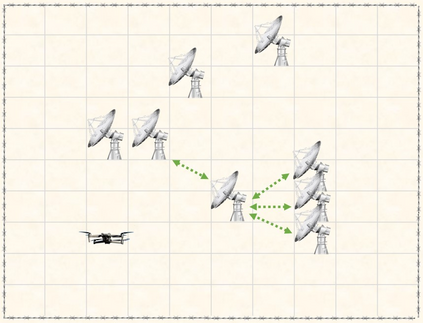

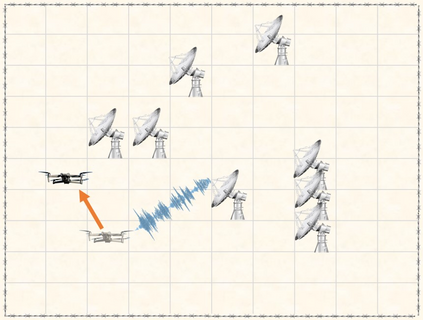

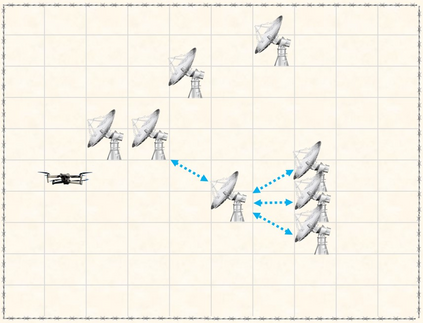

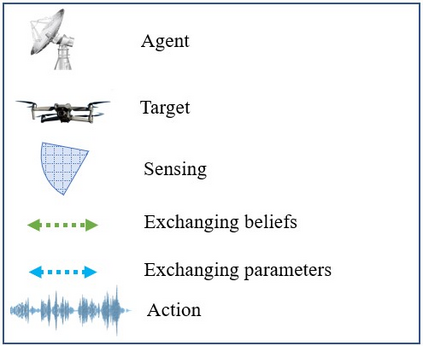

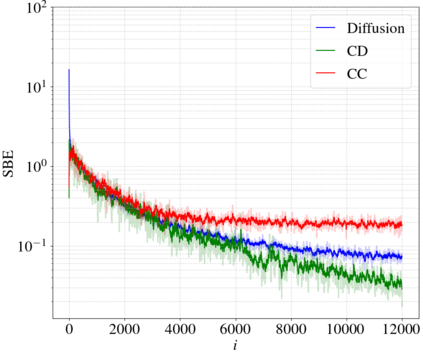

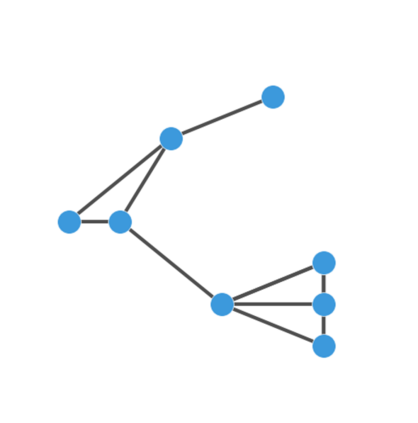

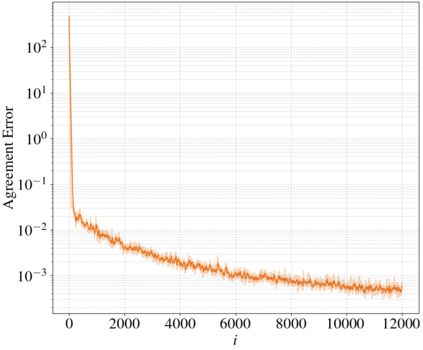

Most work on multi-agent reinforcement learning focuses on scenarios where the state of the environment is fully observable. In this work, we consider a cooperative policy evaluation task in which agents are not assumed to observe the environment state directly. Instead, agents only have access to noisy observations and to belief vectors. It is well known that finding global posterior distributions in multi-agent settings is generally NP-hard. As a remedy, we propose a fully decentralized belief forming strategy that relies on individual updates and on localized interactions over a communication network. In addition to exchanging beliefs, agents use the communication network to exchange value function parameter estimates. We show analytically that the proposed strategy allows information to diffuse over the network, which in turn keeps the difference between the agents' parameters and those of a centralized baseline bounded. A multi-sensor target tracking application is considered in the simulations.
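The abstract does not spell out the update rules, so the following is only a minimal sketch of how such a scheme could look, assuming a common adapt-then-combine pattern from the social learning and diffusion literature. Each agent first performs a local Bayesian (hidden Markov model filter) update with its own noisy observation, then combines its neighbors' intermediate beliefs by weighted geometric averaging; in parallel, agents run diffusion TD(0) on a value function that is assumed to be linear in the belief vector, averaging parameter estimates with the same combination matrix. The transition matrix `P`, likelihoods `L`, combination matrix `A`, rewards, step size, and all function names are hypothetical choices for illustration, not the authors' exact algorithm.

```python
import math
import random


def normalize(v):
    """Scale a nonnegative vector so that it sums to one."""
    total = sum(v)
    return [x / total for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def local_bayes_update(belief, transition, likelihood_col):
    """Adapt step (assumed): predict with the hidden-state transition model,
    then correct with this agent's likelihood of its own observation.
    transition[i][j] = P(next state j | state i); likelihood_col[j] = L_k(o_k | j)."""
    n = len(belief)
    predicted = [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]
    return normalize([likelihood_col[j] * predicted[j] for j in range(n)])


def geometric_combine(intermediate, weights_in):
    """Combine step (assumed): weighted geometric average of neighbors'
    intermediate beliefs; weights_in[l] = a_lk, summing to one over neighbors.
    Beliefs must be strictly positive so the logarithms are defined."""
    n = len(intermediate[0])
    log_mix = [sum(a * math.log(psi[j]) for a, psi in zip(weights_in, intermediate) if a > 0)
               for j in range(n)]
    m = max(log_mix)  # subtract the max before exponentiating for numerical stability
    return normalize([math.exp(x - m) for x in log_mix])


def diffusion_td_step(w, beliefs, next_beliefs, rewards, A, step, gamma):
    """Adapt-then-combine TD(0) with a hypothetical linear value V(mu) = w . mu.
    A[l][k] is the weight agent k assigns to neighbor l's estimate."""
    K = len(w)
    adapted = []
    for k in range(K):
        delta = rewards[k] + gamma * dot(w[k], next_beliefs[k]) - dot(w[k], beliefs[k])
        adapted.append([wi + step * delta * mi for wi, mi in zip(w[k], beliefs[k])])
    # Each agent averages the adapted parameters of its neighbors.
    return [[sum(A[l][k] * adapted[l][i] for l in range(K)) for i in range(len(w[0]))]
            for k in range(K)]


def simulate(num_steps=2000, seed=0):
    rng = random.Random(seed)
    K, n = 3, 3
    # Hypothetical 3-state hidden Markov chain induced by the evaluated policy.
    P = [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]]
    # Hypothetical binary-signal likelihoods L[k][o][s]; each agent is informative
    # mainly about one state, so no single agent can identify the state alone.
    L = [
        [[0.7, 0.2, 0.2], [0.3, 0.8, 0.8]],
        [[0.2, 0.7, 0.2], [0.8, 0.3, 0.8]],
        [[0.2, 0.2, 0.7], [0.8, 0.8, 0.3]],
    ]
    # Hypothetical doubly stochastic combination matrix (columns sum to one).
    A = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25], [0.25, 0.25, 0.5]]
    reward_of_state = [1.0, 0.0, -1.0]  # hypothetical reward, shared by all agents
    state = 0
    beliefs = [[1.0 / n] * n for _ in range(K)]
    w = [[0.0] * n for _ in range(K)]
    for _ in range(num_steps):
        next_state = rng.choices(range(n), weights=P[state])[0]
        # Each agent draws its own noisy observation of the new hidden state.
        obs = [rng.choices([0, 1], weights=[L[k][0][next_state], L[k][1][next_state]])[0]
               for k in range(K)]
        psi = [local_bayes_update(beliefs[k], P, L[k][obs[k]]) for k in range(K)]
        next_beliefs = [geometric_combine(psi, [A[l][k] for l in range(K)]) for k in range(K)]
        rewards = [reward_of_state[state]] * K
        w = diffusion_td_step(w, beliefs, next_beliefs, rewards, A, step=0.05, gamma=0.9)
        beliefs, state = next_beliefs, next_state
    return beliefs, w
```

Calling `beliefs, w = simulate()` returns each agent's final belief vector and value function parameters. Using the same matrix `A` for both beliefs and parameters is a design choice of this sketch; the geometric average for beliefs keeps the combined vector a valid probability distribution, while the arithmetic average for parameters is the standard diffusion combine step.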
