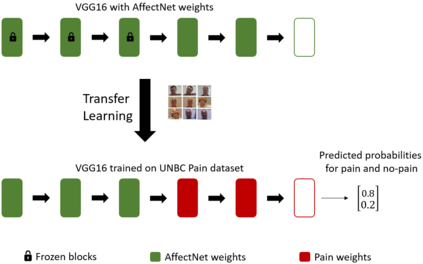

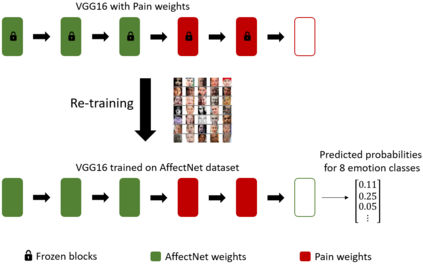

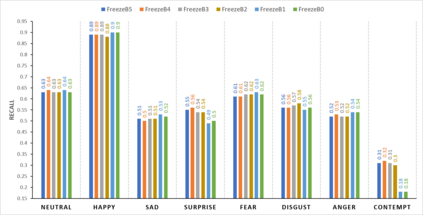

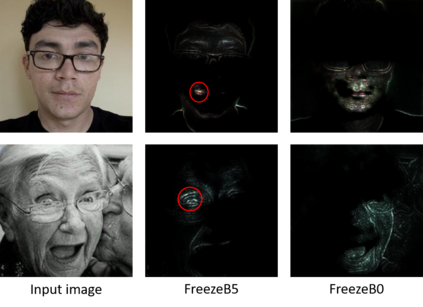

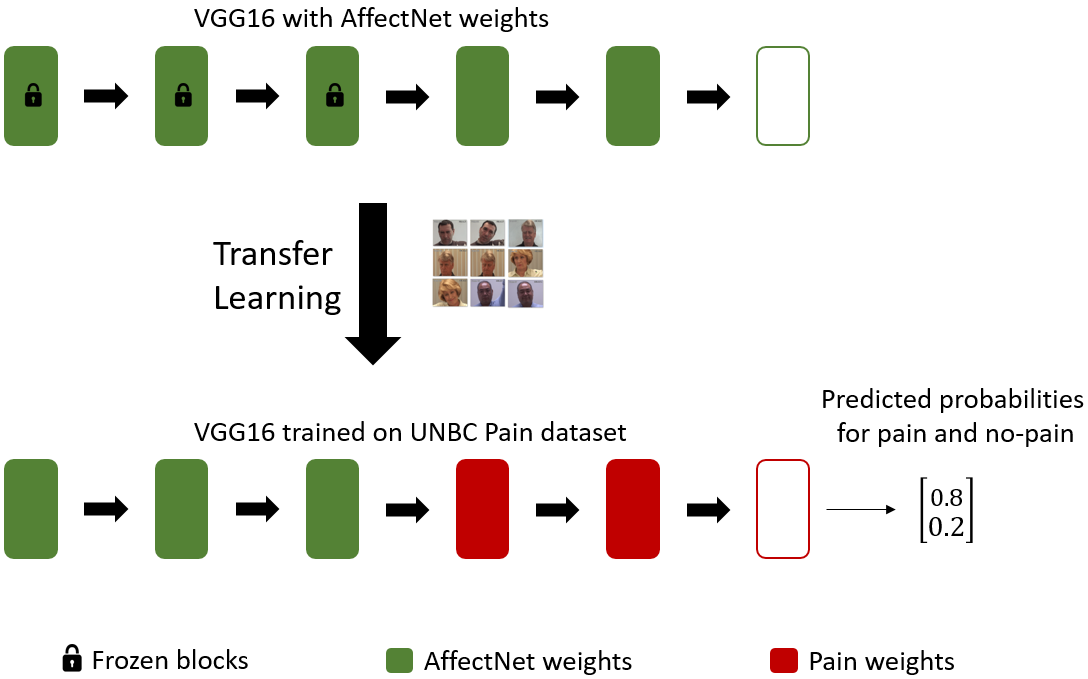

In this paper, we present a process for investigating the effects of transfer learning in automatic facial expression recognition, from emotions to pain. To this end, we first train a VGG16 convolutional neural network to discriminate between eight categorical emotions. We then fine-tune successively larger parts of this network to learn suitable representations for automatic pain recognition. Subsequently, we apply those fine-tuned representations to the original task of emotion recognition to investigate the differences in performance between the models. In a second step, we use Layer-wise Relevance Propagation to analyze samples that the model previously classified correctly but now misclassifies. Based on this analysis, we rely on visual inspection by a human observer to generate hypotheses about what the model has forgotten. Finally, we test those hypotheses quantitatively using concept embedding analysis methods. Our results show that the network that was fully fine-tuned for pain recognition indeed paid less attention to two action units that are relevant for expression recognition but not for pain recognition.
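The idea of fine-tuning "successively larger parts" of the network can be sketched as a schedule over the blocks of VGG16. This is a minimal, framework-free illustration, not the authors' implementation. The block names, the helper functions `fine_tuning_schedule` and `trainable_layer_count`, and the choice to grow the trainable part from the output toward the input are all assumptions made for illustration; the abstract does not state which layers were unfrozen at each stage.

```python
# VGG16 weight layers grouped into blocks, ordered from input to output:
# five convolutional blocks (13 conv layers) and the fully connected head (3 layers).
# The block names are hypothetical labels chosen for this sketch.
VGG16_BLOCKS = [
    ("conv_block1", 2),
    ("conv_block2", 2),
    ("conv_block3", 3),
    ("conv_block4", 3),
    ("conv_block5", 3),
    ("classifier", 3),
]


def fine_tuning_schedule(blocks):
    """Return one entry per stage: the names of the blocks left trainable.

    Assumption (not from the paper): stage k unfreezes the last k blocks,
    so the trainable part grows from the output toward the input.
    """
    names = [name for name, _ in blocks]
    return [names[-k:] for k in range(1, len(names) + 1)]


def trainable_layer_count(blocks, trainable_names):
    """Count the weight layers contained in the trainable blocks."""
    return sum(n for name, n in blocks if name in trainable_names)


if __name__ == "__main__":
    for stage, trainable in enumerate(fine_tuning_schedule(VGG16_BLOCKS), start=1):
        count = trainable_layer_count(VGG16_BLOCKS, trainable)
        print(f"stage {stage}: fine-tune {trainable} ({count} weight layers)")
```

In a deep learning framework, each stage would correspond to freezing the parameters of every block not listed and training the remainder on the pain recognition data. The last stage, in which all blocks are trainable, corresponds to the fully fine-tuned network mentioned in the results.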
