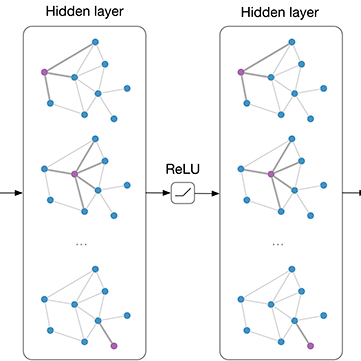

Increasing the depth of GCNs, which is expected to provide more expressivity, has been shown to hurt performance, especially on node classification. The main cause is over-smoothing, which drives the output of a GCN towards a space that contains little information distinguishing the nodes, leading to poor expressivity. Several works have proposed refinements to the architecture of deep GCNs, but it remains unknown in theory whether these refinements relieve over-smoothing. In this paper, we first theoretically analyze how general GCNs behave as depth increases, including generic GCN, GCN with bias, ResGCN, and APPNP. We find that all these models are characterized by a universal process: all nodes converge to a cuboid. Building on this theorem, we propose DropEdge, which alleviates over-smoothing by randomly removing a certain number of edges at each training epoch. Theoretically, DropEdge either reduces the convergence speed of over-smoothing or relieves the information loss caused by dimension collapse. Experiments on a simulated dataset visualize the difference in over-smoothing between different GCNs. Moreover, extensive experiments on several real benchmarks show that DropEdge consistently improves the performance of a variety of both shallow and deep GCNs.
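To make the edge-removal step concrete, the following is a minimal NumPy sketch of how a DropEdge-style sampler could look. It is an illustration under stated assumptions, not the authors' implementation: the names `drop_edge`, `normalized_adjacency`, and `drop_rate` are hypothetical, the graph is assumed to be undirected and given as an edge list, and the symmetric normalization with self-loops is assumed to be recomputed on each sampled graph.

```python
import numpy as np


def drop_edge(edges, drop_rate, rng):
    """Return a random subset of the edge list with a fraction `drop_rate` removed.

    Hypothetical helper: `edges` is an (E, 2) array-like of undirected edges.
    """
    if not 0.0 <= drop_rate <= 1.0:
        raise ValueError("drop_rate must lie in [0, 1]")
    edges = np.asarray(edges)
    num_edges = edges.shape[0]
    num_keep = num_edges - int(round(drop_rate * num_edges))
    # Sample the surviving edges uniformly without replacement.
    keep_idx = rng.choice(num_edges, size=num_keep, replace=False)
    return edges[np.sort(keep_idx)]


def normalized_adjacency(edges, num_nodes):
    """Build D^{-1/2} (A + I) D^{-1/2} from an undirected edge list.

    Assumption: self-loops are added before normalization, so every
    node has degree >= 1 and the inverse square root is well defined.
    """
    adj = np.eye(num_nodes)
    for u, v in edges:
        adj[u, v] = 1.0
        adj[v, u] = 1.0
    deg_inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    return adj * deg_inv_sqrt[:, None] * deg_inv_sqrt[None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]  # toy graph, 4 nodes
    for epoch in range(3):
        # A fresh random subgraph is drawn at every training epoch.
        sampled = drop_edge(edges, drop_rate=0.4, rng=rng)
        a_hat = normalized_adjacency(sampled, num_nodes=4)
        print(epoch, sampled.tolist())
```

In this sketch the full edge set would still be used at evaluation time; only the training epochs see the randomly thinned graph.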
