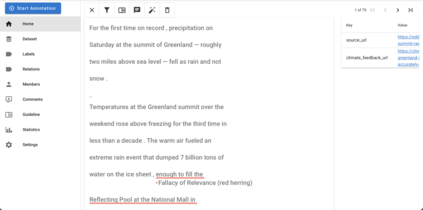

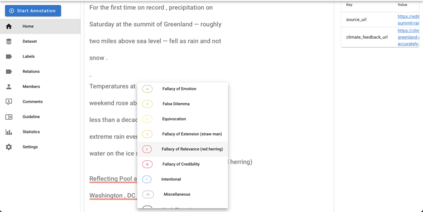

Reasoning is central to human intelligence. However, fallacious arguments are common, and some exacerbate problems such as the spread of misinformation about climate change. In this paper, we propose the task of logical fallacy detection and provide a new dataset (Logic) of logical fallacies generally found in text, together with an additional challenge set for detecting logical fallacies in climate change claims (LogicClimate). Detecting logical fallacies is a hard problem because the model must understand the underlying logical structure of the argument. We find that existing pretrained large language models perform poorly on this task. In contrast, we show that a simple structure-aware classifier outperforms the best language model by 5.46% on Logic and 4.51% on LogicClimate. We encourage future work to explore this task because (a) it can serve as a new reasoning challenge for language models, and (b) it has potential applications in tackling the spread of misinformation. Our dataset and code are available at https://github.com/causalNLP/logical-fallacy.
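
To make "the underlying logical structure of the argument" concrete, here is a minimal sketch. It assumes, for illustration only, that structure can be approximated by replacing every distinct content word with a numbered placeholder, so that two arguments with the same shape but different topics become the same string. The function `mask_content_words`, the `FUNCTION_WORDS` list, and the `[MSKn]` placeholder format are hypothetical. This is not the authors' structure-aware classifier. It only shows the kind of input abstraction that lets a classifier look past topic words.

```python
import re

# Hypothetical, deliberately tiny function-word list for this illustration.
# Any alphabetic token not in it is treated as a content word.
FUNCTION_WORDS = {
    "a", "an", "the", "is", "are", "was", "were", "be", "it", "this", "that",
    "if", "then", "so", "therefore", "because", "and", "or", "not",
    "all", "some", "every", "of", "to", "in",
}


def mask_content_words(text: str) -> str:
    """Replace each distinct content word with a numbered placeholder.

    Repeated words (compared case-insensitively) share one placeholder, so
    the output keeps only the argument's skeleton: its function words,
    punctuation, and which content words recur.
    """
    tokens = re.findall(r"\w+|[^\w\s]", text)  # words and punctuation marks
    placeholders: dict[str, str] = {}
    masked = []
    for tok in tokens:
        key = tok.lower()
        if tok.isalpha() and key not in FUNCTION_WORDS:
            # First occurrence of this content word gets the next number.
            if key not in placeholders:
                placeholders[key] = f"[MSK{len(placeholders) + 1}]"
            masked.append(placeholders[key])
        else:
            masked.append(tok)  # keep function words and punctuation as-is
    return " ".join(masked)


if __name__ == "__main__":
    a = mask_content_words("The book is true because the book says it is true.")
    b = mask_content_words("The law is fair because the law claims it is fair.")
    print(a)       # The [MSK1] is [MSK2] because the [MSK1] [MSK3] it is [MSK2] .
    print(a == b)  # True: both circular arguments share one skeleton
```

Both inputs are circular arguments on different topics, and after masking they are identical. A downstream classifier that sees the masked form can therefore tie the label to the argument's shape rather than to its subject matter. A real system would need more than a fixed word list (for example, lemmatization so that "man" and "men" match), which this sketch does not attempt.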
