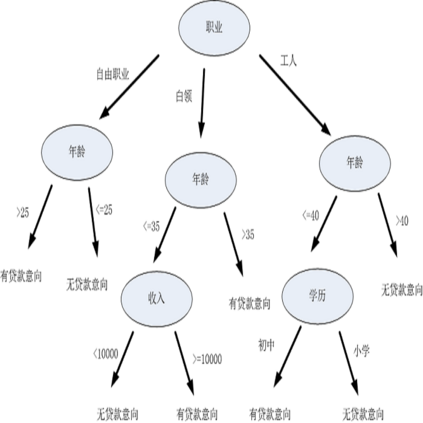

Classification and regression trees (CART) and random forests (RF) are arguably the most popular pair of statistical learning methods. However, their statistical consistency can be proved only under very restrictive assumptions on the underlying regression function. As an extension of standard CART, Breiman (1984) suggested using linear combinations of predictors as splitting variables. The method became known as the oblique decision tree (ODT) and has received considerable attention. ODT tends to perform better than CART and requires fewer partitions. In this paper, we further show that ODT is consistent for very general regression functions, as long as they are continuous. We also prove the consistency of ODT-based random forests (ODRF) that use either a fixed-size or a random-size subset of features in feature bagging: the latter is guaranteed to be consistent for general regression functions, whereas the former is consistent only for functions with specific structures. We refine the existing software package according to the established theory, and our numerical experiments show that ODRF offers a noticeable overall improvement over RF and other decision forests.
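
To make the difference between an axis-aligned and an oblique split concrete, the following minimal sketch compares the two on a toy data set whose response depends on the sum of two predictors. It is an illustration only, not the paper's algorithm or the ODRF package: the function name `split_sse`, the data, the weights, and the thresholds are all hypothetical choices made for this example.

```python
def split_sse(X, y, weights, threshold):
    """Sum of squared errors after splitting on (weights . x) <= threshold.

    An axis-aligned (CART-style) split is the special case in which
    `weights` has a single non-zero entry; an oblique split allows any
    linear combination of the predictors.
    """
    left, right = [], []
    for row, target in zip(X, y):
        projection = sum(w * v for w, v in zip(weights, row))
        (left if projection <= threshold else right).append(target)

    sse = 0.0
    for group in (left, right):
        if group:  # skip an empty child node
            mean = sum(group) / len(group)
            sse += sum((t - mean) ** 2 for t in group)
    return sse


# Hypothetical toy data: y = 1 when x1 + x2 > 1, otherwise 0.
X = [(0.2, 0.2), (0.9, 0.3), (0.3, 0.9), (0.8, 0.8), (0.1, 0.6), (0.6, 0.1)]
y = [0, 1, 1, 1, 0, 0]

# Axis-aligned split on x1 alone (assumed threshold 0.5).
axis_sse = split_sse(X, y, weights=(1.0, 0.0), threshold=0.5)

# Oblique split on the linear combination x1 + x2 (assumed threshold 1.0).
oblique_sse = split_sse(X, y, weights=(1.0, 1.0), threshold=1.0)

print("axis-aligned SSE:", axis_sse)
print("oblique SSE:", oblique_sse)
```

On this toy data a single oblique split separates the two response levels exactly, whereas no single threshold on `x1` or `x2` alone can do so, which is the intuition behind ODT needing fewer partitions than CART.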
