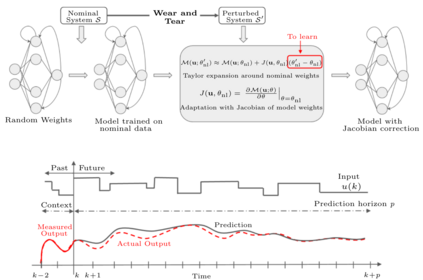

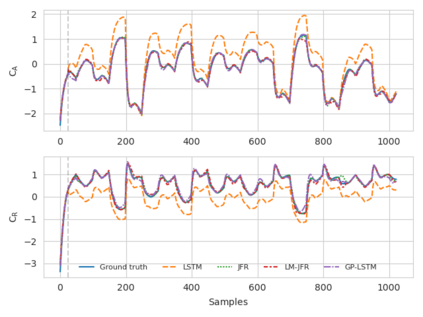

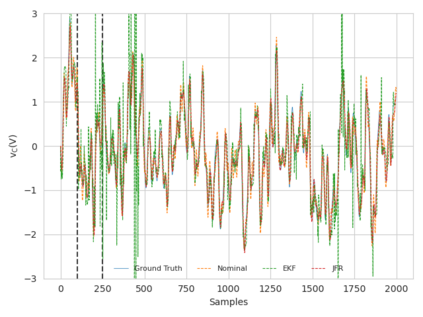

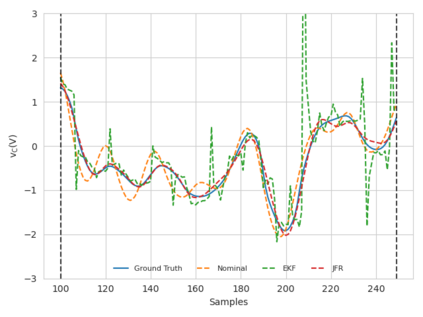

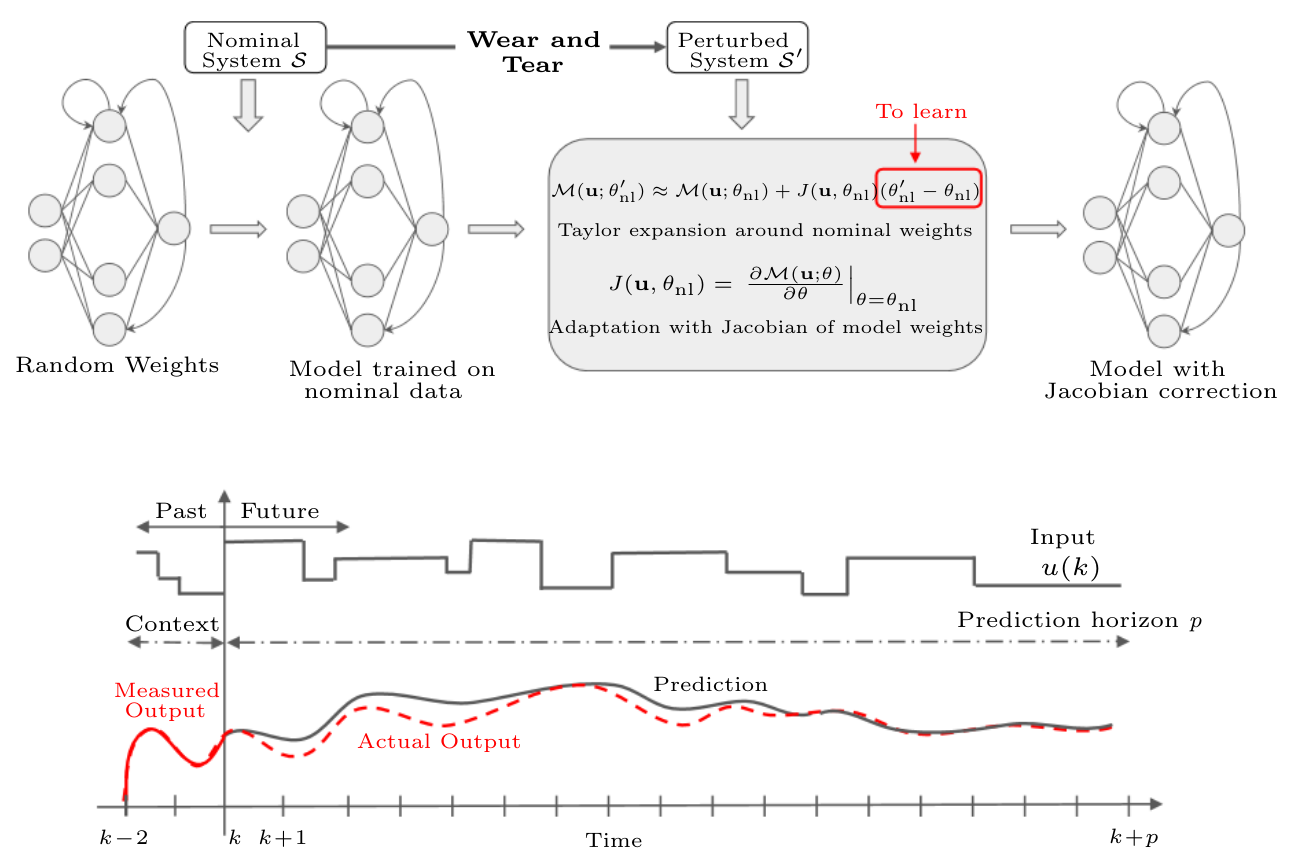

This paper presents a transfer learning approach that enables fast and efficient adaptation of Recurrent Neural Network (RNN) models of dynamical systems. A nominal RNN model is first identified using available measurements. The system dynamics are then assumed to change, leading to an unacceptable degradation of the nominal model's performance on the perturbed system. To cope with this mismatch, the model is augmented with an additive correction term trained on fresh data from the new dynamic regime. The correction term is learned through a Jacobian Feature Regression (JFR) method, defined in terms of the features spanned by the model's Jacobian with respect to its nominal parameters. A non-parametric view of the approach is also proposed, which extends recent work on Gaussian Processes (GP) with the Neural Tangent Kernel (NTK-GP) to the RNN case (RNTK-GP). This non-parametric formulation can be more efficient for very large networks or when only a few data points are available. Implementation aspects for fast and efficient computation of the correction term, as well as initial state estimation for the RNN model, are described. Numerical examples show the effectiveness of the proposed methodology in the presence of significant system variations.

翻译:本文介绍了一种转让学习方法,使动态系统的经常性神经网络模型能够快速和有效地适应。首先使用现有的测量方法确定一个名义的RNN模型。然后假设系统动态会发生变化,从而导致扰动系统的名义模型性能出现不可接受的退化。为了应对不匹配,该模型将增加一个根据新的动态系统新数据培训的添加性更正术语。纠正术语是通过Jacobian地貌回归(JFR)方法学习的,该方法的定义是该模型Jacobian的表面参数所覆盖的特征。还提出了一种非参数性的观点,该方法将Neural Tangent Kernel(NTK-GP)最近关于高斯进程(GP)的工作延伸至RNNT(RNTK-G)案。这对于非常庞大的网络或只有很少的数据点可以使用,这可能会更有效率。对纠正术语的快速和高效计算,以及RNN模型的初步状态估算。数字示例显示了拟议方法在出现重大系统变异的情况下的有效性。