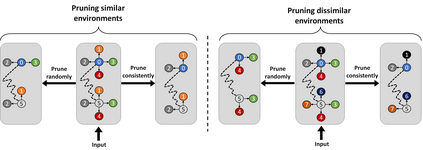

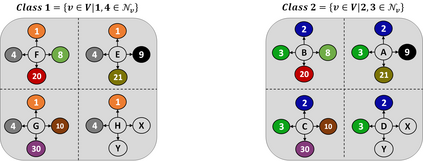

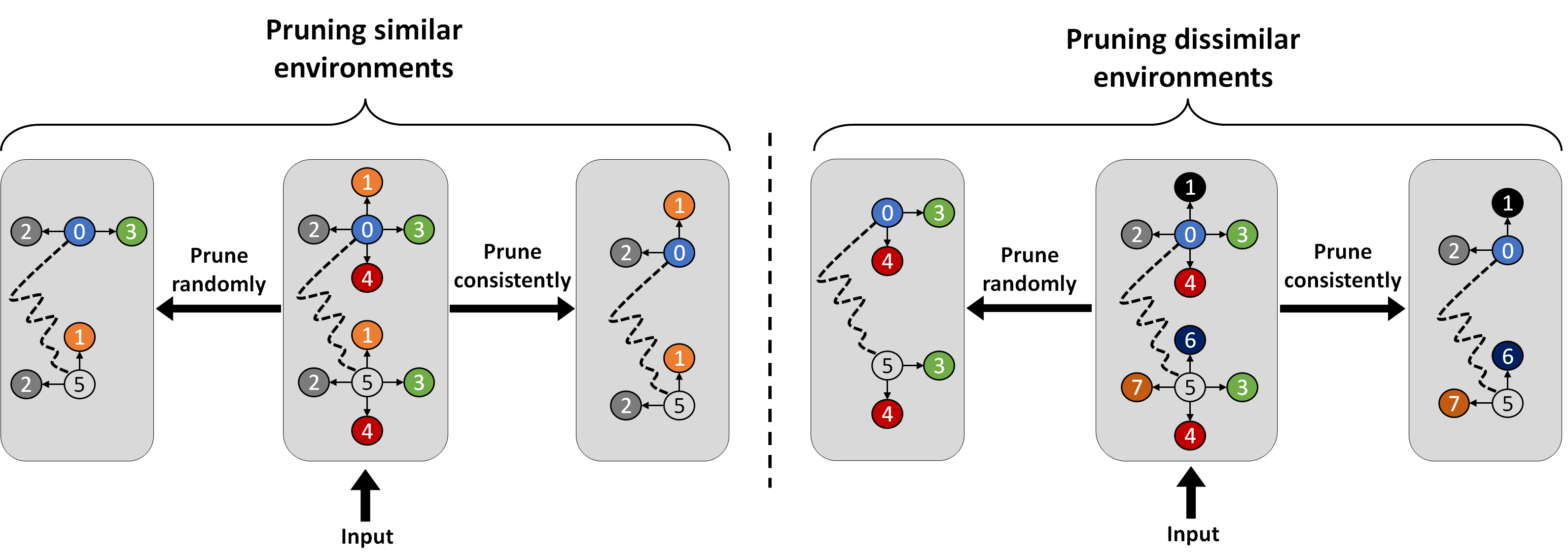

Graph Neural Networks (GNNs) have emerged as highly successful tools for graph-related tasks. However, real-world problems involve very large graphs, and the compute resources needed to fit GNNs to those problems grow rapidly. Moreover, the noisy nature and size of real-world graphs cause GNNs to over-fit if not regularized properly. Surprisingly, recent works show that large graphs often involve many redundant components that can be removed without compromising the performance too much. This includes node or edge removals during inference through GNNs layers or as a pre-processing step that sparsifies the input graph. This intriguing phenomenon enables the development of state-of-the-art GNNs that are both efficient and accurate. In this paper, we take a further step towards demystifying this phenomenon and propose a systematic method called Locality-Sensitive Pruning (LSP) for graph pruning based on Locality-Sensitive Hashing. We aim to sparsify a graph so that similar local environments of the original graph result in similar environments in the resulting sparsified graph, which is an essential feature for graph-related tasks. To justify the application of pruning based on local graph properties, we exemplify the advantage of applying pruning based on locality properties over other pruning strategies in various scenarios. Extensive experiments on synthetic and real-world datasets demonstrate the superiority of LSP, which removes a significant amount of edges from large graphs without compromising the performance, accompanied by a considerable acceleration.

翻译:神经网络(GNNs)已成为与图形有关的任务非常成功的工具。 然而,现实世界的问题涉及到非常大的图表,而让 GNNs适应这些问题所需要的计算资源也迅速增长。 此外,真实世界图形的噪音性质和大小使得GNNs如果没有适当地正规化,就会过度使用。令人惊讶的是,最近的工程显示,大图表往往包含许多多余的部件,可以在不过分损害性能的情况下删除。这包括通过 GNNs 层的推断或作为放大输入图的预处理步骤来进行节点或边缘清除。这种令人着迷的现象使得能够开发出高效和准确的GNNNs。此外,在本文中,我们进一步迈出了一步,去掉这个现象的神秘性,并提出了一个系统化的方法,即根据本地-敏感性散变速度进行图形调整。我们的目标是通过将原始图表的本地环境的相似的本地环境环境进行压缩。