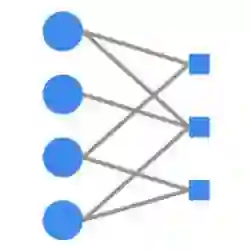

A fundamental computation for statistical inference and accurate decision-making is to compute the marginal probabilities or most probable states of task-relevant variables. Probabilistic graphical models can efficiently represent the structure of such complex data, but performing these inferences is generally difficult. Message-passing algorithms, such as belief propagation, are a natural way to disseminate evidence amongst correlated variables while exploiting the graph structure, but these algorithms can struggle when the conditional dependency graphs contain loops. Here we use Graph Neural Networks (GNNs) to learn a message-passing algorithm that solves these inference tasks. We first show that the architecture of GNNs is well-matched to inference tasks. We then demonstrate the efficacy of this inference approach by training GNNs on a collection of graphical models and showing that they substantially outperform belief propagation on loopy graphs. Our message-passing algorithms generalize out of the training set to larger graphs and graphs with different structure.
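To make the loopy-graph problem concrete, here is a minimal sketch, not the paper's method. It runs sum-product belief propagation on a tiny binary pairwise model and compares the result against brute-force marginals. The potentials, the three-node graphs, and the function names `loopy_bp` and `exact_marginals` are illustrative assumptions. On a tree (a chain), BP's marginals match the exact ones. Closing the chain into a single loop makes them diverge, and this is the regime where the abstract reports that learned GNN message passing outperforms BP.

```python
import itertools
import numpy as np

def exact_marginals(unary, pairwise, edges):
    """Brute-force marginals by enumerating all 2^n joint states (tiny graphs only)."""
    n = len(unary)
    marg = np.zeros((n, 2))
    for x in itertools.product([0, 1], repeat=n):
        p = np.prod([unary[i][x[i]] for i in range(n)])
        for (i, j) in edges:
            p *= pairwise[(i, j)][x[i], x[j]]  # pairwise[(i, j)][x_i, x_j]
        for i in range(n):
            marg[i, x[i]] += p
    return marg / marg.sum(axis=1, keepdims=True)

def loopy_bp(unary, pairwise, edges, iters=100, tol=1e-10):
    """Sum-product belief propagation with synchronous (flooding) updates."""
    n = len(unary)
    nbrs = {i: [] for i in range(n)}
    psi = {}
    for (i, j) in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)
        psi[(i, j)] = pairwise[(i, j)]    # indexed [x_i, x_j]
        psi[(j, i)] = pairwise[(i, j)].T  # indexed [x_j, x_i]
    # One message per directed edge i -> j, initialised to uniform.
    msgs = {(i, j): np.ones(2) / 2 for i in range(n) for j in nbrs[i]}
    for _ in range(iters):
        new = {}
        for (i, j) in msgs:
            # Node potential times all incoming messages except the one from j.
            h = unary[i].copy()
            for k in nbrs[i]:
                if k != j:
                    h = h * msgs[(k, i)]
            m = psi[(i, j)].T @ h  # m[x_j] = sum over x_i of psi(x_i, x_j) * h(x_i)
            new[(i, j)] = m / m.sum()  # normalise for numerical stability
        delta = max(np.abs(new[e] - msgs[e]).max() for e in msgs)
        msgs = new
        if delta < tol:
            break
    # Belief = node potential times all incoming messages, normalised.
    beliefs = np.array([unary[i] * np.prod([msgs[(k, i)] for k in nbrs[i]], axis=0)
                        for i in range(n)])
    return beliefs / beliefs.sum(axis=1, keepdims=True)

J = np.array([[2.0, 1.0], [1.0, 2.0]])  # attractive coupling: neighbours prefer equal states
unary = [np.array([0.7, 0.3]), np.array([0.5, 0.5]), np.array([0.5, 0.5])]

chain = [(0, 1), (1, 2)]          # tree: BP is exact
cycle = [(0, 1), (1, 2), (0, 2)]  # one loop: BP is only approximate

for name, edges in [("chain", chain), ("cycle", cycle)]:
    pw = {e: J for e in edges}
    err = np.abs(loopy_bp(unary, pw, edges) - exact_marginals(unary, pw, edges)).max()
    print(f"{name}: max |BP - exact| = {err:.2e}")
```

The chain error is at floating-point level, while the cycle error is clearly nonzero. With these attractive couplings, BP is overconfident on the loop because evidence circulates around the cycle and is counted more than once. A learned approach, as described above, would replace the fixed sum-product update with a trained GNN message function. This sketch does not attempt that.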

翻译:统计推理和准确决策的基本计算是计算任务相关变量的边际概率或最可能状态。 概率图形模型可以有效地代表这种复杂数据的结构, 但通常很难进行这些推理。 信通算法,例如信仰传播,是利用图形结构在相关变量之间传播证据的自然方法, 但是这些算法在有条件的依赖性图形包含环形时会挣扎。 在这里, 我们使用图形神经网络( GNNS) 学习一种解决这些推理任务的电传算法。 我们首先显示, GNNS 的架构非常适合进行推理任务。 然后我们通过训练GNNS 收集图形模型来展示这种推理方法的功效, 并显示它们大大超过在圆形图上传播的信念。 我们的电通算算法将培训内容概括为结构不同的较大图形和图表。