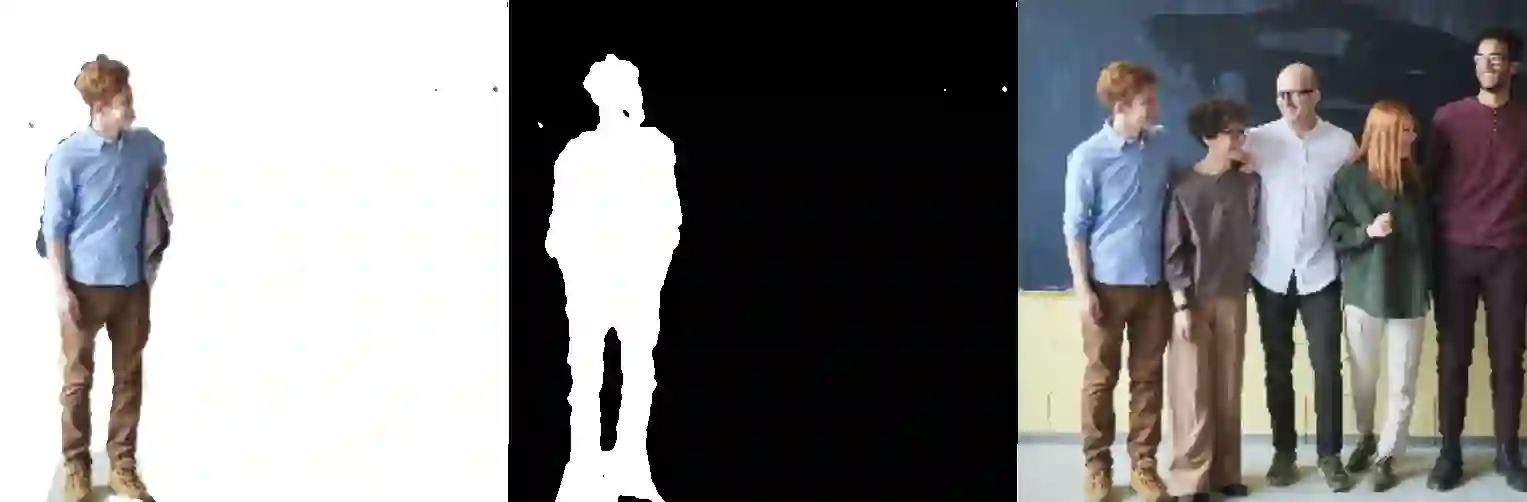

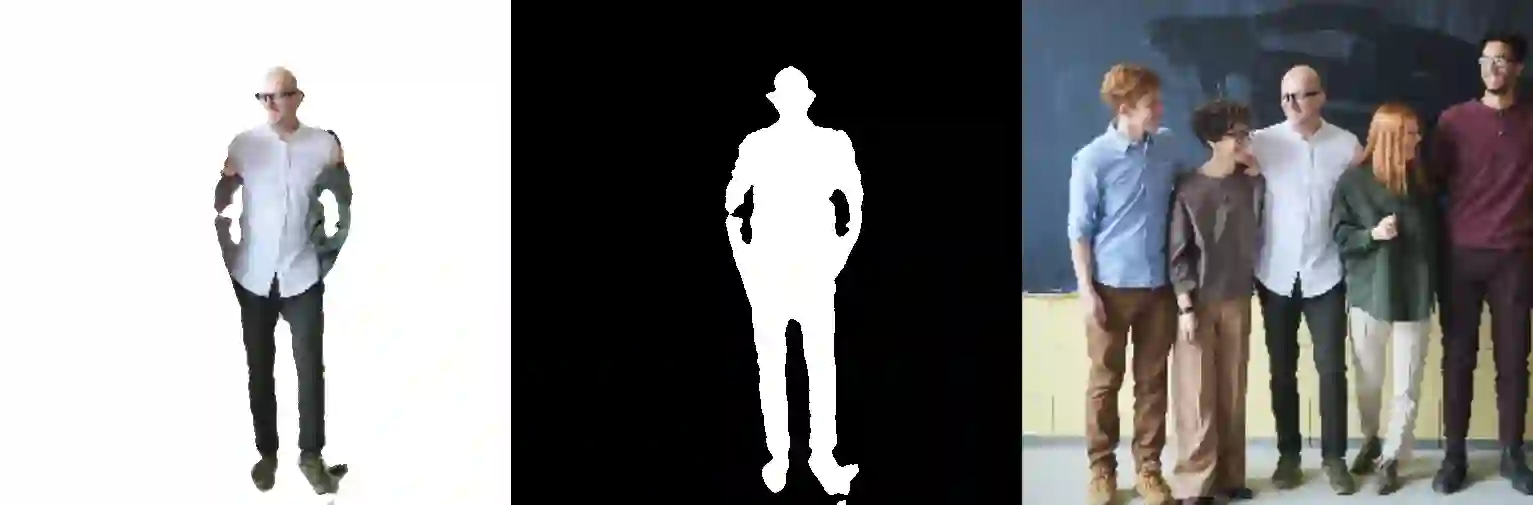

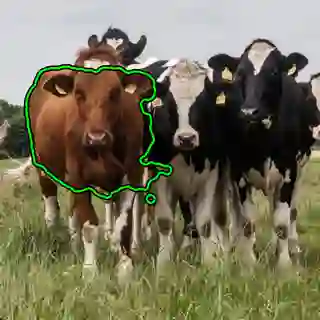

Recent diffusion-based generative models, combined with vision-language models, can create realistic images from natural language prompts. Although these models are trained on large, internet-scale datasets, they are not explicitly exposed to any semantic localization or grounding information. Most current approaches to localization or grounding rely on human-annotated localization information in the form of bounding boxes or segmentation masks. The exceptions are a few unsupervised methods that use architectures or loss functions geared towards localization, but these must be trained separately. In this work, we explore how off-the-shelf diffusion models, trained with no exposure to such localization information, can ground various semantic phrases without segmentation-specific re-training. We introduce an inference-time optimization process that generates segmentation masks conditioned on natural language. We evaluate our proposed method, Peekaboo, on unsupervised semantic segmentation using the Pascal VOC dataset and on referring segmentation using the RefCOCO dataset. In summary, we present the first zero-shot, open-vocabulary, unsupervised (no localization information) semantic grounding technique that leverages diffusion-based generative models without re-training. Our code will be released publicly.

翻译:与视觉语言模型相结合的最近基于传播的基因模型能够创造出来自自然语言提示的现实图像。 虽然这些模型是在大型互联网规模的数据集上培训的, 但是这些经过预先训练的模型并不直接引入任何语义本地化或定位。 大多数目前本地化或定位的方法都依赖于以捆绑框或隔断面遮罩的形式提供的附加人注的本地化信息。 这些例外是利用建筑或损失功能适应本地化的少数不受监督的方法, 但是它们需要单独培训。 在这项工作中, 我们研究如何在没有接触这种本地化信息的情况下, 将各种不公开的语义词句植入地下, 而没有区分特定的再培训。 引入了一个推论时间优化进程, 能够产生以自然语言为条件的局部化遮蔽。 我们评估了我们在Pascal VOC 数据集中使用不超超超超语义语义语义的语系分割法。 此外, 我们还评估了在RefCO数据集上提及分解的现式传播模式。 在摘要中, 我们展示了一种不偏向性本地的磁化的分解模型。