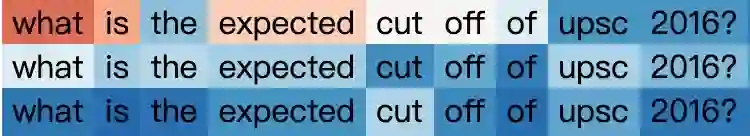

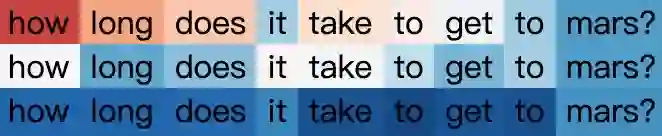

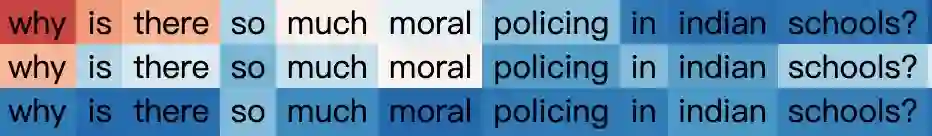

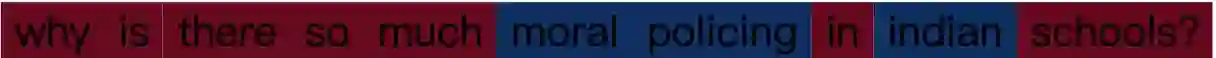

While Transformers have had significant success in paragraph generation, they treat sentences as linear sequences of tokens and often neglect their hierarchical information. Prior work has shown that decomposing the levels of granularity (e.g., word, phrase, or sentence) of input tokens yields substantial improvements, which suggests that Transformers can be enhanced through more fine-grained modeling of granularity. In this work, we propose a continuous decomposition of granularity for neural paraphrase generation (C-DNPG). To incorporate granularity into sentence encoding efficiently, C-DNPG introduces a granularity-aware attention (GA-Attention) mechanism that extends multi-head self-attention with: 1) a granularity head that automatically infers the hierarchical structure of a sentence by neurally estimating the granularity level of each input token; and 2) two novel attention masks, namely granularity resonance and granularity scope, that encode granularity into attention. Experiments on two benchmarks, Quora question pairs and Twitter URLs, show that C-DNPG outperforms baseline models by a remarkable margin and achieves state-of-the-art results on many metrics. Qualitative analysis shows that C-DNPG effectively captures fine-grained levels of granularity.
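The abstract does not give the formulas behind GA-Attention, so the following is only a rough single-head sketch of the idea in NumPy, not the authors' implementation. Everything specific in it is an assumption made for illustration: the function name `ga_attention`, the sigmoid granularity head with weight vector `w_g`, the resonance mask defined as `1 - |g_i - g_j|` (tokens with similar granularity attend to each other more), and the scope mask defined as a soft window whose radius grows with a token's granularity.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def ga_attention(X, Wq, Wk, Wv, w_g):
    """Hypothetical single-head granularity-aware attention.

    X: (n, d) token representations; Wq, Wk, Wv: (d, d_k) projections;
    w_g: (d,) weights of an assumed linear granularity head.
    Returns (output, attention weights, per-token granularity).
    """
    n, _ = X.shape

    # Granularity head (assumed form): one continuous level in (0, 1) per token.
    g = 1.0 / (1.0 + np.exp(-(X @ w_g)))

    # Resonance mask (assumed form): close to 1 when two tokens have
    # similar granularity levels, smaller when the levels differ.
    resonance = 1.0 - np.abs(g[:, None] - g[None, :])

    # Scope mask (assumed form): token i softly sees positions within a
    # radius that grows with its granularity, from 1 up to n.
    positions = np.arange(n)
    dist = np.abs(positions[:, None] - positions[None, :])
    radius = 1.0 + g * (n - 1)
    scope = 1.0 / (1.0 + np.exp(dist - radius[:, None]))

    # Standard scaled dot-product attention, then reweight by both masks
    # and renormalize each row so the weights sum to 1 again.
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    attn = softmax(Q @ K.T / np.sqrt(K.shape[-1]))
    attn = attn * resonance * scope
    attn = attn / attn.sum(axis=-1, keepdims=True)
    return attn @ V, attn, g


# Example usage with random inputs (5 tokens, model width 8).
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))
Wq, Wk, Wv = (rng.normal(size=(8, 8)) for _ in range(3))
w_g = rng.normal(size=8)
out, attn, g = ga_attention(X, Wq, Wk, Wv, w_g)
```

In this sketch the masks are applied multiplicatively after the softmax and the rows are renormalized; applying them additively to the logits before the softmax would be an equally plausible design, and the abstract does not say which the paper uses.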

