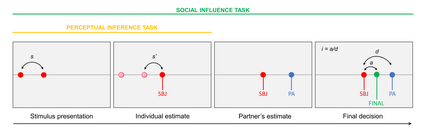

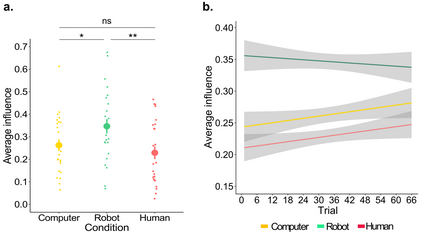

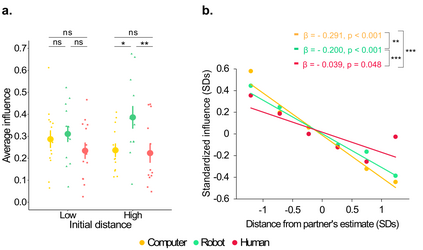

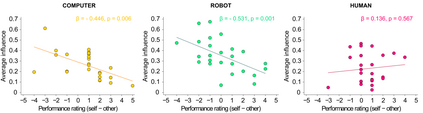

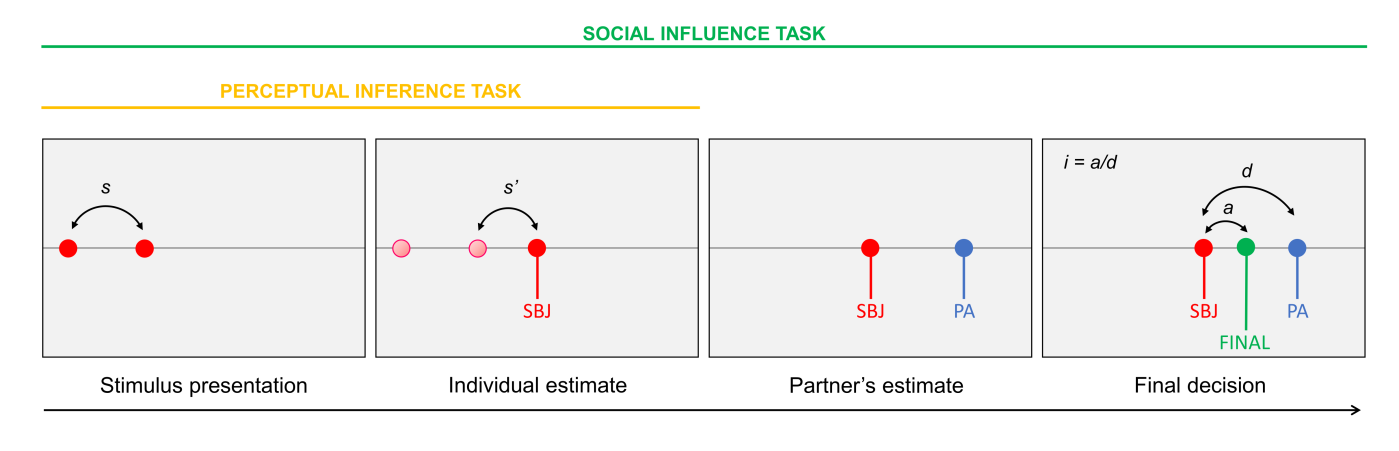

Taking advice from others requires confidence in their competence. This matters for interaction with peers, but also for collaboration with social robots and artificial agents. However, we do not always have access to information about others' competence or performance. In such uncertain environments, do our prior beliefs about the nature and competence of our interaction partners modulate our willingness to rely on their judgments? In a joint perceptual decision-making task, participants made perceptual judgments and then observed the simulated estimates of either a human participant, a social humanoid robot, or a computer. They could then modify their own estimates based on this feedback. The results show that participants' beliefs about the nature of their partner biased their compliance with its judgments: participants were more influenced by the social robot than by the human and computer partners. This difference emerged strongly at the very beginning of the task and decreased with repeated exposure to empirical feedback on the partner's responses, revealing the role of prior beliefs in social influence under uncertainty. Furthermore, the results of our functional task suggest an important difference between human-human and human-robot interaction in the absence of overt socially relevant signals from the partner: the former is modulated by social normative mechanisms, whereas the latter is guided by purely informational mechanisms linked to the perceived competence of the partner.
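The abstract does not say how "being influenced" by a partner was quantified. One measure commonly used in advice-taking research is the weight of advice: the fraction of the gap between a participant's initial estimate and the partner's estimate that the participant closes in the final estimate. The sketch below assumes that measure and a hypothetical `(initial, advice, final)` trial format; it is an illustration, not the authors' analysis. The block-wise average shows one way to check whether a partner-type difference shrinks with repeated exposure, as the abstract reports.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple

# Hypothetical trial format (assumption): (initial estimate, partner's estimate, final estimate).
Trial = Tuple[float, float, float]


def weight_of_advice(initial: float, advice: float, final: float) -> Optional[float]:
    """Fraction of the gap to the partner's estimate that the participant closed.

    0 means the partner was ignored, 1 means the partner's estimate was adopted.
    Returns None when the two estimates already agree (influence is undefined).
    """
    if advice == initial:
        return None
    return (final - initial) / (advice - initial)


def mean_influence(trials: Sequence[Trial]) -> Optional[float]:
    """Average weight of advice over the trials where it is defined."""
    values = [w for w in (weight_of_advice(*t) for t in trials) if w is not None]
    return mean(values) if values else None


def influence_by_block(trials: Sequence[Trial], n_blocks: int) -> List[Optional[float]]:
    """Mean influence in consecutive, equal-sized blocks of trials (leftover trials dropped)."""
    size = len(trials) // n_blocks
    return [mean_influence(trials[i * size:(i + 1) * size]) for i in range(n_blocks)]
```

With made-up toy trials for two partner types, a higher mean indicates more reliance on that partner, and comparing blocks shows how reliance evolves over the task:

```python
robot = [(10, 20, 17), (30, 40, 36), (50, 40, 44), (20, 30, 24)]
human = [(10, 20, 13), (30, 40, 34), (50, 40, 47), (20, 30, 23)]
print(mean_influence(robot), mean_influence(human))  # roughly 0.575 and 0.325
print(influence_by_block(robot, 2))                  # roughly [0.65, 0.5]
```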
