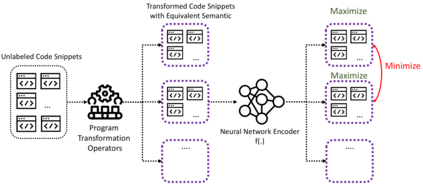

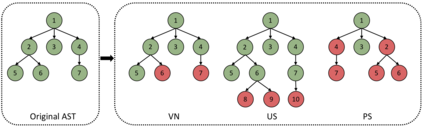

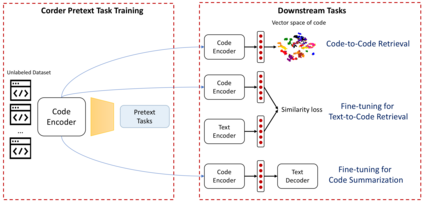

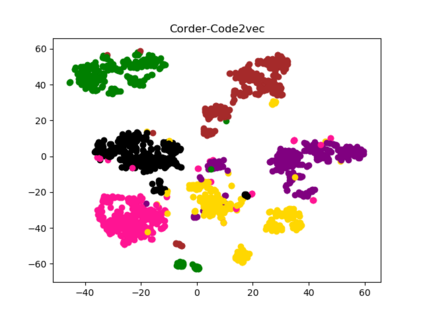

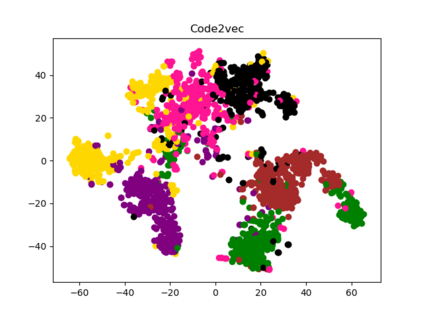

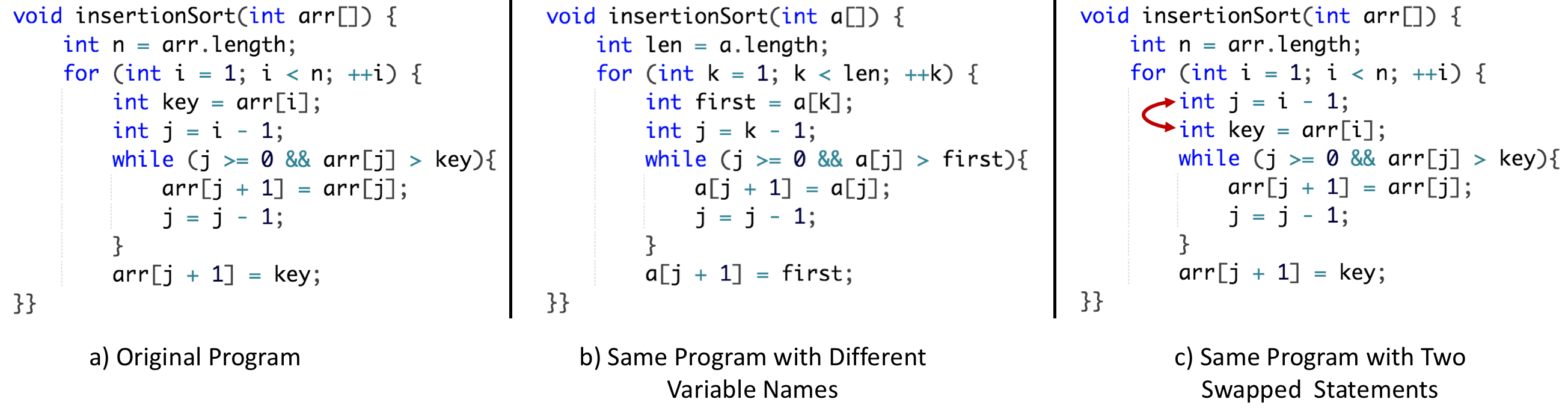

Code retrieval and summarization are useful tasks for developers, but it is also challenging to build indices or summaries of code that capture both syntactic and semantic essential information of the code. To build a decent model on source code, one needs to collect a large amount of data from code hosting platforms, such as Github, Bitbucket, etc., label them and train it from a scratch for each task individually. Such an approach has two limitations: (1) training a new model for every new task is time-consuming; and (2) tremendous human effort is required to label the data for individual downstream tasks. To address these limitations, we are proposing Corder, a self-supervised contrastive learning framework that trains code representation models on unlabeled data. The pre-trained model from Corder can be used in two ways: (1) it can produce vector representation of code and can be applied to code retrieval tasks that does not have labelled data; (2) it can be used in a fine-tuning process for tasks that might still require label data such as code summarization. The key innovation is that we train the source code model by asking it to recognize similar and dissimilar code snippets through a \textit{contrastive learning paradigm}. We use a set of semantic-preserving transformation operators to generate code snippets that are syntactically diverse but semantically equivalent. The contrastive learning objective, at the same time, maximizes the agreement between different views of the same snippets and minimizes the agreement between transformed views of different snippets. Through extensive experiments, we have shown that our Corder pretext task substantially outperform the other baselines for code-to-code retrieval, text-to-code retrieval and code-to-text summarization tasks.

翻译:代码检索和总和是开发者的有用任务, 但对于开发者来说, 建立包含代码合成和语义基本信息的代码索引或摘要也具有挑战性。 要在源代码上构建一个体面的模型, 人们需要从代码托管平台, 如 Github、 Bitbucket 等收集大量数据, 对其进行标签, 并对每个任务进行从头到尾的培训。 这种方法有两个局限性:(1) 培训每个新任务的新模式需要花费时间; (2) 需要大量人力来为单个下游任务标记数据。 为了应对这些限制, 我们提议一个自上而下的模型, 用于在未贴标签的数据中培养代码代表代码的代码模型。 预培训的模型可以用两种方式:(1) 它可以生成代码的矢量代表, 并用于没有贴标签数据的代码的代码检索任务; (2) 可以在一个微调的流程流程流程中使用相同的代码, 如代码和代码的代码等值。 关键创新是, 我们通过要求源代码模型模型模型的对等值进行培训, 要求它识别类似和不固定的版本。