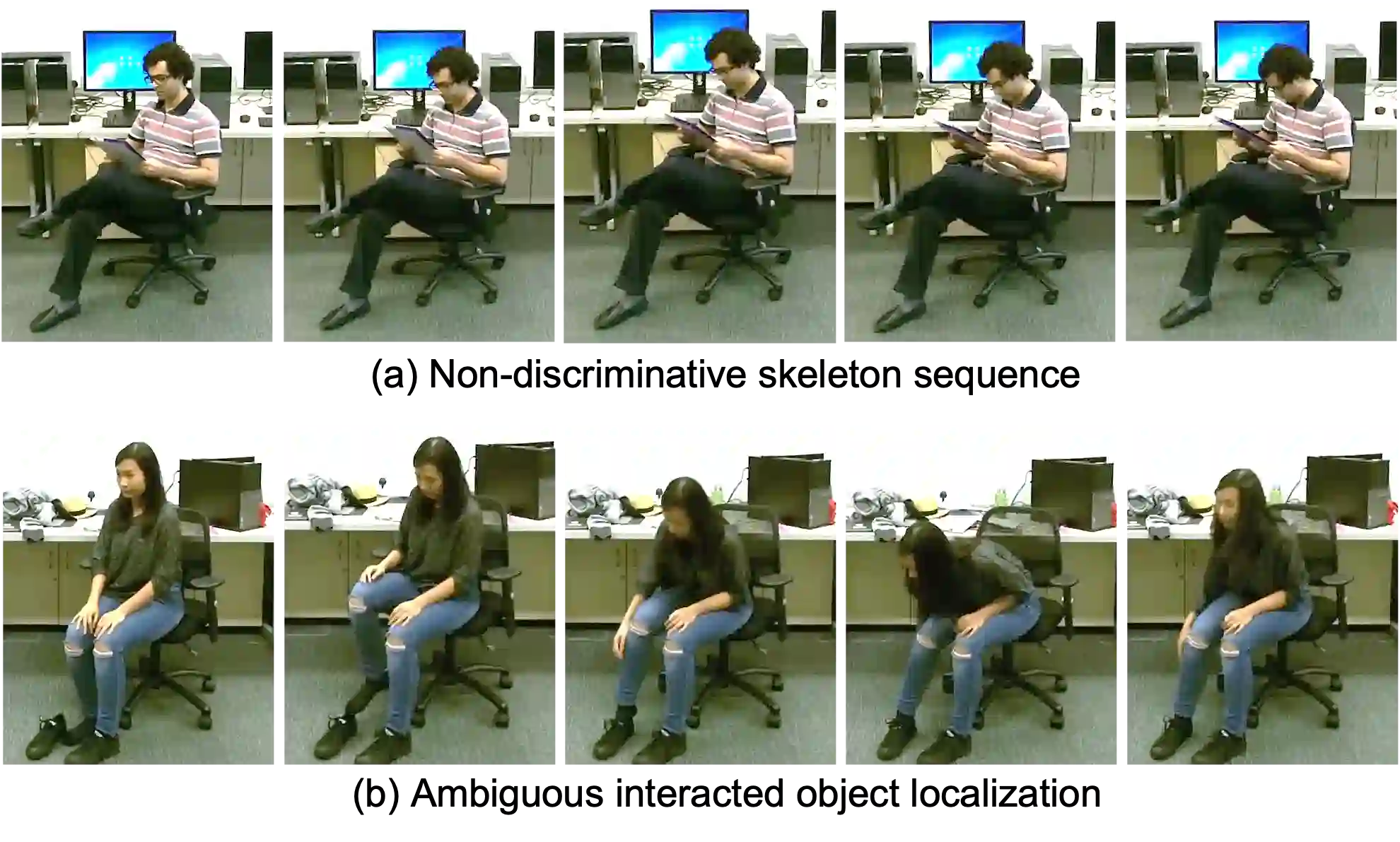

Skeleton data carries valuable motion information and is widely used in human action recognition. However, motion is not the only source of discriminative cues: a person's interaction with the environment also helps identify the action being performed. In this paper, we propose a joint learning framework for mutually assisted "interacted object localization" and "human action recognition" based on skeleton data. The two tasks are serialized and collaborate to promote each other: a preliminary action type derived from the skeleton alone helps improve interacted object localization, which in turn provides valuable cues for the final human action recognition. In addition, we exploit the temporal consistency of the interacted object as a constraint to better localize it in the absence of ground-truth labels. Extensive experiments on the SYSU-3D, NTU60 RGB+D, and Northwestern-UCLA datasets show that our method achieves the best performance or performance competitive with state-of-the-art methods for human action recognition. Visualization results show that our method also provides reasonable interacted object localization results.
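The abstract does not specify how the temporal consistency constraint is formulated. As a rough illustration only, one plausible form penalizes frame-to-frame changes in a soft localization distribution over candidate object regions. Everything in the sketch below is an assumption made for illustration: the function names `softmax` and `temporal_consistency_penalty`, the per-frame score layout, and the squared-difference penalty are hypothetical and are not taken from the paper.

```python
import math


def softmax(scores):
    """Turn one frame's raw region scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_consistency_penalty(frame_scores):
    """Hypothetical penalty: mean squared change between consecutive frames.

    frame_scores: list of T frames, each a list of K raw scores, one per
    candidate object region (an assumed layout, not the paper's).
    Returns 0.0 when the soft localization never changes over time.
    """
    if len(frame_scores) < 2:
        return 0.0  # a single frame has nothing to be inconsistent with
    probs = [softmax(row) for row in frame_scores]
    total = 0.0
    for prev, curr in zip(probs, probs[1:]):
        total += sum((c - p) ** 2 for p, c in zip(prev, curr))
    return total / (len(probs) - 1)


# A localization that stays on region 0 versus one that jumps every frame.
steady = [[2.0, 0.0, 0.0]] * 4
jumpy = [[5.0, 0.0, 0.0], [0.0, 5.0, 0.0], [5.0, 0.0, 0.0], [0.0, 5.0, 0.0]]
print(temporal_consistency_penalty(steady))
print(temporal_consistency_penalty(jumpy))
```

Under these assumptions, a term like this could be added to the action recognition loss so that the localization branch receives a training signal without object annotations. The actual constraint used by the authors may differ.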
