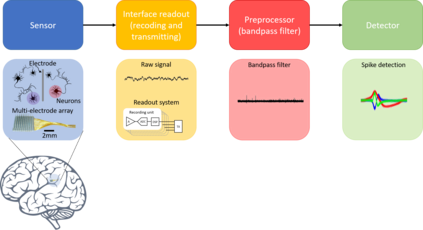

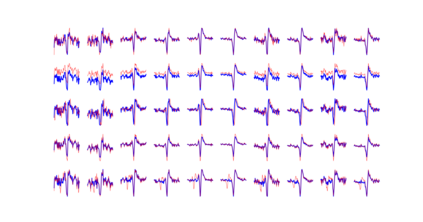

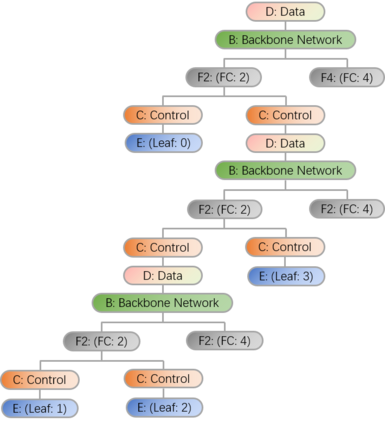

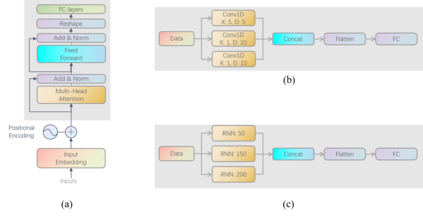

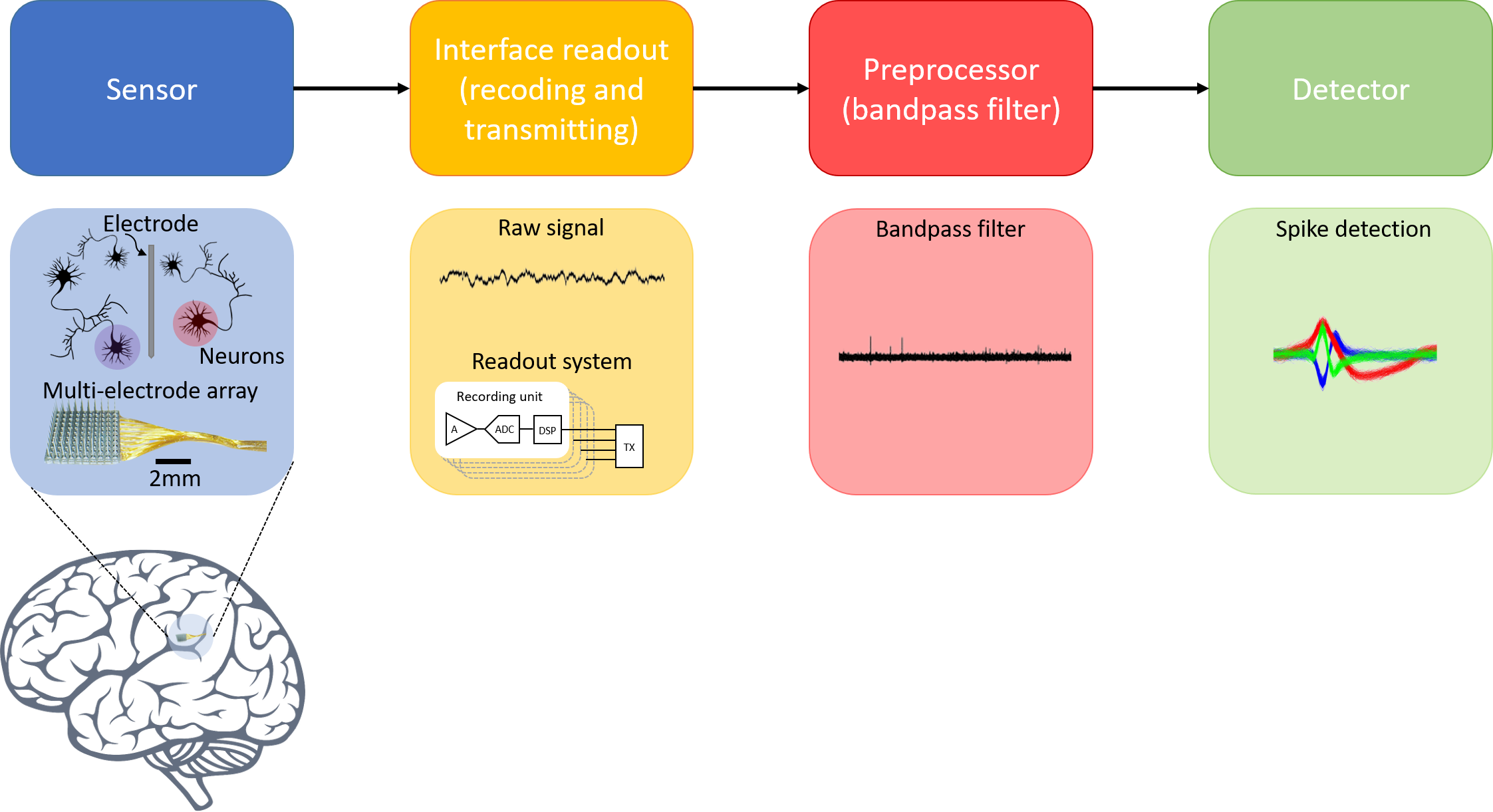

Brain-computer interfaces (BCIs) allow electronic devices to communicate directly with the brain. For most medical BCI tasks, multi-unit activity or local field potentials are sufficient for decoding. For BCIs used in neuroscience research, however, it is important to separate out the activity of individual neurons. As large-scale silicon probe technology develops and the number of probe channels grows, manually interpreting and labeling spikes is becoming increasingly impractical. In this paper, we propose a novel modeling framework, the Adaptive Contrastive Learning Model, which learns representations of spikes through contrastive learning, with a mutual-information-maximizing loss function as its theoretical basis. Because data with similar features share the same label whether the task is framed as multi-class or binary classification, we decompose the multi-class problem into multiple binary classification problems, which improves both accuracy and runtime efficiency. Moreover, we introduce a series of enhancements for the spikes, which also mitigate the loss of classification performance caused by overlapping spikes.
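The abstract does not name the mutual-information estimator. A common choice for contrastive learning is the InfoNCE loss, for which log(N) minus the loss is a lower bound on the mutual information between two views of the same sample. The sketch below assumes InfoNCE, uses the raw waveform as a stand-in for a learned encoder, and uses a hypothetical `augment` function (random amplitude scaling plus Gaussian noise) in place of the paper's actual spike enhancements. It is an illustration, not the authors' implementation.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)  # small epsilon avoids division by zero


def augment(spike, rng, noise_std=0.05, scale_range=(0.9, 1.1)):
    """Hypothetical spike augmentation: random amplitude scaling plus Gaussian noise."""
    s = rng.uniform(*scale_range)
    return [s * x + rng.gauss(0.0, noise_std) for x in spike]


def info_nce(z_a, z_b, temperature=0.1):
    """InfoNCE loss over N positive pairs (z_a[i], z_b[i]).

    For each anchor z_a[i], the other entries z_b[j] (j != i) act as negatives.
    log(N) - loss is a lower bound on the mutual information between the views.
    """
    n = len(z_a)
    total = 0.0
    for i in range(n):
        logits = [cosine(z_a[i], z_b[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum - logits[i]  # -log softmax probability of the positive pair
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy "spikes": made-up 5-sample waveforms, not real recordings.
    spikes = [[0.0, -1.0, 0.5, 0.2, 0.0],
              [0.0, 0.3, -0.8, 0.6, 0.1],
              [0.2, 0.0, -0.2, -1.0, 0.4]]
    view_a = [augment(s, rng) for s in spikes]
    view_b = [augment(s, rng) for s in spikes]
    # Identity "encoder" for illustration; a real model would use a learned network.
    loss = info_nce(view_a, view_b)
    print(f"InfoNCE loss: {loss:.4f}, MI lower bound: {math.log(len(spikes)) - loss:.4f}")
```

Minimizing this loss pulls two augmented views of the same spike together and pushes views of different spikes apart, which is what lets waveforms from the same neuron cluster in the learned representation.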

翻译:脑计算机界面是电子设备与大脑直接沟通的方式。 对于大多数医学类型的脑计算机界面任务,多个神经元单位或局部领域潜力的活动足以解码。但对于神经科学研究中使用的脑计算机界面,重要的是分离个体神经元的活动。随着大型硅技术的开发以及探测渠道数量的增加,人工解释和标签钉钉正在变得越来越不切实际。在本文中,我们提出了一个新的模型框架:适应性对抗学习模型,通过基于最大限度地增加相互信息损失功能的对比性学习,从峰值中学习表现。基于类似特征的数据具有相同的标签,无论是多分类还是二分解的,我们通过这种理论支持,将多分类问题简化为多个二进级分类,提高准确性和运行时间效率。此外,我们还为钉钉引入了一系列强化措施,同时解决了由于叠叠叠的钉子而影响分类效应的问题。