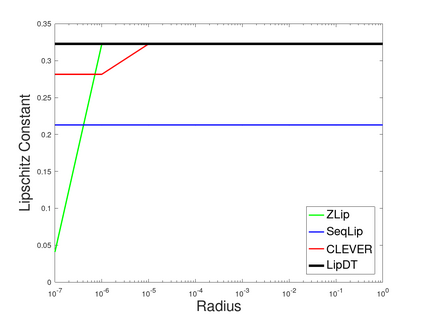

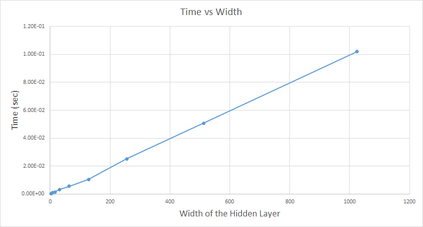

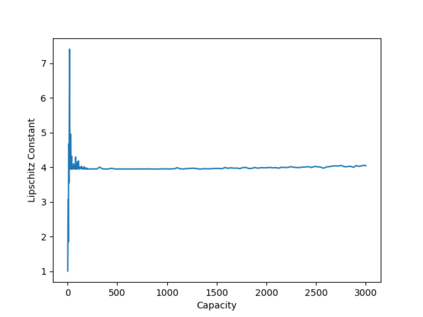

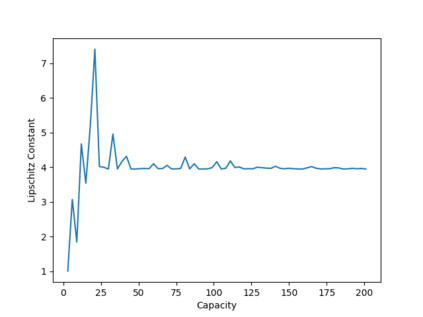

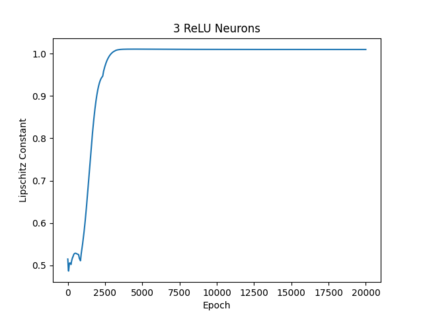

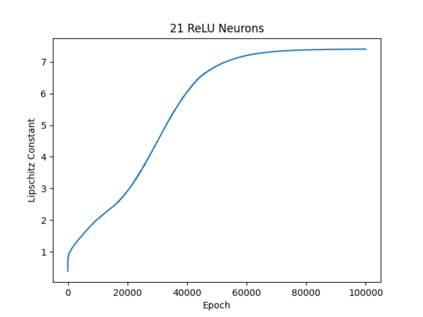

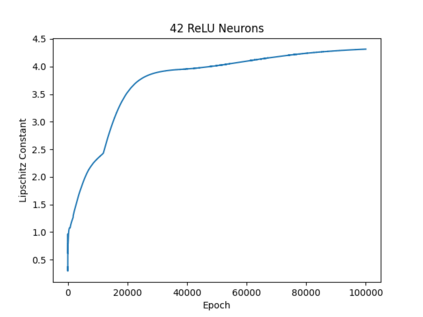

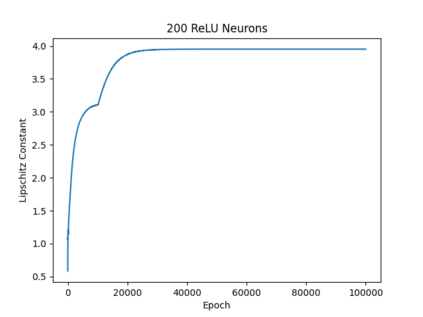

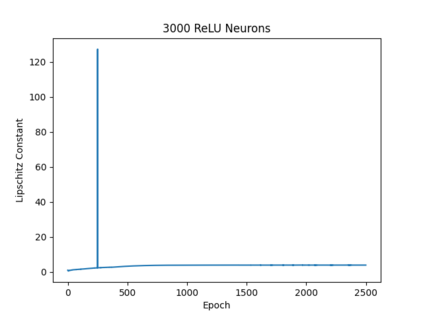

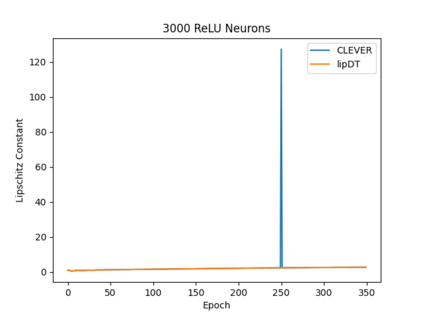

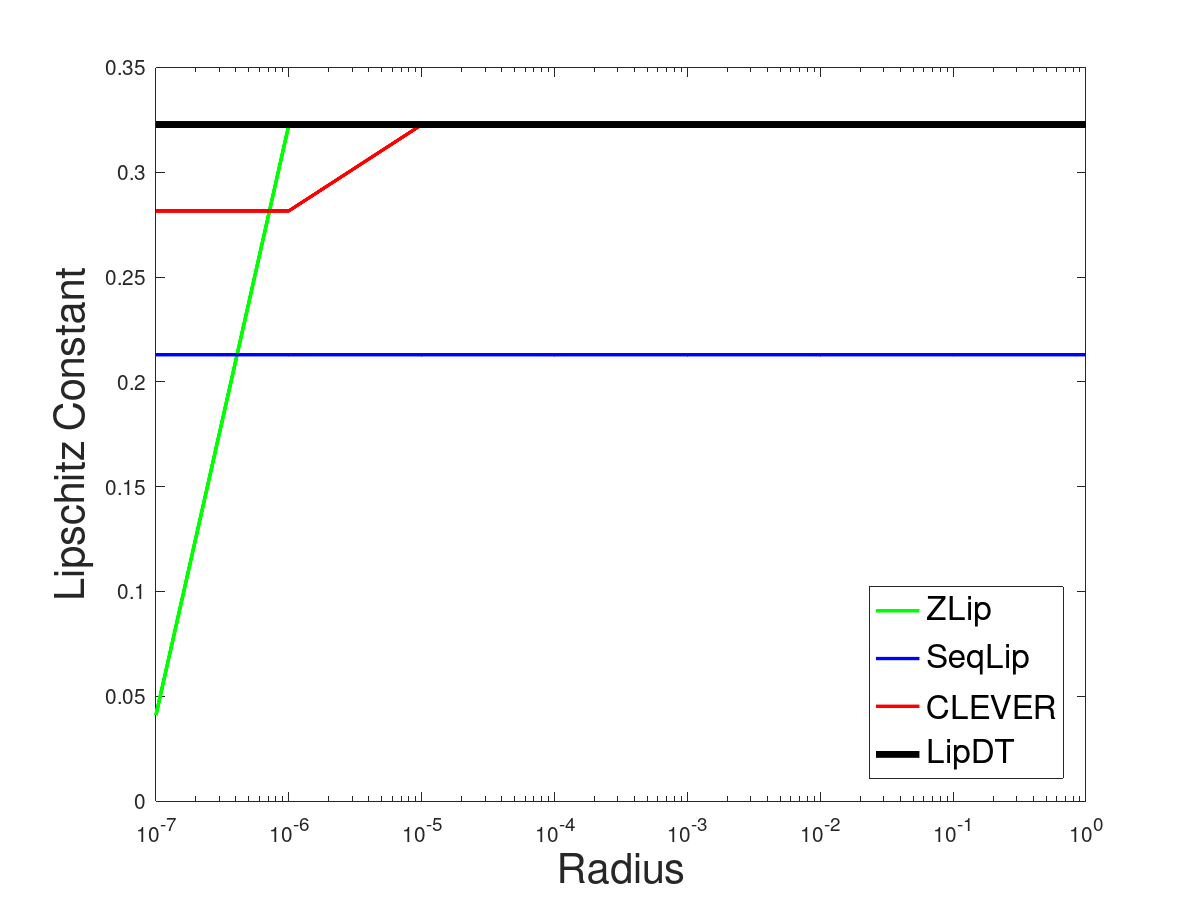

We present a domain-theoretic framework for validated robustness analysis of neural networks. We first analyze the global robustness of a general class of networks. Then, using the fact that Edalat's domain-theoretic L-derivative coincides with Clarke's generalized gradient, we extend our framework for attack-agnostic local robustness analysis. Our framework is ideal for designing algorithms which are correct by construction. We exemplify this claim by developing a validated algorithm for estimation of Lipschitz constant of feedforward regressors. We prove the completeness of the algorithm over differentiable networks, and also over general position ReLU networks. We obtain computability results within the framework of effectively given domains. Using our domain model, differentiable and non-differentiable networks can be analyzed uniformly. We implement our algorithm using arbitrary-precision interval arithmetic, and present the results of some experiments. Our implementation is truly validated, as it handles floating-point errors as well.

翻译:我们为神经网络的可靠度分析提出了一个域论框架。 我们首先分析整个网络类别的全球稳健性。 然后, 我们利用Edalat的域- 理论L- 遗传性与克拉克的通用梯度相吻合这一事实, 扩展了攻击- 不可知性本地稳健性分析框架。 我们的框架是设计通过构建正确的算法的理想框架。 我们通过开发一个有效的算法来估计Lipschitz 向后退者向后退的常数来证明这一主张。 我们证明了对不同网络的算法的完整性, 也证明了一般位置 ReLU 网络的完整性。 我们在有效给定的域框架内取得了可比较性结果。 我们可以使用我们的域模型, 对不同和无差别的网络进行统一分析。 我们使用任意的精度间距算法来应用我们的算法, 并展示一些实验的结果。 我们的运用得到了真正的验证, 因为它也处理浮点错误 。