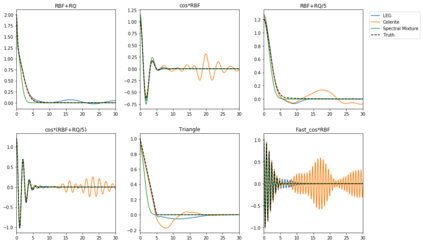

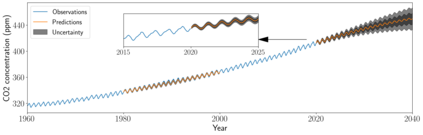

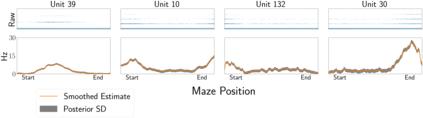

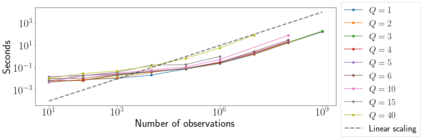

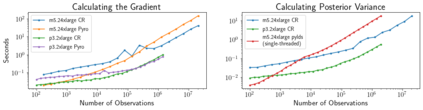

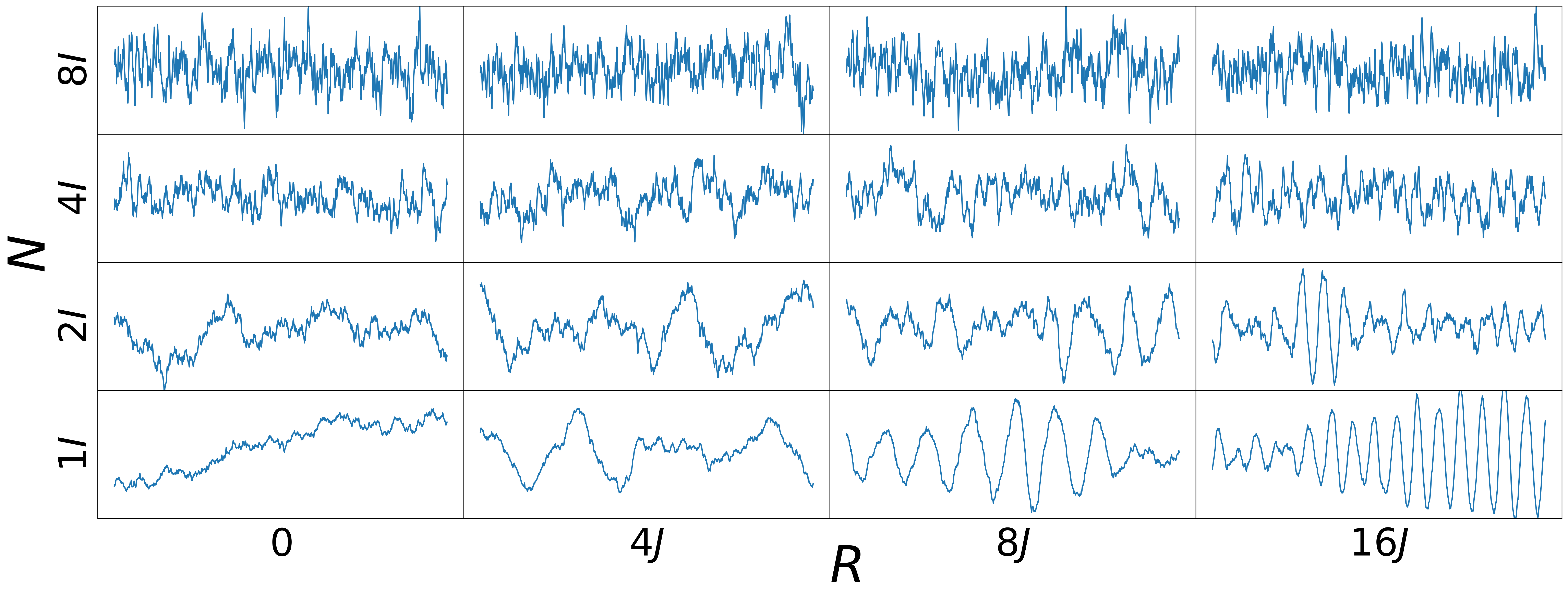

Gaussian processes (GPs) provide powerful probabilistic frameworks for interpolation, forecasting, and smoothing, but have been hampered by computational scaling issues. Here we investigate data sampled in one dimension (e.g., a scalar or vector time series sampled at arbitrarily spaced intervals), for which state-space models are popular due to their linearly scaling computational costs. It has long been conjectured that state-space models are general, able to approximate any one-dimensional GP. We provide the first general proof of this conjecture, showing that any stationary GP in one dimension with vector-valued observations governed by a Lebesgue-integrable continuous kernel can be approximated to any desired precision using a specifically chosen state-space model: the Latent Exponentially Generated (LEG) family. This new family offers several advantages compared to the general state-space model: it is always stable (no unbounded growth), its covariance can be computed in closed form, and its parameter space is unconstrained (allowing straightforward estimation via gradient descent). The theorem's proof also draws connections to Spectral Mixture Kernels, providing insight into this popular family of kernels. We develop parallelized algorithms for performing inference and learning in the LEG model, test the algorithms on real and synthetic data, and demonstrate scaling to datasets with billions of samples.
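The abstract claims three properties at once: stability, a closed-form covariance, and an unconstrained parameter space. The abstract does not spell out the parameterization, so the sketch below assumes a latent process `z(t)` with drift matrix `-G/2`, where `G = N Nᵀ + R - Rᵀ` for arbitrary real `d×d` matrices `N` and `R`, observed through `x(t) = B z(t)`. Under that assumption the stationary latent covariance is the identity (it solves the Lyapunov equation), which gives the closed form `C(τ) = B exp(-G|τ|/2) Bᵀ` for `τ ≥ 0`. The helper names `expm` and `leg_covariance` are hypothetical. The last lines show one illustrative link to spectral-mixture-style kernels: a 2-D latent with `N = √(2λ) I` and `R = [[0, ω], [-ω, 0]]` yields the damped cosine `exp(-λτ) cos(ωτ)`. This is a quasi-periodic kernel like a spectral mixture component, but with an exponential rather than Gaussian envelope. It is an example only, not a reconstruction of the paper's proof.

```python
import numpy as np

def expm(M, terms=30):
    """Matrix exponential via scaling-and-squaring with a truncated Taylor series."""
    norm = np.linalg.norm(M, ord=1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    A = M / 2.0 ** s                      # scaled so that ||A||_1 <= 1/2
    result, term = np.eye(len(M)), np.eye(len(M))
    for k in range(1, terms):
        term = term @ A / k               # A^k / k!
        result = result + term
    for _ in range(s):                    # undo the scaling: exp(M) = exp(A)^(2^s)
        result = result @ result
    return result

def leg_covariance(tau, N, R, B):
    """Cov(x(t + tau), x(t)) for the ASSUMED LEG-style model x(t) = B z(t)."""
    G = N @ N.T + R - R.T                 # any real N, R are allowed: no constraints
    if tau < 0:
        return leg_covariance(-tau, N, R, B).T
    return B @ expm(-0.5 * G * tau) @ B.T  # closed form, since latent stationary cov is I

rng = np.random.default_rng(0)
d, m = 4, 2
N, R, B = rng.normal(size=(d, d)), rng.normal(size=(d, d)), rng.normal(size=(m, d))
A = -0.5 * (N @ N.T + R - R.T)                        # latent drift matrix
print(np.allclose(A + A.T + N @ N.T, 0))              # Lyapunov equation holds with Sigma = I
print(np.all(np.linalg.eigvals(A).real < 0))          # stable: every mode decays
print(np.allclose(leg_covariance(0.0, N, R, B), B @ B.T))

# 2-D special case: damped cosine exp(-lam * tau) * cos(omega * tau)
lam, omega = 0.5, 3.0
N2 = np.sqrt(2 * lam) * np.eye(2)
R2 = np.array([[0.0, omega], [-omega, 0.0]])
B2 = np.array([[1.0, 0.0]])
for tau in (0.0, 0.4, 1.3):
    print(leg_covariance(tau, N2, R2, B2)[0, 0], np.exp(-lam * tau) * np.cos(omega * tau))
```

Stability comes for free here because the symmetric part of `G` is `N Nᵀ`, which is positive semidefinite. The eigenvalues of the drift therefore have nonpositive real parts for every choice of `N` and `R` (strictly negative when `N` has full rank), so gradient descent can move freely through parameter space.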

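The abstract attributes the popularity of state-space models to their linearly scaling cost on arbitrarily spaced samples. The sketch below makes that concrete with a sequential Kalman filter. This is a standard textbook technique swapped in for illustration; it is not the parallelized algorithm the authors develop. The filter evaluates the exact Gaussian log-likelihood in O(n) steps, and it is checked against an O(n³) dense GP likelihood built from the closed-form covariance. It reuses the same assumed parameterization `G = N Nᵀ + R - Rᵀ` and adds i.i.d. Gaussian observation noise with variance `noise_var` (also an assumption). The function names are hypothetical, and the data are random placeholders rather than results from the paper.

```python
import numpy as np

def expm(M, terms=30):
    """Matrix exponential via scaling-and-squaring with a truncated Taylor series."""
    norm = np.linalg.norm(M, ord=1)
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    A = M / 2.0 ** s
    result, term = np.eye(len(M)), np.eye(len(M))
    for k in range(1, terms):
        term = term @ A / k
        result = result + term
    for _ in range(s):
        result = result @ result
    return result

def kalman_loglik(ts, ys, N, R, B, noise_var):
    """O(n) log-likelihood of observations ys[k] (shape (m,)) at sorted times ts[k]."""
    d, m = N.shape[0], B.shape[0]
    G = N @ N.T + R - R.T
    mean, P = np.zeros(d), np.eye(d)            # stationary latent prior N(0, I)
    Lam = noise_var * np.eye(m)
    loglik = 0.0
    for k in range(len(ts)):
        if k > 0:
            F = expm(-0.5 * G * (ts[k] - ts[k - 1]))    # transition over an arbitrary gap
            mean = F @ mean
            P = F @ P @ F.T + (np.eye(d) - F @ F.T)     # process noise keeps stationary cov = I
        S = B @ P @ B.T + Lam                           # innovation covariance
        v = ys[k] - B @ mean                            # innovation
        _, logdet = np.linalg.slogdet(2 * np.pi * S)
        loglik -= 0.5 * (v @ np.linalg.solve(S, v) + logdet)
        K = np.linalg.solve(S, B @ P).T                 # Kalman gain P B^T S^{-1}
        mean = mean + K @ v
        P = P - K @ S @ K.T
    return loglik

def dense_loglik(ts, ys, N, R, B, noise_var):
    """O(n^3) reference: stack all observations and use the full covariance matrix."""
    G = N @ N.T + R - R.T
    n, m = ys.shape
    K = np.zeros((n * m, n * m))
    for i in range(n):
        for j in range(n):
            tau = ts[i] - ts[j]
            C = B @ expm(-0.5 * G * abs(tau)) @ B.T     # Cov(x(t + |tau|), x(t))
            K[i * m:(i + 1) * m, j * m:(j + 1) * m] = C if tau >= 0 else C.T
    K += noise_var * np.eye(n * m)
    y = ys.reshape(-1)
    _, logdet = np.linalg.slogdet(2 * np.pi * K)
    return -0.5 * (y @ np.linalg.solve(K, y) + logdet)

rng = np.random.default_rng(1)
d, m, n = 3, 2, 40
N, R, B = rng.normal(size=(d, d)), rng.normal(size=(d, d)), rng.normal(size=(m, d))
ts = np.sort(rng.uniform(0, 10, size=n))        # arbitrarily spaced sample times
ys = rng.normal(size=(n, m))                    # placeholder observations
print(np.isclose(kalman_loglik(ts, ys, N, R, B, 0.1), dense_loglik(ts, ys, N, R, B, 0.1)))
```

Each step only exponentiates a `d×d` matrix, so the filter costs O(n d³) overall versus O((nm)³) for the dense solve. Because the filter is sequential, it does not by itself deliver the parallel speedups the abstract describes.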