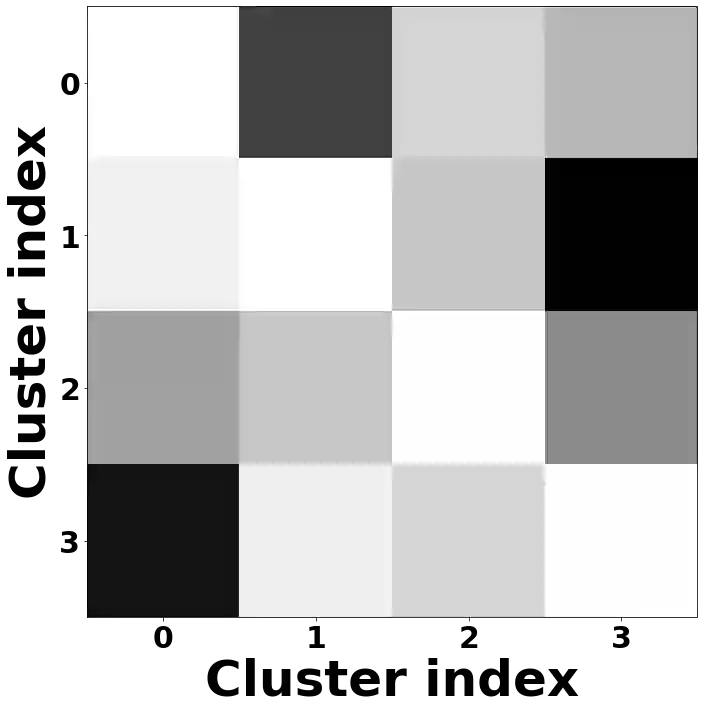

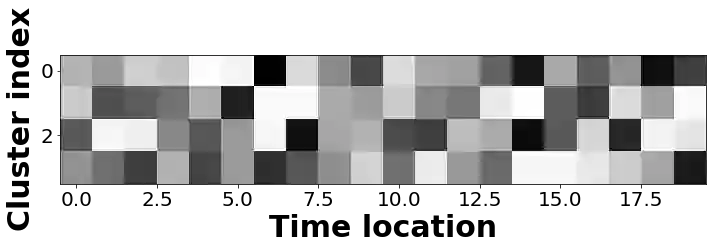

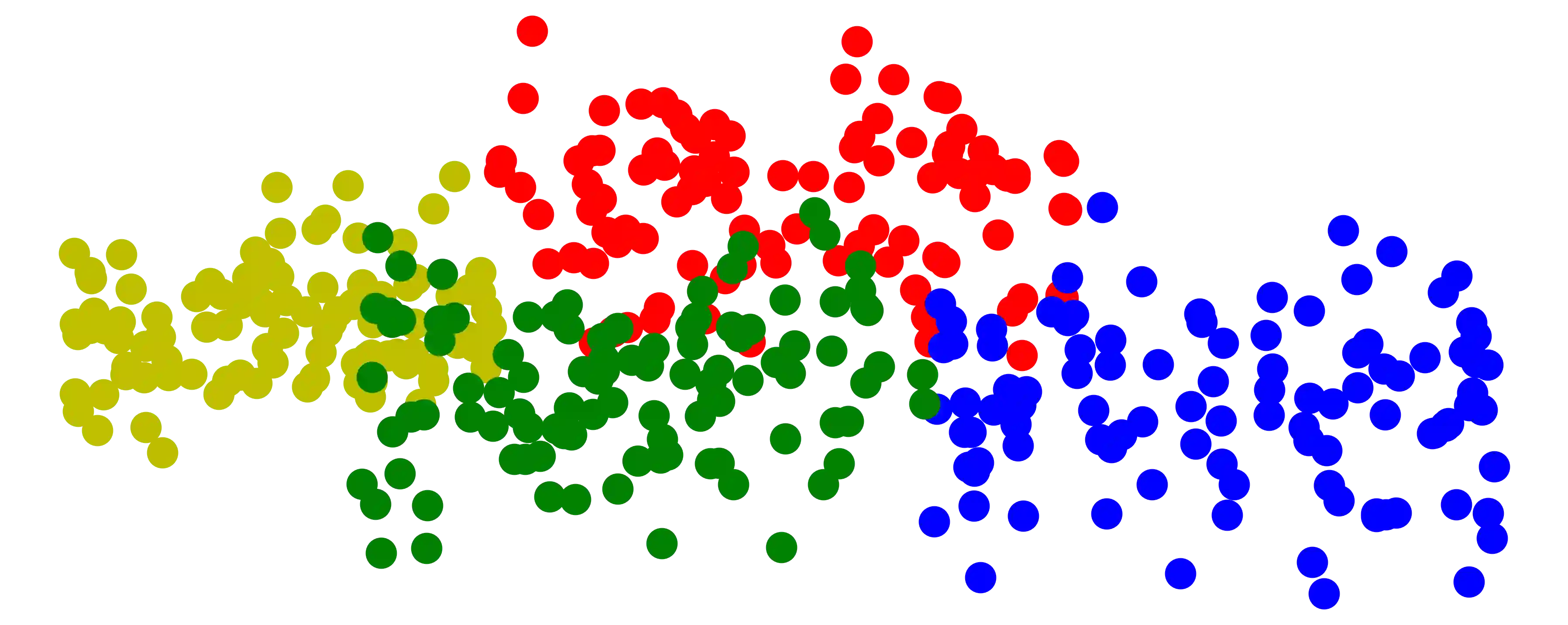

Temporal action segmentation is the task of assigning an action label to every frame of a video. Annotating every frame in a large video corpus to build a comprehensive supervised training set is expensive, so in this work we propose an unsupervised method, SSCAP, that operates on a corpus of unlabeled videos and predicts a likely set of temporal segments across the videos. SSCAP uses Self-Supervised learning to extract discriminative features and then applies a novel Co-occurrence Action Parsing algorithm that both captures the correlation among the sub-actions underlying the structure of activities and estimates the temporal path of the sub-actions in an accurate and general way. We evaluate on two classic datasets (Breakfast, 50Salads) and on the emerging fine-grained action dataset FineGym, which has more complex activity structures and more similar sub-actions. Results show that SSCAP achieves state-of-the-art performance on all three datasets and can even outperform some weakly-supervised approaches, demonstrating its effectiveness and generalizability.
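To make the task's input and output concrete, here is a minimal sketch of how per-frame action labels relate to temporal segments. It is not part of SSCAP; the function name `frames_to_segments`, the integer label encoding, and the half-open `(start, end, label)` segment format are assumptions chosen for illustration.

```python
from itertools import groupby
from typing import List, Tuple


def frames_to_segments(labels: List[int]) -> List[Tuple[int, int, int]]:
    """Collapse per-frame labels into (start, end_exclusive, label) segments.

    Hypothetical helper for illustration only: consecutive frames that share
    a label are merged into a single temporal segment.
    """
    segments = []
    start = 0
    for label, run in groupby(labels):
        length = sum(1 for _ in run)  # number of consecutive frames with this label
        segments.append((start, start + length, label))
        start += length
    return segments


# Nine frames labeled with three (made-up) sub-action ids.
frame_labels = [0, 0, 0, 2, 2, 1, 1, 1, 1]
print(frames_to_segments(frame_labels))
# [(0, 3, 0), (3, 5, 2), (5, 9, 1)]
```

A frame-wise classifier or clustering step would produce something like `frame_labels`; the segment view is simply a run-length encoding of that sequence.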
