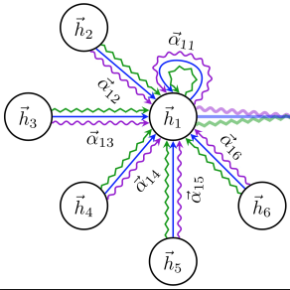

Message aggregation is a key component of communication in multi-agent reinforcement learning (Comm-MARL). Graph attention networks (GAT) have recently become prevalent in Comm-MARL: agents are represented as nodes, and messages are aggregated through weighted message passing. While successful, GAT can lead to homogeneous message-aggregation strategies, and the "core" agent may excessively influence other agents' behaviors, which can severely limit multi-agent coordination. To address this challenge, we first study the adjacency tensor of the communication graph and show that the homogeneity of message aggregation can be measured by the normalized tensor rank. Since rank optimization is known to be NP-hard, we define a new nuclear norm, a convex surrogate of the normalized tensor rank, to replace the rank. Leveraging this norm, we further propose a plug-and-play regularizer on the adjacency tensor, named Normalized Tensor Nuclear Norm Regularization (NTNNR), to actively enrich the diversity of message aggregation. We extensively evaluate GAT with the proposed regularizer in both cooperative and mixed cooperative-competitive scenarios. The results demonstrate that aggregating messages with NTNNR-enhanced GAT improves training efficiency and achieves higher asymptotic performance than existing message aggregation methods. When NTNNR is applied to existing graph-attention Comm-MARL methods, we also observe significant performance improvements on the StarCraft II micromanagement benchmarks.
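
To make the link between rank, nuclear norm, and aggregation diversity concrete, the following is a minimal sketch and not the paper's exact definition of NTNNR. It assumes the adjacency tensor is a stack of row-stochastic attention matrices of shape `(heads, n_agents, n_agents)` and uses one simple normalization, the nuclear norm divided by `sqrt(n_agents)` times the Frobenius norm. The function names, the normalization, and the `weight` parameter are all illustrative assumptions.

```python
import numpy as np


def normalized_nuclear_norm(adjacency):
    """Illustrative diversity score for a stack of attention matrices.

    adjacency: array of shape (heads, n_agents, n_agents).
    Returns the mean over heads of ||A||_* / (sqrt(n) * ||A||_F).
    This normalization is an assumption chosen for illustration only.
    """
    # Singular values of every slice at once, shape (heads, n_agents).
    singular_values = np.linalg.svd(adjacency, compute_uv=False)
    nuclear = singular_values.sum(axis=-1)
    frobenius = np.sqrt((singular_values ** 2).sum(axis=-1))
    n_agents = adjacency.shape[-1]
    return float(np.mean(nuclear / (np.sqrt(n_agents) * frobenius)))


def regularized_loss(task_loss, adjacency, weight=0.1):
    """Hypothetical plug-in: reward diverse aggregation by subtracting the score."""
    return task_loss - weight * normalized_nuclear_norm(adjacency)


# Homogeneous case: every agent attends to the others with identical weights,
# dominated by a "core" agent 0, so each slice has rank 1.
core_row = np.array([0.7, 0.1, 0.1, 0.1])
homogeneous = np.tile(core_row, (1, 4, 1))  # shape (1, 4, 4)

# Diverse case: every agent uses a different aggregation pattern (full rank).
diverse = np.eye(4)[None, :, :]  # shape (1, 4, 4)

score_homogeneous = normalized_nuclear_norm(homogeneous)
score_diverse = normalized_nuclear_norm(diverse)
print(score_homogeneous, score_diverse)
```

Under this normalization a rank-1 slice scores `1 / sqrt(n_agents)`, the lowest possible value, and a slice with equal singular values scores 1, so a larger score corresponds to more diverse aggregation. In a real training loop the same quantity would have to be computed in an autodiff framework so that gradients reach the attention weights.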
